With the support we received from Confluent and our cloud provider, moving our on-prem Kafka deployment to Confluent Cloud went well, as did the overall cloud migration.

As the largest online marketplace in Switzerland, Ricardo is accustomed to tackling large-scale IT initiatives and completing them on time. So when the company recently decided to migrate from its data center to the cloud, it made a bold move: canceling its data center contract in advance.

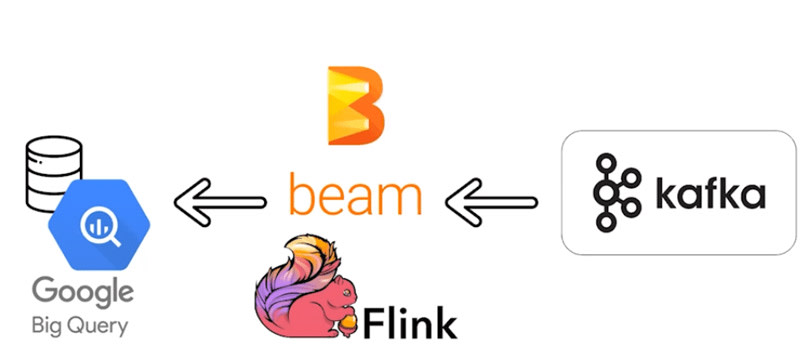

The move was made simpler, in part, because Ricardo was already using Apache Kafka® for event streaming in its data center. Working with Confluent engineers, Kaymak and his data intelligence team easily transitioned from on-prem Kafka to Confluent Cloud, and completed the overall cloud migration on schedule.
