
Build Faster.

Scale Smarter.

With the World's Data Streaming Platform, by the original co-creators of Apache Kafka®

- Eliminate costly data infrastructure. Replace point-to-point, batch, and streaming pipelines with one platform. See how SAS saved 69%.

- Clean data at the source. Prevent downstream data delays and quality issues. See how Notion powers 100M+ users daily.

- Be ready for AI now. Ensure AI/ML apps, agents, and systems access the right data in real time. See 14x predictive performance at GEP.

New in Confluent Cloud: Data Streaming for Any Workload, At Any Scale

New in Confluent Intelligence: A2A, Multivariate Anomaly Detection, & more

Migrate to Confluent Cloud in Days, Not Weeks, with Kafka Copy Paste (KCP)

Introducing Confluent Private Cloud: Cloud-Level Agility for Your Private Infra

Manage and Monitor Across Environments With Unified Stream Manager

The 2025 Data Streaming Report


Building Data-Driven, AI-First Companies

For every data opportunity, Confluent helps you innovate faster and operate more efficiently.

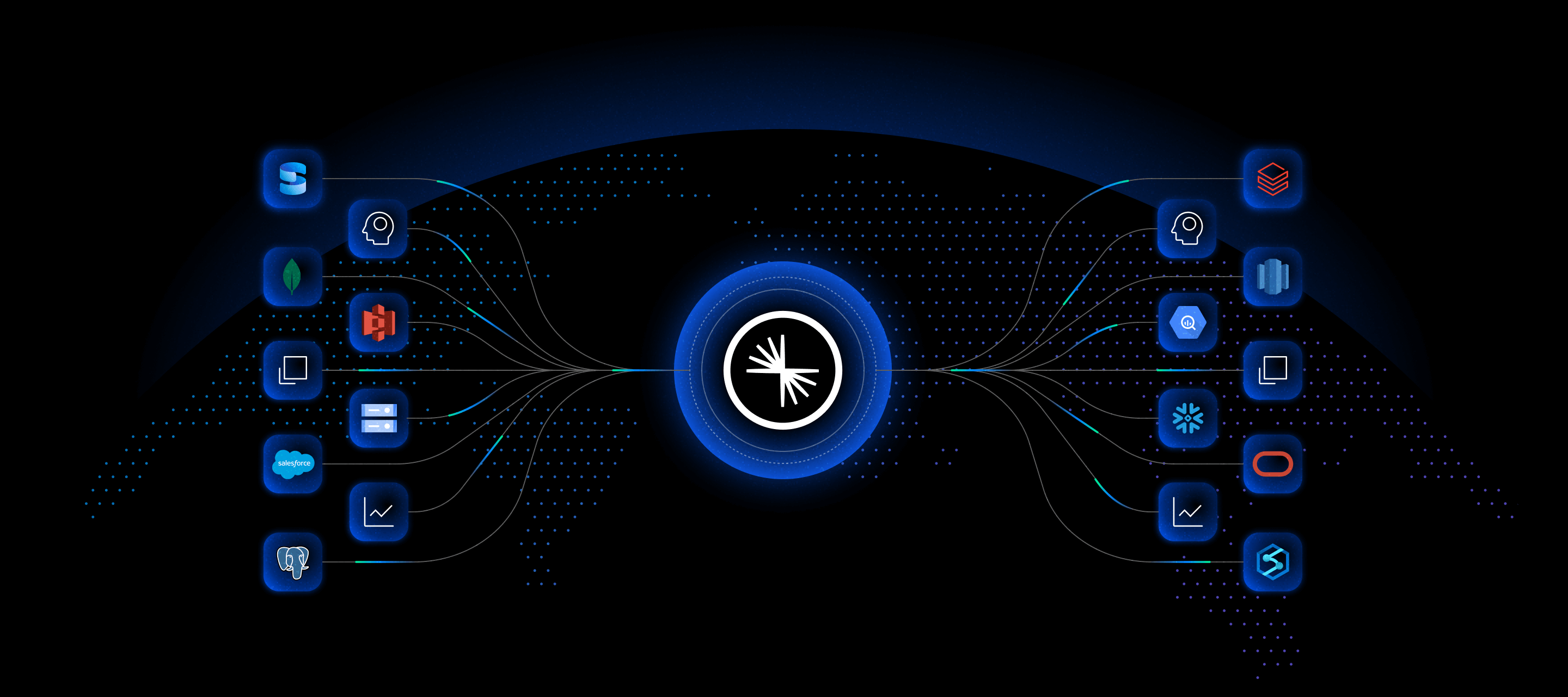

Power Resilient Apps & Agents With Event-Driven Design

With Confluent, you can build fault-tolerant apps and microservices that respond to business events in real time.

Stream real-time events, setting data in motion across your entire business.

Connect legacy databases, modern SaaS apps, and more with pre-built integrations.

Govern and process data streams so your apps act on trusted, secure, and compliant data.

Industry Solutions That Drive Results

Financial Services

Transform your business with real-time data that drives agility, security, and growth.

Key Outcomes

- Detect fraud in milliseconds.

- Analyze risk in real time for smarter decisions.

- Power real-time payments with speed and reliability.

- Deliver regulatory reporting with trusted data.

The Confluent Difference

Confluent provides a cloud-native, complete data streaming platform that is available everywhere your business needs to be, at a lower cost than open source Kafka or your favorite hyperscaler, with transparent consumption-based pricing.

Cloud-Native

Kafka completely re-architected for the cloud to create a 10X better cloud service, eliminating your Kafka ops burden.

Complete

A complete, enterprise-grade data streaming platform with all the essential tools enabling developers to build quickly, reliably, and securely.

Everywhere

Freedom of choice to deploy Kafka anywhere from on-prem to cloud and across clouds.

See Confluent In Action


Contact a Specialist

Receive a tailored solution that fits your data streaming needs, with guidance every step of the way.


Visit the Demo Center

Learn how to build real-time applications, data streaming pipelines, and much more.

Frequently Asked Questions

Data streaming is the practice of treating information as a continuous flow of events rather than static batches. Instead of waiting for data to pile up before it’s processed, insights and actions can be triggered the moment new events occur. Apache Kafka® has become the standard technology that makes this possible.
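To make the batch-versus-stream contrast concrete, here is a minimal Python sketch. It assumes a hypothetical payment event shaped as a dict with an `amount` field and an arbitrary alert threshold; `process_batch` and `process_stream` are illustrative names, not Kafka or Confluent APIs. The only point it shows is timing: the batch function can run only once all events have accumulated, while the streaming function reacts to each event as it is read.

```python
from typing import Callable, Iterable, List

# Hypothetical event shape, for illustration only: {"id": int, "amount": float}
Event = dict


def process_batch(events: List[Event], threshold: float) -> List[Event]:
    """Batch style: the full list must already exist before any check runs."""
    return [e for e in events if e["amount"] > threshold]


def process_stream(
    events: Iterable[Event],
    threshold: float,
    on_alert: Callable[[Event], None],
) -> None:
    """Streaming style: each event is checked the moment it is received."""
    for event in events:
        if event["amount"] > threshold:
            # Act immediately, without waiting for later events to arrive.
            on_alert(event)
```

With `alerts = []`, calling `process_stream(iter(payments), 1000.0, alerts.append)` records each large payment as soon as it is read from the iterator, whereas `process_batch` returns only after the whole list has been collected.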

Confluent takes Kafka further, rearchitecting it for the cloud. Both Confluent Cloud and Confluent Platform provide high-performance, enterprise-ready data streaming capabilities—built to scale seamlessly, reduce operations overhead, and deliver faster results. Confluent Cloud is powered by Kora, our cloud-native engine that provides autoscaling and 20-90%+ throughput savings.

Traditional APIs follow a request-response model, where apps must ask for data to receive it. Data streaming flips the model: producers continuously publish events to a central log, and consumers subscribe to whatever streams they need in real time. This event-driven approach enables more scalable, decoupled architectures.
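The publish/subscribe model described here can be illustrated with a minimal in-memory Python sketch. `EventLog`, `Consumer`, `publish`, and `poll` are hypothetical names chosen for illustration, not the Kafka or Confluent client API, and a real Kafka topic is partitioned, replicated, and durable, none of which is modeled. What the sketch does show is the decoupling: producers append to a shared log without knowing who reads it, and each consumer tracks its own read position (offset).

```python
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class EventLog:
    """Toy append-only log standing in for a topic (not the Kafka client API)."""
    events: List[Any] = field(default_factory=list)

    def publish(self, event: Any) -> int:
        # Producers only append; they do not know which consumers exist.
        self.events.append(event)
        return len(self.events) - 1  # offset assigned to the new event


class Consumer:
    """Each subscriber keeps its own offset, so readers never interfere."""

    def __init__(self, log: EventLog) -> None:
        self.log = log
        self.offset = 0  # position of the next event this consumer will read

    def poll(self) -> List[Any]:
        # Return every event published since this consumer's last poll.
        end = len(self.log.events)
        new_events = self.log.events[self.offset:end]
        self.offset = end
        return new_events
```

Because each `Consumer` holds its own offset, an analytics consumer and a fraud-detection consumer can read the same log at different paces, and a consumer that starts later still begins at offset 0 and sees the full history.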

A data streaming platform enables organizations to stream data continuously in real time, connect systems across environments, process events as they happen, and govern data for reliability and compliance. It provides the foundation to power analytics, AI, and event-driven applications, all while ensuring trusted, scalable, and secure data flows across the business.

Organizations of all sizes—from startups to global enterprises—use Confluent to modernize their data foundations. It’s especially valuable for teams looking to build event-driven applications, leverage analytics and AI on live data, or replace brittle batch-based ETL pipelines with a more reliable, real-time approach.

Any app that relies on fast, trustworthy data can benefit. Examples include streaming analytics dashboards, fraud detection engines, real-time personalization, cybersecurity systems, and event-driven AI such as retrieval-augmented generation (RAG) or multi-agent systems. If your application needs to react instantly and reliably, Confluent provides the foundation.

Confluent enables a wide range of industry-agnostic data streaming use cases, including:

- Real-time analytics and dashboards to make faster, data-driven decisions.

- Event-driven applications that react instantly to business events.

- AI and machine learning powered by fresh, contextual, and trustworthy data.

- Data integration across hybrid and multi-cloud environments for seamless operations.

- Data governance and reliability to ensure compliance and accuracy across systems.

- And many more.

Industry-specific use cases that Confluent enables also include:

- Financial services: Fraud detection, risk analysis, and real-time payments.

- Retail & ecommerce: Inventory visibility, demand forecasting, and personalized recommendations.

- Manufacturing & automotive: Predictive maintenance, intelligent production, and supply chain optimization.

- Telecommunications: Network monitoring, IoT data integration, and 5G service optimization.

- Healthcare: Patient monitoring, real-time clinical analytics, and data integration across systems.

- Software & technology: Event-driven applications, SaaS integrations, and operational telemetry.

- And many more.

Confluent supports common formats like Avro, Protobuf, and JSON Schema through its Schema Registry, which is part of Stream Quality in Stream Governance (Confluent’s fully managed governance suite). With Confluent’s portfolio of 120+ pre-built connectors you can quickly connect to databases, data warehouses, SaaS apps, and cloud services—making it easy to integrate data across your entire ecosystem.

Yes. Confluent is fully compatible with Apache Kafka®. With features like Cluster Linking, you can mirror topics in real time, replicate data and metadata, or migrate existing workloads to Confluent—all without downtime.

Absolutely. Confluent Cloud’s Kora engine delivers massive scalability and reliability, backed by a 99.99% uptime SLA for production workloads. It can handle GBps+ workloads while scaling 10x faster than traditional Kafka. Confluent Platform also runs on a cloud-native distribution of Kafka and provides features for easier self-managed scaling, including Confluent for Kubernetes and Ansible playbooks. Security is built in, with enterprise-grade features and compliance certifications including SOC 2, ISO 27001, and PCI DSS.

Sign up for Confluent Cloud and you’ll receive $400 in credits to start building right away. We’ll walk you through how to launch a cluster, connect a data source, and start using Schema Registry in minutes. Our Demo Center and Confluent Developer site also provide guided resources to help you build quickly and confidently.

It’s a common mix-up! Confluent is all about real-time data streaming, helping businesses move, connect, process, and govern data at scale. Confluence, on the other hand, is a web-based workspace for team collaboration and documentation. So while they both help teams work smarter, only Confluent powers the data that drives modern applications, AI, and analytics.