Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

KSQL February Release: Streaming SQL for Apache Kafka

We are pleased to announce the release of KSQL v0.5, aka the February 2018 release of KSQL. This release is focused on bug fixes as well as performance and stability […]

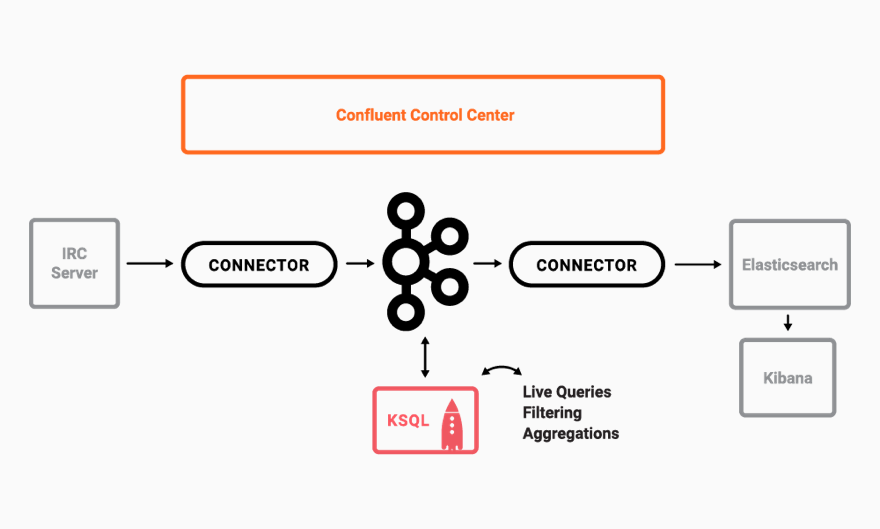

Secure Stream Processing with Apache Kafka, Confluent Platform and KSQL

In this blog post, we first look at stream processing examples using KSQL that show how companies are using Apache Kafka® to grow their business and to analyze data in […]

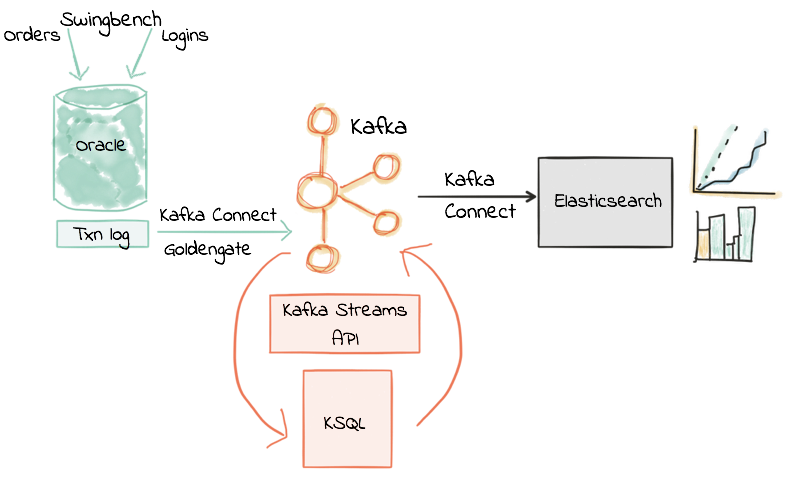

KSQL in Action: Real-Time Streaming ETL from Oracle Transactional Data

In this post I’m going to show what streaming ETL looks like in practice. We’re replacing batch extracts with event streams, and batch transformation with in-flight transformation. But first, a […]

KSQL January Release: Streaming SQL for Apache Kafka

We are pleased to announce the release of KSQL 0.4, aka the January 2018 release of KSQL. As usual, this release is a mix of new features as well as […]

Should You Put Several Event Types in the Same Kafka Topic?

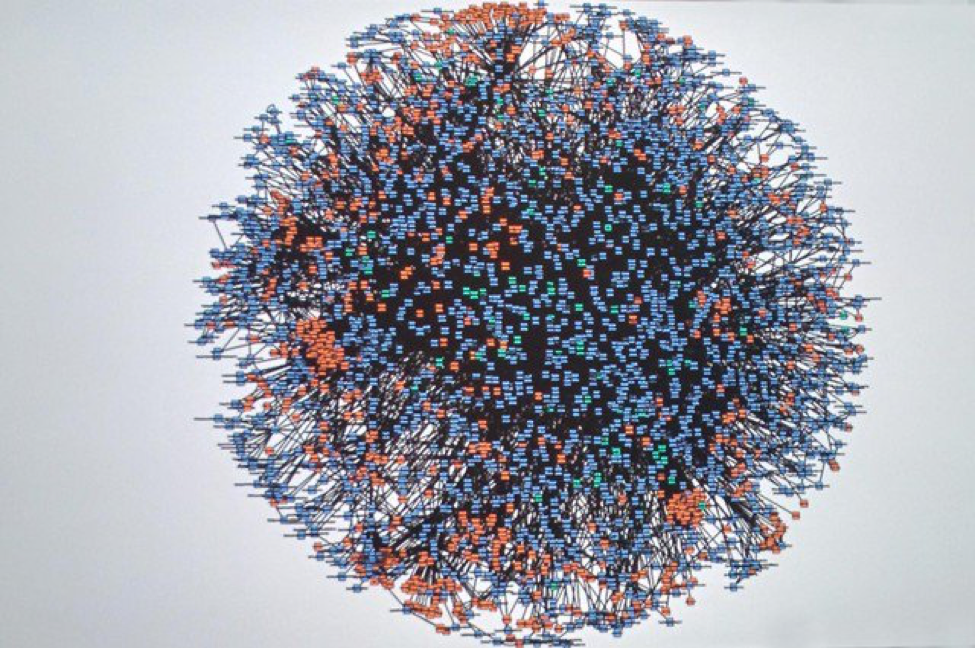

If you adopt a streaming platform such as Apache Kafka, one of the most important questions to answer is: what topics are you going to use? In particular, if you […]

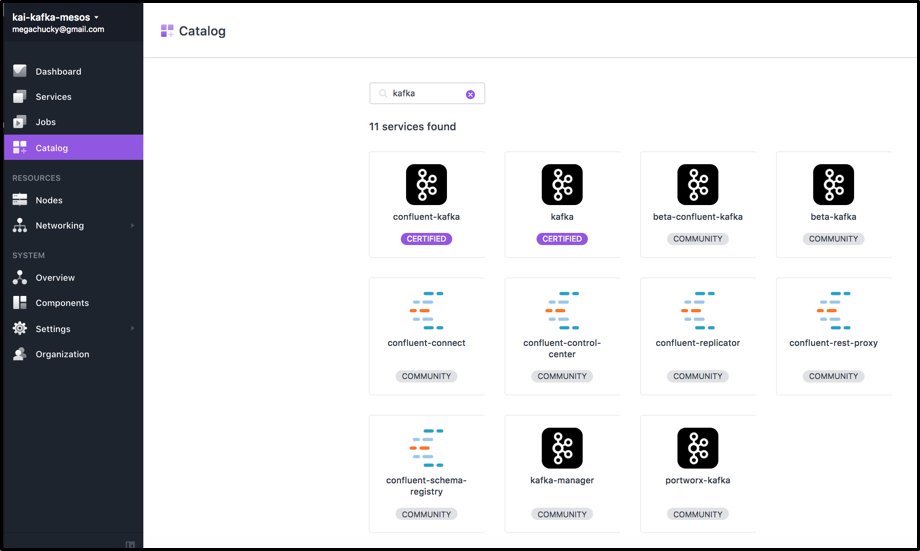

Apache Mesos, Apache Kafka and Kafka Streams for Highly Scalable Microservices

Apache Kafka and Apache Mesos are very well-known and successful Apache projects. A lot has happened in these projects since Confluent’s last blog post on the topic in July 2015. […]

KSQL December Release: Streaming SQL for Apache Kafka

We are very excited to announce the December release of KSQL, the streaming SQL engine for Apache Kafka®! As we announced in the November release blog, we are releasing KSQL […]

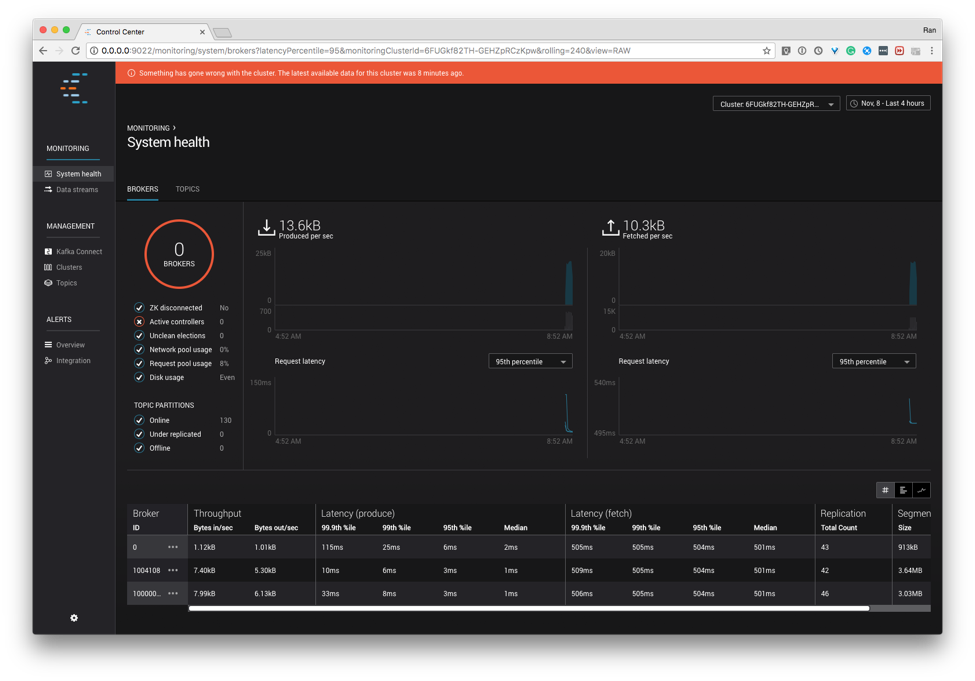

The Blog Post on Monitoring an Apache Kafka Deployment to End Most Blog Posts

Confluent Platform is the central nervous system for a business, uniting your organization around a Kafka-based single source of truth. Apache Kafka® has been in production at thousands of companies […]

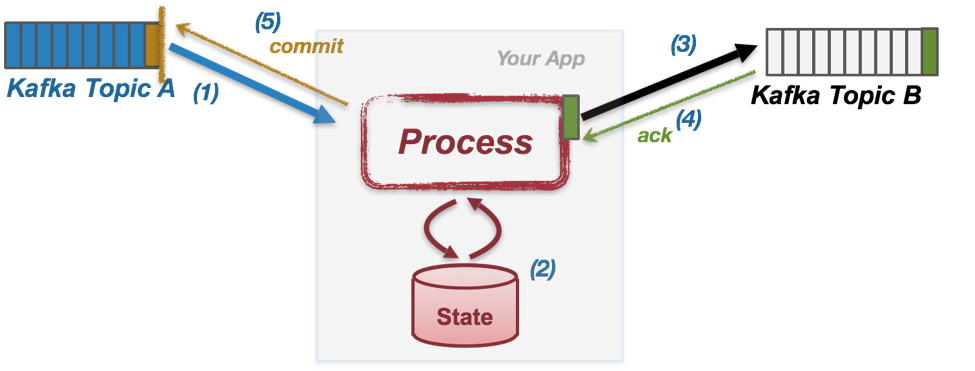

Enabling Exactly-Once in Kafka Streams

This blog post is the third and last in a series about the exactly-once semantics for Apache Kafka®. See Exactly-once Semantics are Possible: Here’s How Kafka Does it for the […]

Monitoring Apache Kafka with Confluent Control Center Video Tutorials

Mission critical applications built on Kafka need a robust and Kafka-specific monitoring solution that is performant, scalable, durable, highly available, and secure. Confluent Control Center helps monitor your Kafka deployments […]

Handling GDPR with Apache Kafka: How does a log forget?

If you follow the press around Apache Kafka you’ll probably know it’s pretty good at tracking and retaining messages, but sometimes removing messages is important too. GDPR is a good […]

Toward a Functional Programming Analogy for Microservices

Microservices are all the rage these days. Passionate, thoughtful advocates and detractors present compelling arguments for and against the architectural style. Usually, these arguments boil down to whether organizations should adopt, refrain from, or abandon microservices...

Introducing Confluent Platform 4.0

I am very excited to announce the general availability of Confluent Platform 4.0, the enterprise distribution of Apache Kafka 1.0. This release includes a number of significant improvements, including enhancements […]

Confluent Cloud: Enterprise-Ready, Hosted Apache Kafka is Here!

Today, 40,000 people in Las Vegas are thinking about the Cloud—not because the weather is dry in Nevada, but because AWS re:Invent is in full force. Today is a perfect […]