Streaming Data Pipelines

Streaming data pipelines enable continuous data ingestion, processing, and movement from source(s) to destination as soon as the data is generated. Also known as streaming ETL or real-time dataflow, this technology is used across countless industries to turn databases into live feeds for streaming ingest and processing, accelerating data delivery, real-time insights, and analytics.

Created by the founders of Apache Kafka, Confluent's data streaming platform automates real-time data pipelines with 120+ pre-built integrations, built-in security and governance, and support for countless use cases.

What Are Streaming Data Pipelines?

Many everyday applications depend on streaming data pipelines, for example:

- Mobile banking apps

- GPS apps that recommend driving routes based on live traffic information

- Smartwatches that track steps and heart rate

- Personalized real-time recommendations in shopping or entertainment apps

- Sensors in a factory where temperatures or other conditions need to be monitored to prevent safety incidents

Real time. At scale.

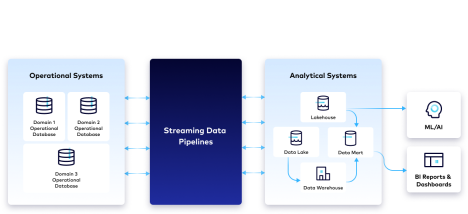

Streaming data pipelines support a variety of data formats from heterogeneous data sources and operate at a scale of millions of events per second. They break down data silos by streaming data across environments, unlocking real-time data flows for an organization, and are often used to bridge two types of systems:

- Operational systems, where data in different formats is stored in operational databases that may be on-premises or in the cloud.

- Analytical systems, where data populates a data warehouse or data lake and is used for real-time analytics, reporting, BI dashboards, and other data science initiatives.

Why Streaming Data Pipelines?

Real-time data flows

By moving and transforming data from source to target systems as it is generated, streaming data pipelines provide the latest, most accurate data in a readily usable format. This increases developer agility and uncovers insights that help organizations make better-informed, more proactive decisions. The ability to respond intelligently to real-time events lowers risk, generates more revenue or cost savings, and delivers more personalized customer experiences.

Real-time stream processing

Stream processing has significant advantages over batch processing, especially in terms of data latency. Batch-based ETL pipelines ingest and transform data in periodic, often nightly, batches. By contrast, stream processing continuously transforms data en route to target systems as it is generated. This minimizes latency and allows streaming data to power use cases like real-time fraud detection. For more information on different kinds of data processing, visit Batch vs. Stream Processing.
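To make the latency difference concrete, here is a minimal Python sketch, not a production fraud detector. The `(card_id, amount)` event shape, the `SPEND_LIMIT` threshold, and both function names are hypothetical. The batch version can only report once the whole batch is in; the streaming version keeps running state per card and raises an alert at the very event that crosses the limit.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical event shape: (card_id, amount). The threshold is an assumption.
SPEND_LIMIT = 1000.0


def batch_flags(events: list[tuple[str, float]]) -> set[str]:
    """Batch style: wait for the complete batch, then compute totals once."""
    totals: dict[str, float] = defaultdict(float)
    for card, amount in events:
        totals[card] += amount
    return {card for card, total in totals.items() if total > SPEND_LIMIT}


def stream_flags(events: Iterable[tuple[str, float]]) -> Iterator[tuple[int, str]]:
    """Streaming style: update state per event and alert immediately."""
    totals: dict[str, float] = defaultdict(float)
    alerted: set[str] = set()
    for position, (card, amount) in enumerate(events):
        totals[card] += amount
        if totals[card] > SPEND_LIMIT and card not in alerted:
            alerted.add(card)
            yield position, card  # emitted while later events may still be arriving


events = [("card-a", 400.0), ("card-b", 50.0), ("card-a", 700.0), ("card-b", 20.0)]
print(batch_flags(events))         # {'card-a'}, but only after the batch completes
print(list(stream_flags(events)))  # [(2, 'card-a')], at the third event
```

Both functions reach the same conclusion; the difference is *when*. Because `stream_flags` is a generator, a caller can act on each alert as soon as it is yielded.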

Agile pipeline development

By eliminating hand coding of individual data pipelines, organizations spend less time on break-fix work and more time on innovative uses of data streams and on adapting data to changing business needs. With less technical debt and upkeep, teams also end up with better-governed use of data across the enterprise.

Scalable, fault-tolerant cloud architecture

Auto-scaling and elasticity in the cloud allow consumers of streaming data to focus on using the data, not on managing infrastructure. Because events flow continuously, built-in fault tolerance is essential: it protects organizations from data loss, missed opportunities, and delayed response times.

How Streaming Data Pipelines Work

Streaming data pipelines continuously execute a series of steps, including ingesting raw data as it's generated, cleaning it, and customizing the results to suit the needs of the organization.

Ingestion

Real-time data is ingested from on-premises and cloud-based data sources (e.g., applications, databases, IoT sensors) in different formats.

Stream processing

Data manipulation, cleansing, transformation, and preparation remove unusable data from the pipeline and ready the data for monitoring, analysis, and other downstream use.
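As an illustration of the cleansing and transformation step, the sketch below processes hypothetical factory-sensor readings one record at a time: it drops records that cannot be parsed and standardizes temperatures to Celsius. The field names (`sensor`, `temp`, `unit`) and the functions `parse` and `clean` are assumptions for this example, not part of any product API.

```python
from typing import Iterable, Iterator, Optional

# Hypothetical raw records from heterogeneous sources; field names are assumptions.
RawRecord = dict


def parse(record: RawRecord) -> Optional[dict]:
    """Normalize one raw record, or return None if it is unusable."""
    try:
        temp = float(record["temp"])
    except (KeyError, TypeError, ValueError):
        return None  # missing or non-numeric reading: drop it
    unit = str(record.get("unit", "C")).upper()
    if unit == "F":
        temp = (temp - 32) * 5 / 9  # standardize on Celsius
    elif unit != "C":
        return None  # unknown unit: drop it
    return {"sensor": str(record.get("sensor", "unknown")), "temp_c": round(temp, 2)}


def clean(stream: Iterable[RawRecord]) -> Iterator[dict]:
    """Lazily cleanse and transform records one at a time as they arrive."""
    for record in stream:
        parsed = parse(record)
        if parsed is not None:
            yield parsed


raw = [
    {"sensor": "s1", "temp": "21.5"},
    {"sensor": "s2", "temp": "not-a-number"},
    {"sensor": "s3", "temp": 212, "unit": "f"},
]
print(list(clean(raw)))
```

Using a generator means no record waits for the rest of the input, which mirrors how a streaming processor handles an unbounded stream.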

Schema standardization

To prepare and analyze streaming data, it must be consistently structured according to a standard schema, which defines how the data is organized (field names, types, and which fields are required). Adhering to a standard schema keeps data compatible as pipelines scale while reducing operational complexity.
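Production pipelines typically express schemas in formats such as Avro, Protobuf, or JSON Schema. The plain-Python checker below is only a stand-in to show the idea of validating each record against a standard schema; `ORDER_SCHEMA` and `conforms` are hypothetical names.

```python
from typing import Any

# A hypothetical standard schema: field name -> (expected type, required?).
ORDER_SCHEMA: dict[str, tuple[type, bool]] = {
    "order_id": (str, True),
    "amount": (float, True),
    "currency": (str, False),
}


def conforms(record: dict[str, Any], schema: dict[str, tuple[type, bool]]) -> list[str]:
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for field, (expected, required) in schema.items():
        if field not in record:
            if required:
                problems.append(f"missing required field '{field}'")
        elif not isinstance(record[field], expected):
            problems.append(f"'{field}' should be {expected.__name__}")
    for field in record.keys() - schema.keys():
        problems.append(f"unexpected field '{field}'")
    return problems


print(conforms({"order_id": "o-1", "amount": 9.99}, ORDER_SCHEMA))  # []
print(conforms({"order_id": 42, "total": 9.99}, ORDER_SCHEMA))
```

Records that fail the check could be routed to a separate error stream instead of reaching downstream consumers.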

Stream monitoring

To better understand the systems generating continuous events, most organizations apply observability and monitoring to event streams. The ability to see what is happening to streams—how many messages are produced or consumed over time, for example—helps ensure that they are working properly and delivering data in real time, without data loss.
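One widely used stream health metric is consumer lag: the gap between the last offset written to a partition and the offset a consumer group has committed. The sketch below computes lag from a hypothetical snapshot and raises alerts above a threshold; `PartitionStats`, `consumer_lag`, `check`, and the sample numbers are all assumptions for illustration, not a monitoring API.

```python
from dataclasses import dataclass


@dataclass
class PartitionStats:
    """Hypothetical snapshot of one partition's positions."""
    end_offset: int        # next offset the producer will write
    committed_offset: int  # next offset the consumer group will read


def consumer_lag(stats: dict[int, PartitionStats]) -> dict[int, int]:
    """Messages produced but not yet consumed, per partition."""
    return {p: s.end_offset - s.committed_offset for p, s in stats.items()}


def check(stats: dict[int, PartitionStats], max_lag: int) -> list[str]:
    """Return alert messages for partitions whose lag exceeds max_lag."""
    return [
        f"partition {p} lag {lag} > {max_lag}"
        for p, lag in sorted(consumer_lag(stats).items())
        if lag > max_lag
    ]


snapshot = {0: PartitionStats(1_000, 998), 1: PartitionStats(5_400, 2_100)}
print(consumer_lag(snapshot))        # {0: 2, 1: 3300}
print(check(snapshot, max_lag=500))  # ['partition 1 lag 3300 > 500']
```

Lag that grows steadily across snapshots suggests consumers are falling behind producers, so data is no longer arriving in real time.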

Message broker

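A message broker handles the serving and delivery of data throughout the streaming data pipeline. When configured for it (for example, Kafka with idempotent producers and transactions), it can provide exactly-once processing semantics, helping maintain the integrity and lineage of the data.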
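Exactly-once behavior depends on how the broker and its clients are configured; it is not automatic. One building block, used by Kafka's idempotent producer, is for the broker to recognize retried writes by a producer ID and sequence number. The toy class below is a heavily simplified, hypothetical illustration of that idea only: unlike a real broker, it tracks a single log and ignores sequence gaps and ordering errors.

```python
from dataclasses import dataclass, field


@dataclass
class DedupingLog:
    """Toy broker log that drops retried duplicates from the same producer."""
    records: list[str] = field(default_factory=list)
    last_seq: dict[str, int] = field(default_factory=dict)

    def append(self, producer_id: str, seq: int, value: str) -> bool:
        """Append unless this (producer, sequence) pair was already written."""
        if seq <= self.last_seq.get(producer_id, -1):
            return False  # a retry of a write that already succeeded
        self.last_seq[producer_id] = seq
        self.records.append(value)
        return True


log = DedupingLog()
log.append("p1", 0, "debit $10")
log.append("p1", 1, "debit $25")
log.append("p1", 1, "debit $25")  # network retry: same sequence number
print(log.records)  # ['debit $10', 'debit $25']
```

Without the sequence check, the retried write would appear twice and a downstream balance calculation would double-count the debit.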

Provisioning

Data is made readily available for a variety of target destinations and downstream consumers, who often require simultaneous access to timely and historical data. To meet this requirement, many architectures will include a combination of data storage in data lakes, data warehouses, and the message broker itself.
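Serving both timely and historical data from the broker works because the log is retained and each consumer tracks its own read position. In the minimal sketch below, one hypothetical consumer replays from the beginning (as a data-lake backfill might) while another starts at the end (as a live dashboard might). `Topic`, `Consumer`, and `poll` are illustrative names, not a client library API.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Toy append-only log; retained records can be re-read by any consumer."""
    records: list[str] = field(default_factory=list)

    def append(self, value: str) -> int:
        self.records.append(value)
        return len(self.records) - 1  # offset of the new record


@dataclass
class Consumer:
    """Each consumer tracks its own offset, so readers never interfere."""
    topic: Topic
    offset: int

    def poll(self) -> list[str]:
        batch = self.topic.records[self.offset:]
        self.offset = len(self.topic.records)
        return batch


orders = Topic()
for value in ["o1", "o2", "o3"]:
    orders.append(value)

history = Consumer(orders, offset=0)                 # replays retained history
live = Consumer(orders, offset=len(orders.records))  # only new events
orders.append("o4")
print(history.poll())  # ['o1', 'o2', 'o3', 'o4']
print(live.poll())     # ['o4']
```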

5 Principles for Building Modern Streaming Data Pipelines

1. Streaming

Use data streams and a streaming platform to maintain real-time, high-fidelity, event-level repositories of reusable data within the organization instead of pushing periodic, low-fidelity snapshots to external repositories. Use schemas as the data contract between producers and consumers, ensuring data compatibility.
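Treating schemas as a data contract usually means enforcing compatibility rules as schemas evolve. The sketch below approximates one common rule, backward compatibility (a consumer using the new schema can still read data written with the old one), in the spirit of Avro schema evolution: fields added in the new version need defaults, and existing fields keep their type. `Field` and `backward_compatible` are hypothetical, and the rule is stricter than real implementations (it ignores permitted type promotions, for instance).

```python
from typing import NamedTuple


class Field(NamedTuple):
    type_name: str
    has_default: bool = False


Schema = dict[str, Field]


def backward_compatible(old: Schema, new: Schema) -> list[str]:
    """Can a consumer on `new` still read records written with `old`?"""
    issues = []
    for name, spec in new.items():
        if name in old:
            if old[name].type_name != spec.type_name:
                issues.append(f"'{name}' changed type")
        elif not spec.has_default:
            issues.append(f"new field '{name}' needs a default")
    return issues  # fields removed from `new` are simply ignored when reading


v1: Schema = {"id": Field("string"), "amount": Field("double")}
v2: Schema = {**v1, "currency": Field("string", has_default=True)}
v3: Schema = {**v1, "region": Field("string")}
print(backward_compatible(v1, v2))  # []
print(backward_compatible(v1, v3))  # ["new field 'region' needs a default"]
```

A check like this can run in CI before a producer ships a new schema version, so incompatible changes are caught before they reach consumers.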

2. Decentralized

Support domain-oriented, decentralized data ownership that allows the teams closest to the data to create and share reusable data streams. Give teams self-service access so they can easily publish and subscribe to data streams across the entire business.

3. Declarative

Use a declarative language such as SQL to specify the logical flow of data (where data comes from and where it’s going) without low-level operational details, and let the infrastructure automatically handle changes in data scale.
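The sketch below shows the declarative style using Python's built-in `sqlite3` module. Note the assumption: SQLite is a batch engine and is used here only to show how a query states *what* to compute (source, filter, grouping) rather than *how*; a streaming SQL engine such as Flink SQL would evaluate a comparable query continuously as new rows arrive. The `orders` table and its rows are hypothetical.

```python
import sqlite3

# Hypothetical source data, loaded into an in-memory database for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("o1", "EU", 20.0), ("o2", "US", 5.0), ("o3", "EU", 30.0)],
)

# The query declares the logical flow only: no loops, buffers, or scaling logic.
QUERY = """
    SELECT region, SUM(amount) AS revenue
    FROM orders
    WHERE amount >= 10
    GROUP BY region
    ORDER BY region
"""
print(conn.execute(QUERY).fetchall())  # [('EU', 50.0)]
```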

4. Developer-oriented

Bring agile development and CI/CD practices to pipelines, allowing teams to build modular, reusable data flows that can be developed, tested, deployed into different environments, and versioned independently. Enable team members with different skills to collaborate on pipelines using tools that support both visual IDEs (integrated development environments) and code editors. This is distinct from traditional ETL tools, which tend to be built for non-developers.

5. Governed

Balance centralized standards for continuous data observability, security, policy management, and compliance with visibility, transparency, and compatibility of data through intuitive search, discovery, and lineage, so developers and engineers can innovate faster.

Examples of Use Cases

Migration to cloud

To migrate or modernize application development operations and take advantage of cloud-native tools, streaming data pipelines help connect and sync on-premises and multi-cloud databases in real time. Events can be processed and enriched with other streams to power new applications and reduce maintenance and TCO of legacy databases. Watch a demo where an Oracle CDC connector streams changes from a legacy database to a cloud-native database like MongoDB, with the use of stream processing for real-time fraud detection.
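At its core, change data capture (CDC) replication replays a source database's inserts, updates, and deletes against a target in order. The sketch below applies hypothetical change events to an in-memory target table. The event shape, with `op` codes `"c"`, `"u"`, and `"d"`, is loosely modeled on common CDC output formats and is an assumption, as is the `apply_change` function.

```python
from typing import Any, Optional

# Hypothetical change-event shape: op is "c" (create), "u" (update), or "d" (delete).
ChangeEvent = dict[str, Any]


def apply_change(replica: dict[str, dict], event: ChangeEvent) -> None:
    """Apply one change event to a keyed target table, in source order."""
    key = event["key"]
    after: Optional[dict] = event.get("after")
    if event["op"] in ("c", "u") and after is not None:
        replica[key] = after  # upsert keeps the target in sync
    elif event["op"] == "d":
        replica.pop(key, None)  # tolerate deletes for rows never seen


changes = [
    {"op": "c", "key": "42", "after": {"name": "Ada", "tier": "silver"}},
    {"op": "u", "key": "42", "after": {"name": "Ada", "tier": "gold"}},
    {"op": "c", "key": "43", "after": {"name": "Lin", "tier": "silver"}},
    {"op": "d", "key": "43"},
]
target: dict[str, dict] = {}
for change in changes:
    apply_change(target, change)
print(target)  # {'42': {'name': 'Ada', 'tier': 'gold'}}
```

Because the same events could also feed other consumers, such as a fraud-detection job, one change stream can keep a cloud database in sync and power new applications at the same time.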

Real-time analytics

Streaming data pipelines help businesses derive valuable insights by streaming data from on-premises systems to cloud data warehouses for real-time analytics, ML modeling, reporting, and creating BI dashboards. Moving workloads to the cloud brings flexibility, agility, and cost-efficiency of computing and storage. Here’s a demo featuring the use of PostgreSQL CDC to stream customer data, stream processing to transform data in real time, and a Snowflake connector for real-time data warehousing and subsequent analysis and reporting.
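Real-time analytics often relies on windowed aggregation. The minimal sketch below computes tumbling (fixed, non-overlapping) 60-second windows of spend per customer. The event shape `(timestamp_seconds, customer, amount)`, the window size, and `tumbling_sums` are hypothetical. For simplicity it processes a finite list; a real stream processor would emit each window's result incrementally and handle late-arriving events.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # hypothetical window size


def tumbling_sums(events: list[tuple[int, str, float]]) -> dict[tuple[int, str], float]:
    """Sum amounts per (window start, customer) over fixed, non-overlapping windows."""
    sums: dict[tuple[int, str], float] = defaultdict(float)
    for ts, customer, amount in events:
        window_start = ts - ts % WINDOW_SECONDS  # align to the window boundary
        sums[(window_start, customer)] += amount
    return dict(sums)


events = [(3, "c1", 10.0), (45, "c1", 5.0), (61, "c1", 7.5), (62, "c2", 1.0)]
print(tumbling_sums(events))
# {(0, 'c1'): 15.0, (60, 'c1'): 7.5, (60, 'c2'): 1.0}
```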

SIEM optimization

Streaming data pipelines deliver the contextually rich data needed for greater situational awareness, help automate and orchestrate threat detection, reduce false positives, and enable a proactive posture toward threats and cyberattacks. Visit this page to learn more about how consolidating, categorizing, and enriching data (e.g., logs, network data, telemetry and sensor data, real-time events) can equip teams with the right data at the right time for real-time monitoring and security forensics.
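Enrichment is what turns raw security events into contextually rich ones. The sketch below joins each hypothetical log event with asset context looked up by destination IP, then keeps only events that touch high-criticality assets, one simple way to cut noise before alerting. The `ASSETS` table, field names, and `enrich` function are all assumptions for illustration.

```python
from typing import Iterable, Iterator

# Hypothetical reference table keyed by IP, e.g. kept current from an asset inventory.
ASSETS = {
    "10.0.0.5": {"host": "payroll-db", "criticality": "high"},
    "10.0.0.9": {"host": "test-vm", "criticality": "low"},
}


def enrich(logs: Iterable[dict], assets: dict[str, dict]) -> Iterator[dict]:
    """Join each log event with asset context so alerts can be prioritized."""
    unknown = {"host": "unknown", "criticality": "unknown"}
    for event in logs:
        yield {**event, **assets.get(event["dst_ip"], unknown)}


logs = [
    {"dst_ip": "10.0.0.5", "action": "failed_login"},
    {"dst_ip": "10.0.0.9", "action": "failed_login"},
]
high = [e for e in enrich(logs, ASSETS) if e["criticality"] == "high"]
print(high)
```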

Mainframe data access

Streaming data pipelines can unlock access to critical systems-of-record data, providing real-time access to mainframe data combined with other data to reduce silos and power new applications, increase data portability across different systems, and reduce MIPS and networking costs. Learn more here.

Why Confluent

Piping data directly from source to target systems creates point-to-point connections, which are unscalable and difficult to secure and maintain in a cost-effective way. Confluent’s fully managed Apache Kafka is a data streaming platform that serves as the backbone for data integration and building extensible streaming data pipelines. Using Confluent, you can decouple data producers and consumers, and abstract away the process of stitching together heterogeneous systems.
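The scalability problem with point-to-point connections is simple arithmetic. Assuming, in the worst case, that each of N source systems must feed each of M target systems, direct piping needs one connection per pair, while routing through a central streaming platform needs one connection per system:

```latex
\begin{aligned}
C_{\text{point-to-point}} &= N \times M, \qquad C_{\text{platform}} = N + M \\
N = 5,\; M = 4:\quad C_{\text{point-to-point}} &= 20, \qquad C_{\text{platform}} = 9
\end{aligned}
```

The gap widens as systems are added: each new target costs N new connections point-to-point, but only one through the platform.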

Confluent offers 120+ pre-built source and sink connectors to help you integrate and stream data between a variety of systems across hybrid and multicloud environments. Save anywhere from six months to years of engineering time and reduce operational overhead by deploying fully managed connectors that give your teams self-serve data wherever and whenever they need it. Additionally, you can process and transform data streams in real time with Apache Flink, and ensure data quality and compliance with Stream Governance. Confluent’s Stream Designer is a no-code visual UI that helps you build, test, and deploy streaming data pipelines in minutes.