Data Streaming Across Any Hybrid or Multicloud Architecture

Confluent’s hybrid and multicloud data streaming solutions power real-time interoperability between any systems, applications, and datastores on any number of on-premises and cloud environments. Innovate faster, reduce risk, and maximize ROI with cloud-native ease and simplicity.

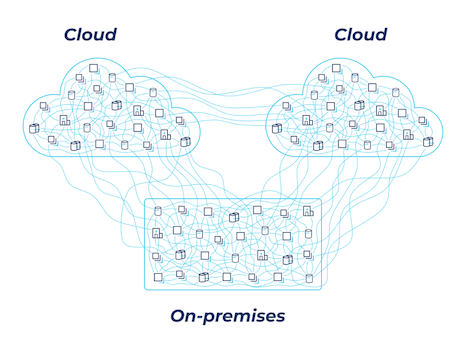

Complex Data Architectures Are Slowing You Down

Lots of point-to-point interconnections create a giant mess

Moving data with periodic batch jobs, ETL tools, existing messaging systems, APIs, and custom DIY engineering work results in a “spaghetti mess” of point-to-point interconnections, each of which has to be individually networked, secured, monitored, and maintained. This makes your data architecture slow, expensive, brittle, and insecure.

The adoption of new cloud services compounds these problems: more individual connections are added, complex new cloud networking and security challenges are introduced, and additional compliance and data sovereignty laws must be addressed across different global regions.

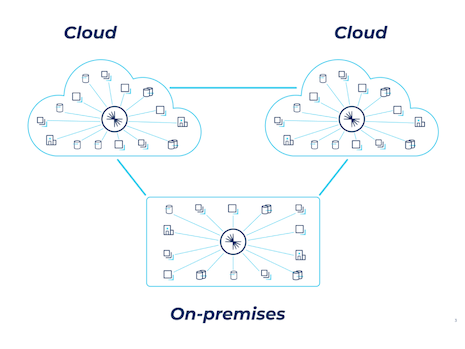

Simplify Your Architecture and Accelerate Your Business

Eliminate the need for point-to-point interconnections

Replace brittle point-to-point interconnections with a real-time, global data plane that connects all of the systems, applications, datastores, and environments that make up your enterprise. Set data in motion everywhere, regardless of whether systems are running on-prem, in the cloud, or a combination of both.

Address networking and security challenges once instead of every time a new connection is made. Free up valuable engineering resources to focus on building things that move the needle for your business instead of dealing with complex data infrastructure projects.
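
To make the difference concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the worst case, in which every system needs a direct link to every other system, and compares that with each system connecting once to a shared data plane. The function names are illustrative only and are not part of any Confluent product or API.

```python
def point_to_point_links(n: int) -> int:
    """Assumed worst case: every system links directly to every other system."""
    return n * (n - 1) // 2


def data_plane_links(n: int) -> int:
    """Each system connects once to a shared data plane."""
    return n


for n in (5, 10, 20):
    print(
        f"{n} systems: {point_to_point_links(n)} point-to-point links "
        f"vs {data_plane_links(n)} data plane connections"
    )
```

Under that assumption, 20 systems would need 190 individually secured and monitored links, compared with 20 connections to a central data plane. Real architectures are rarely fully meshed, so treat these numbers as an upper bound rather than a measurement.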

Mobilize Your Data Everywhere in Real Time and Increase Innovation

Confluent’s solution for hybrid and multicloud architectures helps organizations accelerate development velocity, realize the benefits of the cloud faster, and deliver a new class of real-time applications that power rich frontend customer experiences and efficient backend operations. All of an organization’s datastores, applications, and systems can now operate on a single, consistent real-time view of its data, eliminating the need for periodic batch jobs that create inconsistent copies of data in different places at different times.

Fast

Build innovative real-time applications with global consistency

Cost-Effective

Reduce hidden cloud costs and free up valuable engineering resources

Resilient

Maximize uptime by eliminating single points of failure

Secure

Safely and securely share data across the global organization

Wherever You Are on Your Cloud Journey, We Have a Solution for You

Migrate

Incrementally migrate from legacy on-premises infrastructure to and across clouds

Augment

Easily integrate existing systems and modernize at your own pace

Innovate

Build state-of-the-art cloud-native applications and accelerate time to market

Unlock New Hybrid and Multicloud Use Cases

Data warehouse streaming pipelines

Use Confluent as a persistent, real-time, bidirectional bridge between existing and new data warehouse systems to take advantage of modern cloud data warehouse services and deliver connected, real-time analytics.

App modernization

Confluent modernizes any system by moving it to an event-driven architecture. The moment an event happens, services update data in real time, enabling seamless integration, data consistency, and scalable, responsive microservices orchestration.

Disaster recovery

Deploying a disaster recovery strategy with Confluent can increase the availability and reliability of your mission-critical applications by minimizing data loss and downtime during unexpected events such as public cloud provider outages.

Mainframe integration

Leverage Confluent’s data-in-motion platform to unlock your mainframe data for real-time insights without incurring the complexity and expense that come with sending ongoing queries to mainframe databases.

Additional Resources

Learn more about how Confluent’s complete, fully managed platform is changing the way businesses achieve real-time data management, insights, and analytics everywhere.