Application

Join us for the Inaugural Stream Data Hackathon

I’m happy to announce that Confluent will be hosting the first Stream Data Hackathon this April 25th in San Francisco! Apache Kafka has recently introduced two new major features: Kafka […]

Announcing Confluent University: World-Class Apache Kafka Training

I’m pleased to announce the launch of Confluent University: world-class Apache Kafka training now available both onsite and at public venues. Although we’ve been offering onsite training to our customers […]

Introducing the Kafka Consumer: Getting Started with the New Apache Kafka 0.9 Consumer Client

When Apache Kafka® was originally created, it shipped with a Scala producer and consumer client. Over time we came to realize many of the limitations of these APIs. For example, […]

Yes, Virginia, You Really Do Need a Schema Registry

I ran into the schema-management problem while working with my second Hadoop customer. Until then, there was one true database and the database was responsible for managing schemas and pretty […]

Apache Kafka, Samza, and the Unix Philosophy of Distributed Data

One of the things I realised while doing research for my book is that contemporary software engineering still has a lot to learn from the 1970s. As we’re in such […]

Getting started with Kafka in node.js with the Confluent REST Proxy

Previously, I posted about the Kafka REST Proxy from Confluent, which provides easy access to a Kafka cluster from any language. That post focused on the motivation, low-level examples, and […]

The Value of Apache Kafka in Big Data Ecosystem

This is a repost of a recent article that I wrote for ODBMS. In the last few years, there has been significant growth in the adoption of Apache Kafka. Current […]

Compatibility Testing For Apache Kafka

Testing is one of the hardest parts of building reliable distributed systems. Kafka has long had a set of system tests that cover distributed operation, but this is an area […]

Turning the database inside-out with Apache Samza

This is an edited and expanded transcript of a talk I gave at Strange Loop 2014. The video recording (embedded below) has been watched over 8,000 times. For those of […]

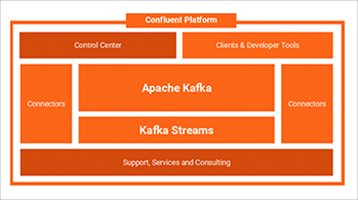

Announcing the Confluent Platform 1.0

We are very excited to announce general availability of Confluent Platform 1.0, a stream data platform powered by Apache Kafka, that enables high-throughput, scalable, reliable and low latency stream data […]

Announcing Confluent, a Company for Apache Kafka® and Realtime Data

Today I’m excited to announce that Neha Narkhede, Jun Rao, and I are forming a start-up called Confluent around realtime data and the open source project Apache Kafka® that we […]