
Real-time data demands real-time processing

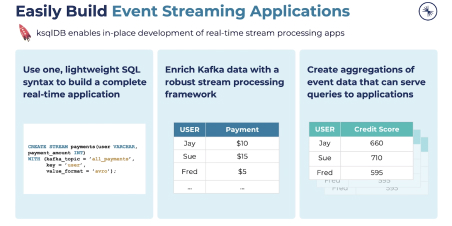

Now that your data is in motion, it’s time to make sense of it. Stream processing enables you to derive instant insights from your data streams, but setting up the infrastructure to support it can be complex. That’s why Confluent developed ksqlDB, the database purpose-built for stream processing applications.

Announcing Confluent Cloud for Apache Flink®

Easily leverage stream processing with the industry’s only cloud-native, serverless Flink service

Process your real-time data streams instantaneously with just a few SQL statements

Create real-time value by processing data in motion rather than data at rest

Make your data immediately actionable by continuously processing the streams of data generated throughout your business. ksqlDB’s intuitive syntax lets you quickly access and augment data in Kafka, enabling development teams to create innovative real-time customer experiences and meet data-driven operational needs.

Simplify your stream processing architecture

ksqlDB offers a single solution for collecting streams of data, enriching them, and serving queries on new derived streams and tables. That means less infrastructure to deploy, maintain, scale, and secure. With fewer moving parts in your data architecture, you can focus on what really matters: innovation.
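Enrichment usually means joining an event stream against a table of reference data. The minimal Python sketch below is a conceptual model of that idea, roughly what a stream-table `LEFT JOIN` does: table updates only change state, while each stream event looks up the table's current value. The event shapes and the `enrich` function are hypothetical and are not part of ksqlDB's API.

```python
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical event shapes for this sketch:
#   ("customer", customer_id, name)           -> upsert into the customers table
#   ("order", order_id, customer_id, amount)  -> stream event to be enriched
Event = Tuple[object, ...]


def enrich(events: Iterable[Event]) -> Iterator[dict]:
    """Left-join order events against the latest state of a customers table."""
    customers: Dict[str, str] = {}  # latest name per customer_id (the "table")
    for event in events:
        if event[0] == "customer":
            _, customer_id, name = event
            customers[customer_id] = name  # table update: new state, no output
        elif event[0] == "order":
            _, order_id, customer_id, amount = event
            # Stream event: emit one enriched row using the table's current value.
            yield {
                "order_id": order_id,
                "customer_id": customer_id,
                "amount": amount,
                "customer_name": customers.get(customer_id),  # None if unknown yet
            }


if __name__ == "__main__":
    events = [
        ("customer", "c1", "Ada"),
        ("order", "o1", "c1", 9.5),
        ("order", "o2", "c2", 3.0),   # c2 not in the table yet -> None
        ("customer", "c2", "Grace"),
        ("order", "o3", "c2", 4.0),
    ]
    for row in enrich(events):
        print(row)
```

Note that order `o2` is enriched with `None` because the join uses the table as it stood when the order arrived; the later customer update does not retroactively change earlier output.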

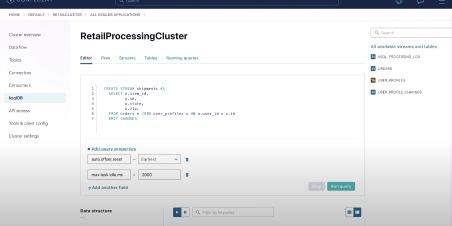

Start building real-time applications with simple SQL syntax

Build real-time applications with the same ease and familiarity as building traditional apps on a relational database, all through a lightweight SQL syntax. How does Kafka Streams compare to ksqlDB? ksqlDB is built on top of Kafka Streams, a lightweight yet powerful Java library for enriching, transforming, and processing real-time streams of data. Having Kafka Streams at its core means ksqlDB is built on well-designed, easily understood layers of abstraction. As a result, beginners and experts alike can unlock the full power of Kafka in a fun and accessible way.
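The layering idea can be illustrated with a toy model: a declarative filter-and-project query is turned into a chain of per-record operators, similar in spirit to how a SQL statement is compiled down to a stream-processing topology. This Python sketch is an assumption-laden illustration only; `where`, `select`, and `compile_query` are hypothetical helpers, not ksqlDB or Kafka Streams APIs.

```python
from typing import Callable, Dict, Iterable, Iterator, Mapping

Record = Mapping[str, object]
Operator = Callable[[Iterable[Record]], Iterator[Record]]


def where(predicate: Callable[[Record], bool]) -> Operator:
    """Stateless filter operator, analogous to a SQL WHERE clause."""
    def op(records: Iterable[Record]) -> Iterator[Record]:
        return (r for r in records if predicate(r))
    return op


def select(*columns: str) -> Operator:
    """Stateless projection operator, analogous to a SQL SELECT column list."""
    def op(records: Iterable[Record]) -> Iterator[Dict[str, object]]:
        return ({c: r[c] for c in columns} for r in records)
    return op


def compile_query(*operators: Operator) -> Operator:
    """Chain operators into one lazy pipeline (a very simplified 'topology')."""
    def run(records: Iterable[Record]) -> Iterator[Record]:
        for op in operators:
            records = op(records)
        return iter(records)
    return run


if __name__ == "__main__":
    # Roughly: SELECT order_id, amount FROM orders WHERE amount > 100
    large_orders = compile_query(
        where(lambda r: r["amount"] > 100),
        select("order_id", "amount"),
    )
    stream = [
        {"order_id": "o1", "customer_id": "c1", "amount": 50},
        {"order_id": "o2", "customer_id": "c2", "amount": 150},
    ]
    print(list(large_orders(stream)))
```

Because each operator is a generator, records flow through the pipeline one at a time, which is the property that lets the same query run continuously over an unbounded stream.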

Simplified architecture, advanced functionalities

Push and pull queries

Query tables and streams by either continuously subscribing to changing query results as new events occur (push queries) or looking up results at a point in time (pull queries), removing the need to integrate separate systems to serve each.
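To make the distinction concrete, here is a minimal Python sketch of a toy materialized table that serves both query styles from the same state. It is a conceptual model only: the class `MaterializedCount` and its `apply`, `pull`, and `push` methods are hypothetical. In ksqlDB itself, a push query is a `SELECT` ending in `EMIT CHANGES`, while a pull query looks up the current value in a materialized table.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Optional

Subscriber = Callable[[str, int], None]


class MaterializedCount:
    """Toy materialized table: running count of events per key."""

    def __init__(self) -> None:
        self._counts: DefaultDict[str, int] = defaultdict(int)
        self._subscribers: List[Subscriber] = []

    def apply(self, key: str) -> None:
        """Process one incoming event and push the changed row to subscribers."""
        self._counts[key] += 1
        for callback in self._subscribers:
            callback(key, self._counts[key])

    def pull(self, key: str) -> Optional[int]:
        """Pull query: point-in-time lookup of the current value (None if absent)."""
        return self._counts.get(key)

    def push(self, callback: Subscriber) -> None:
        """Push query: subscribe to every future change of the result."""
        self._subscribers.append(callback)


if __name__ == "__main__":
    table = MaterializedCount()
    table.push(lambda key, count: print(f"changed: {key} -> {count}"))
    for page in ["home", "cart", "home"]:
        table.apply(page)
    print("pull home:", table.pull("home"))
```

The push subscriber sees every intermediate update as it happens, whereas the pull lookup returns only the latest value at the moment it is asked.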

Fully managed and hosted

Eliminate the operational burden of running your own ksqlDB infrastructure by using the fully managed service in Confluent Cloud. With self-serve provisioning, in-place upgrades, and a 99.9% uptime SLA, you can focus on building useful application functionality instead of managing clusters.
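For a sense of scale, an uptime percentage translates directly into a downtime budget. The small Python helper below does that arithmetic under a simple assumption (a fixed 30-day period by default); `downtime_budget_minutes` is a hypothetical helper, and the actual measurement window and exclusions are defined by Confluent's SLA terms, not by this sketch.

```python
def downtime_budget_minutes(uptime_pct: float, period_days: float = 30.0) -> float:
    """Maximum downtime, in minutes, permitted by an uptime percentage over a period.

    Assumes the period is a fixed number of days (30 by default).
    """
    if not 0.0 <= uptime_pct <= 100.0:
        raise ValueError("uptime_pct must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - uptime_pct / 100)


if __name__ == "__main__":
    print(f"30 days:  {downtime_budget_minutes(99.9):.1f} min")
    print(f"365 days: {downtime_budget_minutes(99.9, 365):.1f} min")
```

At 99.9%, that works out to about 43.2 minutes per 30-day month, or roughly 8.8 hours per year.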

User-defined functions

Extend ksqlDB with custom functions that are specific to your use case. Leverage Java to express your own data processing logic, exposing it to the ksqlDB engine using convenient hooks.

Embedded connectors

Easily move data streams from existing systems in and out of ksqlDB. Rather than running a separate Kafka Connect cluster for capturing events, ksqlDB can run pre-built connectors directly on its servers.

Industry-leading security

Protect your data by using ksqlDB alongside Role-Based Access Control, Audit Logs, and Secret Protection. Confluent designs products with security in mind, so ksqlDB is secure by default.

Enterprise-level support

Get 24/7 access to expert guidance for faster issue resolution and bug fixes. Confluent’s experts support not only your ksqlDB needs but also any needs across the entire platform for data in motion.

Ready to get started?

Getting started with ksqlDB is easy. Sign up today, get a demo, or join one of our hands-on workshops.

Join us for a live demo

Try ksqlDB for free

Virtual hands-on lab

Free ksqlDB 101 Course

Additional resources

Building Stream Processing Applications with Confluent

Streaming Applications with Zero Infrastructure

Develop a Streaming ETL pipeline from MongoDB to Snowflake with Apache Kafka

Docs: ksqlDB
