
Apache Flume: An Introduction

Apache Flume is an open-source distributed system that originated at Cloudera and is developed under the Apache Software Foundation. Designed to efficiently collect, aggregate, and move data from various sources to a centralized storage or processing system, Flume is commonly used in big data environments.

Confluent’s fully managed, cloud-native data streaming platform automates data ingestion, aggregation, and streaming data pipelines. With 120+ connectors, you can move high-volume data streams from any data source to any sink, in real time.

Architecture Overview

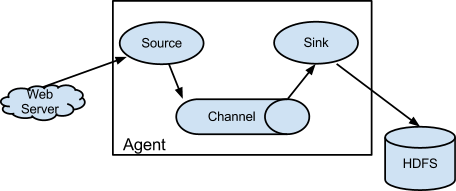

Apache Flume’s architecture revolves around a simple yet powerful concept of data flow pipelines. It consists of three main components: sources, channels, and sinks. Sources represent the data origin, channels act as intermediate storage for the data, and sinks are the final destinations where the data is delivered. This modular architecture allows flexibility in configuring the data flow and ensures fault tolerance and scalability.
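The source, channel, and sink roles described above can be sketched in a few lines of Python. This is a toy in-memory model for illustration only, not Flume's API (Flume agents are Java processes); the names `Event`, `MemoryChannel`, `run_source`, and `run_sink` are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Event:
    """A unit of data: a byte payload plus optional string headers."""
    body: bytes
    headers: dict = field(default_factory=dict)


class MemoryChannel:
    """Bounded in-memory buffer sitting between a source and a sink."""

    def __init__(self, capacity: int = 100):
        self.capacity = capacity
        self._queue = deque()

    def put(self, event: Event) -> None:
        if len(self._queue) >= self.capacity:
            raise OverflowError("channel is full")
        self._queue.append(event)

    def take(self):
        # Returns None when the channel is empty.
        return self._queue.popleft() if self._queue else None


def run_source(lines, channel: MemoryChannel) -> None:
    """Source: turn each incoming log line into an event and buffer it."""
    for line in lines:
        channel.put(Event(body=line.encode("utf-8")))


def run_sink(channel: MemoryChannel, destination: list) -> None:
    """Sink: drain the channel and deliver each event body to the destination."""
    while (event := channel.take()) is not None:
        destination.append(event.body.decode("utf-8"))


channel = MemoryChannel(capacity=10)
store = []  # stands in for a centralized store (assumption for this sketch)
run_source(["GET /index.html 200", "GET /missing 404"], channel)
run_sink(channel, store)
print(store)
```

Because the channel sits between the two halves, the source and the sink never call each other directly, which is what lets each side be swapped or scaled independently.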

An example use case of Apache Flume could be collecting log data from multiple servers and aggregating it into a centralized logging system. Flume can efficiently handle data from diverse sources, such as log files, network streams, or social media feeds, making it suitable for a wide range of data ingestion scenarios.

While Flume specializes in reliable and scalable data ingestion, usually to Hadoop, Apache Kafka is primarily designed as a distributed streaming platform that provides high-throughput, fault-tolerant publish-subscribe messaging.

Kafka can also be used as a log ingest pipeline to Hadoop. Because it also offers strong durability guarantees and real-time stream processing, Kafka is a good choice for use cases that fall outside Flume’s areas of strength, such as streaming applications and data integration across various systems.

How Flume Works

A Flume agent hosts the components through which events flow from an external source to the next destination (hop):

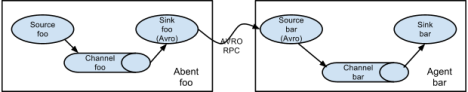

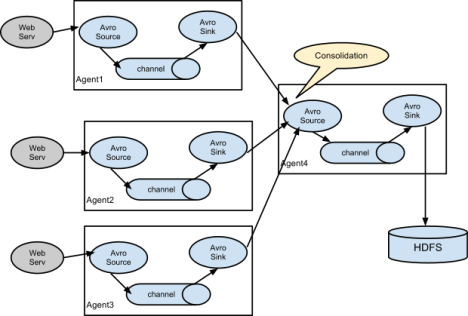

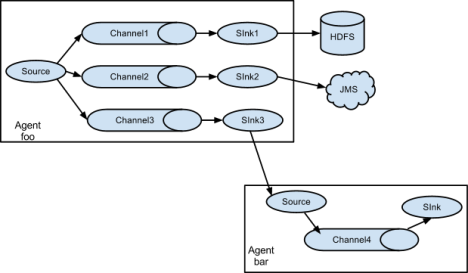

Flume allows users to build different data flow architectures, including multi-hop flows, fan-in, fan-out, and contextual routing.

In a multi-hop flow, Flume agents are strung together:

In a consolidation flow, multiple Flume agents are linked to a single sink agent that writes to the target sink:

Flume can support writing to multiple sinks from the same source, employing contextual routing and fan-out architecture patterns:

Flume agents are lightweight Java processes that can be installed alongside a data source either on the same host or the same local network. This enables efficient collection of log data that would otherwise roll away. Keeping this data and moving it to a single place allows it to be aggregated, processed and queried for insights such as user behavior, cross-channel data correlation, service level quality, and so on.
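The flow patterns named in this section (multi-hop, consolidation or fan-in, fan-out, and contextual routing) can be illustrated with a small Python sketch. It models events as dictionaries and channels as plain lists; the helper names `replicate`, `route_by_header`, and `forward` are hypothetical and are not part of Flume.

```python
def replicate(event, channels):
    """Fan-out: copy the event into every downstream channel."""
    for channel in channels:
        channel.append(event)


def route_by_header(event, header, mapping, default):
    """Contextual routing: choose one channel from a header value."""
    target = mapping.get(event["headers"].get(header), default)
    target.append(event)


def forward(channel, next_hop):
    """Multi-hop / fan-in: drain one agent's channel into the next agent's."""
    while channel:
        next_hop.append(channel.pop(0))


# Two edge agents consolidate (fan-in) into one collector agent.
web1 = [{"headers": {"level": "ERROR"}, "body": "disk full"}]
web2 = [{"headers": {"level": "INFO"}, "body": "started"}]
collector = []
forward(web1, collector)
forward(web2, collector)

# The collector routes by the "level" header and also keeps a full archive copy.
alerts, general, archive = [], [], []
for event in collector:
    route_by_header(event, "level", {"ERROR": alerts}, default=general)
    replicate(event, [archive])

print(len(alerts), len(general), len(archive))
```

In this sketch the routing decision depends only on event headers, so the payload never needs to be parsed at the hop that routes it.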

What Is Apache Flume Used for?

Apache Flume is a distributed and reliable system designed for efficiently collecting, aggregating, and transporting large amounts of streaming data. It is commonly used in big data environments to ingest log files, social media data, clickstreams, and other high-volume data sources.

Flume is most often chosen for its flexible and scalable architecture, which enables data ingestion from various sources, including web servers, databases, and application logs. It uses a modular design with customizable components to move data from the source to a distributed filesystem or data lake, where it can be analyzed by data processing frameworks such as Apache Hadoop and Apache Spark. By simplifying data ingestion, Flume helps ensure reliable delivery and fault tolerance in distributed systems.

Common use cases:

Log Collection

Apache Flume is frequently used for collecting log files from various sources, such as web servers, application servers, and network devices. For example, Flume can be configured to ingest Apache web server logs and transport them to a centralized storage or analytics system.

Social Media Data Ingestion

Flume is suitable for capturing and processing real-time social media data from platforms like Twitter, Facebook, or Instagram. It enables organizations to extract valuable insights and perform sentiment analysis on social media streams.

Data Replication

Flume can replicate data across multiple systems or data centers for redundancy and backup purposes. It ensures data availability and disaster recovery. For instance, Flume can replicate data from a primary database to a secondary backup database.

Data Aggregation

Flume can aggregate data from multiple sources into a single destination. This is useful when dealing with data from distributed systems or different applications that need to be combined for analysis. For example, Flume can aggregate logs from multiple web servers into a central log repository.

Clickstream Analysis

Flume can capture and process clickstream data, which provides valuable insights into user behavior and website usage patterns. It enables businesses to analyze user navigation, page views, and interactions to optimize their websites or applications.

Internet of Things (IoT) Data Ingestion

Flume can handle the high-volume and real-time data generated by IoT devices. It allows organizations to collect and process sensor data, telemetry data, and other IoT-generated data for monitoring, analysis, and decision-making.

Data Pipeline

Flume serves as a component in data pipelines, facilitating the flow of data between various stages. It can connect data producers with processing frameworks like Apache Hadoop or Apache Spark. For example, Flume can ingest data from a messaging system and feed it into a Spark streaming job for real-time processing.

ETL (Extract, Transform, Load)

Flume can be part of an ETL process, where it extracts data from different sources, applies transformations if needed, and loads it into a target system or data warehouse. For instance, Flume can extract data from a database, transform it to a desired format, and load it into a data lake.
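As a rough illustration of the extract, transform, and load stages just described, the following Python sketch parses raw access-log lines into structured records and writes them out as JSON lines. The log format (`METHOD PATH STATUS`), the function names, and the `data_lake` list are assumptions made for this example, not anything Flume defines.

```python
import json


def extract(raw_lines):
    """Extract: yield non-empty raw records from the source."""
    for line in raw_lines:
        line = line.strip()
        if line:
            yield line


def transform(line):
    """Transform: parse 'METHOD PATH STATUS' into a structured record."""
    method, path, status = line.split()
    return {"method": method, "path": path, "status": int(status)}


def load(records, target):
    """Load: append each record to the target as a JSON line."""
    for record in records:
        target.append(json.dumps(record, sort_keys=True))


data_lake = []  # stands in for the target system (assumption)
raw = ["GET /index.html 200\n", "\n", "POST /login 302\n"]
load((transform(line) for line in extract(raw)), data_lake)
print(data_lake)
```

Each stage is a separate function, so a transformation can be changed or removed without touching how records are read or written.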

Data Streaming and Real-Time Analytics

Flume enables real-time data streaming for analytics applications. It can capture and deliver data streams to analytics platforms or real-time dashboards for immediate insights and decision-making.

Data Archiving

Flume can be utilized for archiving historical data by ingesting data from various sources and storing it in a long-term storage system. This is particularly useful for compliance, audit, or historical analysis purposes.

Benefits

Apache Flume effectively solves the problem of scalable data ingestion and movement, usually from an event data source to a data repository, distributed filesystem, or data lake, making data available for processing by a framework such as Hadoop or Spark.

The data ingestion problem is often overlooked and underappreciated. Ingesting data from a single type of data source is pretty straightforward, but as soon as data needs to be ingested from heterogeneous sources with an SLA, frameworks such as Flume become increasingly useful.

Challenges

Flume is subject to a lot of the same challenges that are inherent in distributed “Big Data” systems, such as:

- Reliability: Ensuring data reliability during ingestion and transmission can be a challenge for Flume, necessitating fault tolerance and data recovery mechanisms to minimize data loss or corruption.

- Complexity: Flume's configuration and deployment can be complex, especially for users with limited experience, demanding simplified interfaces or tools to streamline the setup process.

- Data Transformation: Flume may encounter challenges in handling data transformation tasks, such as data enrichment or formatting, which require additional processing components or custom development.

- Real-Time Processing: Providing real-time data ingestion and processing capabilities can be challenging for Flume, necessitating efficient buffering and streaming mechanisms to minimize latency and ensure timely data delivery.
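To make the reliability point concrete, here is a minimal Python sketch of one common recovery mechanism: a sink takes a batch from a channel inside a transaction and rolls it back if the destination write fails, so no events are lost. It is loosely modeled on the idea of a transactional hand-off between channel and sink; the class and function names are hypothetical and do not mirror Flume's Java interfaces.

```python
from collections import deque


class TransactionalChannel:
    """Queue whose taken events stay recoverable until commit."""

    def __init__(self, events):
        self._queue = deque(events)
        self._in_flight = []

    def take(self):
        if not self._queue:
            return None
        event = self._queue.popleft()
        self._in_flight.append(event)
        return event

    def commit(self):
        # Delivery succeeded: forget the in-flight events.
        self._in_flight.clear()

    def rollback(self):
        # Delivery failed: put in-flight events back at the head, in order.
        self._queue.extendleft(reversed(self._in_flight))
        self._in_flight.clear()

    def __len__(self):
        return len(self._queue)


def deliver_batch(channel, write, batch_size=2):
    """Return True if a batch was written and committed, False if rolled back."""
    batch = []
    while len(batch) < batch_size:
        event = channel.take()
        if event is None:
            break
        batch.append(event)
    try:
        write(batch)
    except IOError:
        channel.rollback()
        return False
    channel.commit()
    return True


def failing_write(batch):
    raise IOError("destination unavailable")


channel = TransactionalChannel(["e1", "e2", "e3"])
delivered = []
ok_first = deliver_batch(channel, failing_write)      # rolled back
ok_second = deliver_batch(channel, delivered.extend)  # retried successfully
print(ok_first, ok_second, delivered, len(channel))
```

The trade-off in this design is that a retried batch may be written more than once if the destination failed after a partial write, so it gives at-least-once rather than exactly-once delivery.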

How Confluent Can Help

Flume is primarily used for log ingestion, typically into Hadoop for batch processing. Kafka can serve the same purpose, and its capabilities are often considered a superset of Flume’s.

Organizations that use both Flume and Kafka together have a variety of choices, such as a Kafka sink in Flume (dubbed “Flafka”) where Flume can be configured as a Kafka producer. Likewise, there is a source connector for Flume in the Kafka Connect ecosystem.

It’s worth noting that although it is called a “sink” connector from the perspective of Flume, Flume usually serves as a source from the point of view of Kafka. When Flume and Kafka are integrated, data is usually moving from Flume to Kafka. This speaks to the more generalized utility of Kafka as a framework.

Users who are interested in Apache Flume may also be interested in Confluent and Apache Kafka, since Kafka can be deployed to solve many of the same ingestion problems while also offering capabilities such as real-time stream processing, broad connectivity, distributed publish/subscribe, and event-driven microservices architectures.