
When Should You Spin Up Your Next Apache Kafka Cluster?

A common question we encounter is “When is it a good time to spin up another Apache Kafka® cluster?” And the counter question to this has always been “Why do you need one more cluster?” Most of the time, a five-to-six node Kafka cluster (or 1 to 2 CKU Confluent Cloud cluster) easily services a huge volume of messages with better-than-expected throughput, latency, and plenty of room for more traffic. So, when do you need another cluster?

The physical limits of a single cluster

As stated in Jun Rao’s blog post, the safe physical limit of a single cluster is 200,000 partitions in total, or 4,000 partitions per broker. (This also depends on the hardware you are using.) Some users run 9,000 partitions per broker without serious issues, but they use very high-end infrastructure. If you are likely to exceed these limits then, yes, you should consider another cluster. Moreover, with Confluent Cloud and KRaft, these limits continue to expand.

Network bandwidth, allocated storage, CPU, and memory also count toward the limits of your cluster, and these depend on the infrastructure you are using. Partition count is used as the example metric here because it is intrinsic to Kafka itself.
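As a rough illustration of the partition limits quoted above, the following Python sketch estimates how much partition headroom a cluster has left. The function name and the choice to take a single total partition count as input are assumptions made for illustration, and the two limits are rules of thumb that vary with your hardware.

```python
# Limits quoted in the text above; treat them as rules of thumb, not hard caps.
MAX_PARTITIONS_PER_BROKER = 4_000
MAX_PARTITIONS_PER_CLUSTER = 200_000


def partition_headroom(broker_count: int, total_partitions: int) -> int:
    """Return how many more partitions fit before a limit is reached.

    A negative result means the cluster is already past the safe limit.
    Hypothetical helper: whether total_partitions includes replicas is an
    assumption you need to settle for your own deployment.
    """
    if broker_count <= 0:
        raise ValueError("broker_count must be positive")
    # The effective ceiling is whichever limit is reached first.
    limit = min(broker_count * MAX_PARTITIONS_PER_BROKER,
                MAX_PARTITIONS_PER_CLUSTER)
    return limit - total_partitions
```

Under these numbers a six-broker cluster tops out at 24,000 partitions, so for small clusters the per-broker limit is the one you reach first; the cluster-wide limit only takes over at around 50 brokers.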

Why use multiple Kafka clusters?

Kafka operators are wary of running many applications on a single cluster. Common reasons for this include:

Data privacy: Accidentally or intentionally exposing data in the Kafka topics outside the group that it was meant for.

Noisy neighbors: One or more client applications consuming the majority of the cluster resources and starving others.

Chargeback: Correctly billing a team for its proportionate share of the cluster usage.

Operations overhead: More applications mean more operations overhead.

Segregation of analytical and transactional data: Is it justified to use the same cluster for processing both types of data?

Compromising SLA: Risk of SLA compromise if too many applications are onboarded.

None of these concerns should be an issue because Confluent Cloud, Confluent Platform, and Apache Kafka were designed for internet-scale messaging and have a wide range of security features that cover every aspect of security, such as AuthN, AuthZ, data security (in-transit and at rest), audit log, etc., that protect your data. Features such as client quotas and authorization ensure that data privacy and noisy neighbor scenarios can be easily handled. Features such as role-based access control (RBAC) ensure that SecOps can be divided and delegated to relevant owners or groups to reduce the operations overhead.

Valid reasons for using multiple clusters

However, some valid reasons for needing multiple clusters include:

Compliance: For example, PCI DSS requires strict separation between payment card data and other product data, so you will end up using separate clusters.

Doing business in multiple geographic regions: It is usually best for cost, latency, and development time to have a Kafka cluster available in every region where applications will be running.

Disaster recovery (DR): Setting up a disaster recovery cluster in a separate cloud or geographic region so that your business can continue operations even during a regional outage.

Allowing individual lines of business to operate and grow their Kafka deployments independently, with the scale, administration, and setup that works best for them.

Advantages of a single cluster

The value of Apache Kafka lies beyond internet-scale, high-throughput, low-latency messaging. Kafka’s data streaming capabilities allow event data to be correlated while your data is still in motion. Traditionally, relational operations have been applied to data at rest. Confluent ksqlDB, Kafka Streams, and Apache Flink with Apache Kafka allow the same kinds of operations (unions, intersections, joins, and aggregations) on data while it is still in motion, to derive business insights in real time.

This capability empowers you to correlate two events while the data is still in flight. For example:

Card transactions originating from two disparate geographic locations at the same time (card theft)

Item sales with item inventory

Correlating trade volumes between different asset classes

Validating the authenticity of insurance claims with incident reports

Concurrent loan applications received on multiple channels from the same borrower, collectively exceeding the permissible debt-to-income ratio (fraud)

Or even the sale of beer with that of diapers
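To make the first example in this list concrete, here is a minimal sketch of correlating card transactions in flight. It uses plain Python rather than ksqlDB, Kafka Streams, or Flink, so it only illustrates the windowed-correlation idea and not any of those APIs. The class and field names are hypothetical, the 60-second window is an assumed stand-in for "at the same time", and events are assumed to arrive in timestamp order for each card.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class CardTxn:
    card_id: str
    city: str
    ts: float  # event time in seconds (assumed to be non-decreasing per card)


class GeoConflictDetector:
    """Flags a card that is used in two different cities within a time window."""

    def __init__(self, window_s: float = 60.0):
        self.window_s = window_s
        # card_id -> transactions seen within the current window
        self._recent = defaultdict(deque)

    def process(self, txn: CardTxn) -> list:
        recent = self._recent[txn.card_id]
        # Drop transactions that have fallen out of the window.
        while recent and txn.ts - recent[0].ts > self.window_s:
            recent.popleft()
        # Pair the new transaction with every in-window one from another city.
        alerts = [(old, txn) for old in recent if old.city != txn.city]
        recent.append(txn)
        return alerts
```

The detector keeps state per card, which is the same reason a stream processor wants both sides of a correlation keyed the same way and readable from one cluster.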

Now imagine that the data for such events is in two different clusters. In that case, you will need system integration between your two Kafka clusters. Moreover, the event correlation could be slightly delayed depending on how far apart those clusters are. Furthermore, two replicated clusters are only eventually consistent, so at any given moment one may not yet hold events that the other already has.

Whereas if all of your business-critical data is in the same cluster, event correlation can be simpler and faster.

This is among the most important reasons for using a single cluster. Others include:

Economies of scale (fewer nodes) when self-managing to support the same level of throughput

Because a Kafka Connect, ksqlDB, or Kafka Streams cluster can only bootstrap to one Kafka cluster, you need just one of each instead of several, so the advantages of a single cluster carry over to those components as well

How should you use multiple Kafka clusters?

If you set up multiple Kafka clusters for reasons such as exceeding the per-cluster partition limit, compliance, or for the type of data being handled (transactions versus analytics) then use the guidelines below:

As much as possible, keep or aggregate all of your business-critical data in the same cluster—this facilitates faster business insights and timely action

Consider a second cluster for non-mission-critical data like data from internal corporate systems (HR, finance, or operations)

If you set up multiple clusters, you can still make the data across them available together for co-processing by connecting the clusters to each other.

Confluent Cluster Linking along with Schema Linking makes it possible to exchange data between multiple clusters without requiring a Kafka Connect cluster, unlike Apache MirrorMaker 2.

This frees up your data to flow seamlessly across your enterprise and partner network. Be wary of deploying isolated clusters that can’t communicate, as they proliferate data silos across your organization.

How do you run and operate large clusters?

With large clusters that are exposed as enterprise-wide shared services, operational tasks can be daunting. You may feel that you need an army of operators to manage such services. In reality, if you follow a federated model, such services can be managed by smaller teams. Moreover, your operations overhead will reduce drastically if you consider migrating your clusters to a cloud-native SaaS solution like Confluent Cloud.

Here are the best practices for a federated service governance approach for your large Confluent Platform, Confluent Cloud, or Apache Kafka clusters:

Include only the core cluster components in the scope of the shared service: Kafka brokers, ZooKeeper, Schema Registry. These components are the heart of any Kafka cluster. Most of the resources are provisioned here, e.g., topics, ACLs, storage, data quality (schemas), users, TLS certificates, etc. These components also determine the quality of service for your cluster, e.g., throughput, availability, DR, and more. As such, they should be owned and managed by the shared services team.

Delegate the responsibility of installing and managing Confluent Platform component sub-clusters to your line of business (LOB) teams, e.g., sub-clusters such as Kafka Connect and ksqlDB.

With the federated service governance approach the responsibilities for service operation are divided between your shared services team and the LOB teams.

Responsibilities of the shared services team

All of the responsibilities listed below apply only to the shared service cluster and do not extend to any Confluent Platform component sub-clusters owned by the LOB teams.

Infrastructure provisioning

Security

Capacity assurance

Providing self-service operations capabilities

Managing operations

Providing monitoring

Data governance

Let's focus on two important aspects, security and capacity, as the implications need due consideration. We will also consider self-service and data governance briefly.

Server security

The shared services team is responsible for securing all aspects of the central cluster which includes:

Exposing required listeners (ports)

Securing each listener with authentication and TLS

Managing TLS certificates

Authorization and quotas

Audit logs

User provisioning

Rotating keys and credentials

Disk/volume encryption

In the list above, authorization is the most complex task. It requires providing selective access to resources such as Kafka topics, consumer groups, schemas, and transaction IDs, user by user. Fortunately, Confluent provides RBAC, which makes this task highly scalable because it allows delegating this responsibility to the LOB teams with roles such as ClusterAdmin, ResourceOwner, etc. As such, this task can be exposed as a self-service capability and automated using, for example, Confluent’s Terraform Provider.

The rest of the tasks in the list above, although not delegatable, can be automated.

Capacity assurance

Let’s say you have exposed a self-service task for the creation of topics. An LOB team submits an online form that starts a workflow. When the form comes for your approval, you find that the team is requesting the creation of a topic that will process 100 GB of data each day. Unfortunately, you can’t fulfill this request as your cluster does not have sufficient storage capacity. What do you do?
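To see why a request like this can exhaust a cluster's storage, here is a back-of-the-envelope sizing sketch. The seven-day retention period and replication factor of 3 are assumptions chosen for illustration (they are not stated in the request above), and the estimate ignores compression and index overhead.

```python
def required_storage_gb(ingest_gb_per_day: float,
                        retention_days: float = 7.0,
                        replication_factor: int = 3) -> float:
    """Estimate the cluster-wide disk footprint of a topic at steady state.

    Hypothetical helper: every retained day of data is stored once per
    replica, so the footprint is ingest x retention x replication.
    """
    return ingest_gb_per_day * retention_days * replication_factor


# The 100 GB/day topic from the example above, under the assumed defaults.
estimate = required_storage_gb(100)
```

Under these assumptions the 100 GB per day topic needs about 2,100 GB of disk across the cluster, which is why a single request can exceed the spare capacity of a modest deployment.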

You may have noticed two things:

Any approval-seeking step in your self-service workflow defeats its purpose.

You can’t automate all your resource provisioning self-service tasks.

There are best practices available to handle these challenges:

Provision extra capacity in your cluster when you build it.

The “extra” part of the capacity should be linked to your business growth forecasts. Make your business partners accountable for the forecasts.

No matter how accurate the business forecasts are, things can go wrong since no one can predict the macroeconomic factors. If that happens, it is easy to provision additional storage and CPU these days thanks to containerization and virtualization (read Kubernetes and cloud).

If you are using Confluent Cloud, you can add more CKUs (Confluent Units for Kafka) by dragging a slider bar or by using the API or CLI.

Build resource capacity ranges in your service offerings so that no approval is necessary for certain capacity thresholds, e.g., up to 20 topics a day not exceeding a combined data consumption of 20 GB per day, beyond which an approval from the shared services team would be required.

Enforce bandwidth consumption quotas for each team using the Quotas feature so that no single team is able to consume more than their fair share of the available cluster bandwidth. Learn more about this feature in the documentation:

Enforcing client quotas (Apache Kafka and Confluent Platform)
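The capacity-range practice above can be sketched as a small rule in a self-service workflow. This is a minimal illustration only: the class, function, and constant names are hypothetical, and the thresholds simply reuse the example figures of 20 topics and 20 GB per day.

```python
from dataclasses import dataclass

# Example thresholds taken from the text; tune them to your own service offering.
MAX_TOPICS_PER_DAY = 20
MAX_COMBINED_GB_PER_DAY = 20.0


@dataclass
class DailyUsage:
    """What a team has already provisioned today (hypothetical record)."""
    topics_created: int = 0
    gb_per_day: float = 0.0


def needs_approval(usage: DailyUsage, requested_gb_per_day: float) -> bool:
    """Return True if creating one more topic exceeds the pre-approved range."""
    topics_after = usage.topics_created + 1
    gb_after = usage.gb_per_day + requested_gb_per_day
    return (topics_after > MAX_TOPICS_PER_DAY
            or gb_after > MAX_COMBINED_GB_PER_DAY)
```

Requests that stay inside the range can be provisioned automatically, and only the exceptions reach the shared services team.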

This discussion about capacity provisioning poses an interesting question:

Is Kafka “auto-scalable”?

Before we contemplate this, let’s first make it clear what we mean by auto-scaling. By auto-scaling we mean that, with the help of external system administration tools, it should be possible to allocate or deallocate computing resources such as CPU and storage for your Kafka cluster in proportion to demand (message traffic) and without direct human intervention.

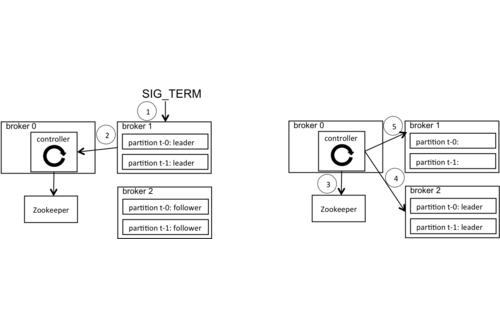

The answer is yes and no. Let’s answer the “yes” part first. You can add more broker nodes to an existing cluster or decommission nodes from it. Similarly, you can add more CKUs to Confluent Cloud clusters. With the Self-Balancing Cluster feature in Confluent Platform, you can ensure that topic partitions (and so the data/disk usage) are uniformly distributed across available nodes dynamically. Likewise, Confluent Cloud continuously balances your topic partitions across the nodes for you. So yes, Kafka is horizontally scalable.

However, you can’t reduce the partition count of an existing Kafka topic at all, and you can’t add partitions without losing message ordering for keyed data, because keys start mapping to different partitions. Moreover, you have to decide the partition count for your topic in advance by anticipating/planning the number of consumer instances. The partition count for your Kafka topics does not shrink or expand automatically based on the number of consumer instances you deploy (for example, in your Kubernetes cluster). So Kafka topics are not auto-scalable.
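The ordering problem comes from how keyed messages are assigned to partitions: the partition is derived from a hash of the key modulo the partition count, so changing the count changes where a key lands. The sketch below illustrates this with `zlib.crc32` as a stand-in hash. That is an assumption for illustration only, since Kafka's default partitioner uses murmur2, and the helper names are hypothetical.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition as hash(key) mod partition count.

    crc32 is a stand-in hash here; Kafka's default partitioner uses murmur2.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def moved_keys(keys, old_count: int, new_count: int) -> list:
    """Return the keys whose partition changes when the count changes."""
    return [k for k in keys
            if partition_for(k, old_count) != partition_for(k, new_count)]


keys = [f"order-{i}" for i in range(1000)]
moved = moved_keys(keys, old_count=6, new_count=8)
print(f"{len(moved)} of {len(keys)} keys land on a different partition")
```

Any key that moves has its older messages on one partition and its newer messages on another, and Kafka only guarantees ordering within a single partition.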

Self-service capabilities

Typically, companies expose a selected list of self-service capabilities as a UI workflow, an API, or a CLI, or integrate them with their favorite CI/CD tools for a DevOps-friendly approach.

Data governance

Given that your Apache Kafka clusters are used for data exchange between various independent teams, it is of paramount importance that you govern the structure of your data.

There are two possibilities for Kafka topics:

They are used only by one team which is very small.

They are used by multiple large teams which are spread wide and far.

In the first case, there is no problem—the topic and schemas can remain completely private and all access rights can be delegated to this single team. However, case #2 requires close coordination between multiple teams, so a well-thought-out governance process and structure are warranted.

A simple process is for the shared services team to retain ownership of the data and to route any data change through a workflow that requires approval from members of more than one of the teams that depend on those Kafka topics.
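One way to picture such an approval rule is the sketch below. It is a hypothetical illustration rather than a feature of Kafka or Schema Registry: the function name, the `(member, team)` input shape, and the default of two approving teams are all assumptions.

```python
def change_approved(approvals, dependent_teams, min_teams: int = 2) -> bool:
    """Decide whether a schema or topic change has enough cross-team sign-off.

    approvals: iterable of (member, team) pairs that approved the change.
    dependent_teams: set of teams that produce to or consume from the topic.
    Only approvals from dependent teams count, and each team counts once.
    """
    approving_teams = {team for _, team in approvals
                       if team in dependent_teams}
    return len(approving_teams) >= min_teams
```

Counting teams rather than individual approvers keeps one large team from pushing through a change that breaks the other consumers of the topic.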

Responsibilities of the LOB teams

The LOB teams have two main responsibilities:

Request resources on the shared service cluster for their teams

Manage operations for their LOB-specific sub-clusters

Provisioning resources on the shared services cluster includes using the self-service tasks to provision resources such as topics, users, and their access permissions. It also involves coordination with the shared services team as part of the provisioning workflow.

Managing the operations for their sub-clusters includes:

Provisioning infrastructure

Capacity assurance

Adding security (using the delegated roles provided by Confluent RBAC)

Managing operations

Where will this lead you?

When it comes to the capability maturity for your Kafka-based event streaming service the ultimate goal is to make it the central nervous system of your business. Following the best practices discussed here will lead your Kafka services in this direction.

If you follow this structure, policy, and practice, you can easily promote and reuse your Kafka clusters as shared infrastructure for your analytics needs too, as a data mesh. And your service governance will resemble the federated computational governance idea of data mesh and each of your LOB clusters will resemble the data mesh sub-domains. That said, Kafka is a great fit for both analytics and transactional data use cases.

To learn more, you can check out Confluent Cloud, a fully managed event streaming service based on Apache Kafka.

If you want more information on Kafka and event streaming, check out Confluent Developer to find the largest collection of resources for getting started, including end-to-end Kafka tutorials, videos, demos, meetups, podcasts, and more.
