Stream with Kafka powered by Kora

Basic

$0/Month

- Serverless, fully managed clusters with zero ops

- Autoscaling Kafka resources

- 80+ fully managed connectors

- Security and governance essentials

- Multicloud across AWS, Azure, and GCP

Standard

~$385/Month

- Everything in Basic

- High availability with 99.99% uptime SLA

- Infinite storage

- Audit logs to detect security threats

- 20%+ throughput savings that scale with usage

Enterprise

~$1,150/Month

- Everything in Basic and Standard

- Private networking

- GBps+ autoscaling

- Enhanced partition limits

- 32%+ throughput savings that scale with usage

| Tier | Basic | Standard | Enterprise |
|---|---|---|---|
| eCKU ($/eCKU-hour) | First eCKU free, then $0.14 | $0.75 | $2.25 |
| Data In/Out (Ingress/Egress) ($/GB) | $0.05 | $0.040 to $0.050 | $0.020 to $0.050 |
| Data Stored ($/GB-month) | $0.08 | $0.08 | $0.08 |
| Estimated Monthly Starting Cost | $0/Month | $385/Month | $1,150/Month |

eCKU: eCKUs, or Elastic CKUs, are units of horizontal scalability in Confluent Cloud that autoscale to meet demand. eCKU limits vary by cluster type.

Estimated Monthly Starting Cost: assumes 70% utilization, i.e., clusters are active ~70% of the time.
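The starting costs above appear to follow from the eCKU rate alone. The sketch below checks that arithmetic under two assumptions that this page does not state: a month of 730 hours, and a starting configuration of one eCKU with no data transfer or storage charges.

```python
# Sketch: reproduce the "Estimated Monthly Starting Cost" figures from the
# listed $/eCKU-hour rates.
# ASSUMPTIONS (not stated on the pricing page): a month has 730 hours, and the
# starting cost covers one eCKU only, with no data transfer or storage.
HOURS_PER_MONTH = 730   # assumption: 365 days * 24 hours / 12 months
UTILIZATION = 0.70      # from the table note: clusters active ~70% of the time

# $/eCKU-hour list prices from the pricing table
ECKU_RATE = {"Standard": 0.75, "Enterprise": 2.25}


def starting_cost(rate_per_ecku_hour: float, eckus: int = 1) -> float:
    """Monthly eCKU charge for a cluster that is active UTILIZATION of the time."""
    return rate_per_ecku_hour * eckus * HOURS_PER_MONTH * UTILIZATION


for tier, rate in ECKU_RATE.items():
    print(f"{tier}: ${starting_cost(rate):,.2f}/month")
```

Under these assumptions Standard comes to $383.25 and Enterprise to $1,149.75 per month, close to the ~$385 and ~$1,150 shown. Basic starts at $0 because its first eCKU is free.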

Features

| Tiers | Basic | Standard | Enterprise |
|---|---|---|---|
| Service | | | |
| Uptime SLA: SLAs cover both infrastructure and software, including on-demand Apache Kafka bug fixes. | 99.5% | 99.9% (1 eCKU); 99.99% (min. 2 eCKUs) | 99.9% (1 eCKU); 99.99% (min. 2 eCKUs) |
| Scale | | | |
| Scaling | Autoscaling | Autoscaling | Autoscaling |
| Throughput limit (ingress/egress) | 250/750 MBps | 250/750 MBps | 600/1,800 MBps |
| Partition limit | 4,096 | 4,096 | 30,000 |
| Storage limit | 5 TB | Infinite Storage | Infinite Storage |
| Self-balancing clusters: optimize resource utilization through a rack-aware algorithm that rebalances partitions across a Kafka cluster. | | | |
| Managed Components | | | |
| Connectors: easily stream data from/to common apps & data systems. | | | |
| Apache Flink: filter, join, and enrich data streams with simple, serverless stream processing. | | | |
| ksqlDB: build event streaming applications that use stream processing with a lightweight SQL syntax. | | | - |
| Stream Governance: the industry's only governance suite purpose-built for data in motion, including Schema Registry, Stream Catalog, and Stream Lineage. | | | |
| Network Connectivity | | | |
| Secure public endpoints | | | Private networking only |
| Private Link/Private Service Connect | - | - | |
| VPC/VNet peering: connect up to 5 VPCs/VNets to Confluent Cloud. | | | |
| AWS Transit Gateway | | | |
| Monitoring & Visibility | | | |
| Metrics API: consume pre-aggregated Kafka cluster- and topic-level metrics through an API. | | | |
| Security | | | |
| Data encryption: TLS v1.2 for in-transit data & native cloud provider disk encryption for data at rest. | | | |
| Kafka ACLs: enforce granular controls over Kafka topic access. | | | |
| SAML/SSO: leverage an existing identity provider (e.g., Okta, OneLogin, AD, Ping) to manage user authentication. | | | |
| Role-Based Access Control (RBAC): granular, resource-level authorization of access across user groups. | | | |
| Audit logs: user action logs to detect security threats & anomalies. | - | | |
| Customer managed keys (BYOK): bring your own key (BYOK) for an additional layer of security for data at rest, with support for the cloud provider's native key management service. | - | - | |
| Compliance | | | |
| SOC 1, SOC 2, SOC 3 | | | |
| ISO 27001, ISO 27701 | | | |
| PCI | | | |
| GDPR readiness | | | |
| HITRUST CSF certified, HIPAA-ready | | | |

FAQ

What are Elastic CKUs (eCKUs) and why are they important?

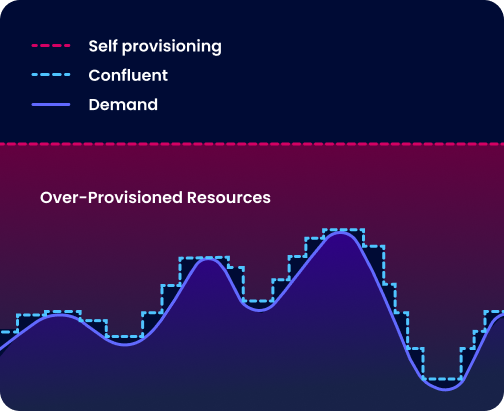

Elastic Confluent Units for Kafka (eCKUs) are a unit of horizontal scalability in Confluent Cloud. eCKUs automatically scale up to meet spikes in demand and back down as workloads shrink, with no user intervention required, so you pay only for the resources you use, when you actually need them. Never worry about cluster sizing and provisioning or overpaying for resources again. Autoscaling eCKUs provide a truly serverless experience that saves you time and money, letting you focus on innovation and business logic while lowering costs.
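To illustrate the pay-for-what-you-use point, the sketch below compares billing a day of autoscaled eCKU usage against paying for peak capacity around the clock. The hourly eCKU counts are invented for illustration, the $0.75 rate is the Standard list price from the pricing table, and the Basic helper assumes, without confirmation from this page, that the free first eCKU applies to each hour independently.

```python
# Sketch: compare billing actual eCKU usage hour by hour with paying for a
# cluster sized for peak load all day.
# The hourly eCKU counts are invented illustration data, not measurements.
STANDARD_RATE = 0.75  # $/eCKU-hour, Standard list price from the pricing table
BASIC_RATE = 0.14     # $/eCKU-hour after the first (free) eCKU, Basic list price


def ecku_cost(hourly_eckus: list[int], rate: float) -> float:
    """Bill each hour for the eCKUs actually in use during that hour."""
    return sum(hourly_eckus) * rate


def basic_ecku_cost(hourly_eckus: list[int], rate: float = BASIC_RATE) -> float:
    """Basic tier: first eCKU free, then `rate` per additional eCKU-hour.

    ASSUMPTION: the free eCKU is applied to each hour independently.
    """
    return sum(max(n - 1, 0) for n in hourly_eckus) * rate


# Hypothetical day: quiet overnight, busier in the day, a 4-hour spike.
day = [1] * 8 + [2] * 8 + [4] * 4 + [2] * 4  # 24 hourly eCKU counts

autoscaled = ecku_cost(day, STANDARD_RATE)
peak_sized = ecku_cost([max(day)] * len(day), STANDARD_RATE)
print(f"autoscaled: ${autoscaled:.2f}, sized for peak: ${peak_sized:.2f}")

# Basic: 12 hours at 1 eCKU, then 12 hours at 2 eCKUs
print(f"Basic, 1-2 eCKUs: ${basic_ecku_cost([1] * 12 + [2] * 12):.2f}")
```

For this made-up day the autoscaled cluster is billed for 48 eCKU-hours ($36.00), versus 96 eCKU-hours ($72.00) if 4 eCKUs were paid for around the clock.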

How is my monthly bill calculated?

Confluent monthly bills are based upon resource consumption, i.e., you are only charged for the resources you use when you actually use them:

- Stream: Kafka clusters are billed for eCKUs/CKUs ($/hour), networking ($/GB), and storage ($/GB-hour).

- Connect: Use of connectors is billed based on throughput ($/GB) and a task base price ($/task/hour).

- Process: Use of stream processing with Confluent Cloud for Apache Flink is calculated based on CFUs ($/minute).

- Govern: Use of Stream Governance is billed based on environment ($/hour).

Confluent storage and throughput are calculated in binary gigabytes (GB), where 1 GB is 2^30 bytes. This unit of measurement is also known as a gibibyte (GiB). Please also note that all prices are stated in United States Dollars unless specifically stated otherwise.

All billing computations are conducted in Coordinated Universal Time (UTC).
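Because billing is computed in UTC, usage near midnight in your local time zone can land in a different billing day or month than you might expect. A minimal sketch, assuming calendar-month billing periods and using a fixed UTC-8 offset as an example local zone:

```python
from datetime import datetime, timedelta, timezone

# ASSUMPTION: a fixed UTC-8 offset (US Pacific Standard Time) stands in for
# "your local time zone"; it avoids depending on a time-zone database.
PACIFIC_STANDARD = timezone(timedelta(hours=-8))


def billing_period(local_time: datetime) -> tuple[int, int]:
    """(year, month) of an aware timestamp once converted to UTC.

    ASSUMPTION: monthly billing periods are calendar months in UTC.
    """
    utc_time = local_time.astimezone(timezone.utc)
    return utc_time.year, utc_time.month


late_january = datetime(2024, 1, 31, 23, 30, tzinfo=PACIFIC_STANDARD)
print(late_january.astimezone(timezone.utc))  # 2024-02-01 07:30:00+00:00
print(billing_period(late_january))           # (2024, 2)
```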

Prices vary by cloud region. Learn more about Confluent Cloud Billing.
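As a rough sketch of the Stream portion of a bill, the function below adds eCKU-hours, data in/out, and storage, converting byte counts to binary GB (2^30 bytes) as described above. Assumptions: Standard-tier list prices from the pricing table ($0.75/eCKU-hour, $0.050/GB as the top of the data in/out range, $0.08/GB-month), storage charged on the average amount held over the month, and no regional price differences, discounts, or Connect, Flink, or Stream Governance charges. The function names are hypothetical.

```python
# Sketch of the "Stream" part of a monthly bill: eCKU-hours + data in/out + storage.
# ASSUMPTIONS: Standard-tier list prices from the pricing table, the top of the
# $0.040 to $0.050/GB data in/out range, storage billed on the average amount
# held during the month, and no regional pricing, discounts, or charges for
# Connect, Flink, or Stream Governance. Function names are hypothetical.
GIB = 2**30  # one billing GB is 2^30 bytes (a gibibyte)


def to_billing_gb(num_bytes: int) -> float:
    """Convert a byte count to binary gigabytes (GiB)."""
    return num_bytes / GIB


def stream_bill(
    ecku_hours: float,
    bytes_in: int,
    bytes_out: int,
    avg_bytes_stored: int,
    ecku_rate: float = 0.75,      # $/eCKU-hour (Standard)
    transfer_rate: float = 0.05,  # $/GB in or out (assumed top of Standard range)
    storage_rate: float = 0.08,   # $/GB-month
) -> dict[str, float]:
    """Return each Stream line item and the total, in USD."""
    ecku = ecku_hours * ecku_rate
    transfer = to_billing_gb(bytes_in + bytes_out) * transfer_rate
    storage = to_billing_gb(avg_bytes_stored) * storage_rate
    return {"ecku": ecku, "transfer": transfer, "storage": storage,
            "total": ecku + transfer + storage}


# One eCKU active 70% of a 730-hour month (511 hours), 100 GiB in,
# 300 GiB out, 50 GiB stored on average.
bill = stream_bill(ecku_hours=511, bytes_in=100 * GIB,
                   bytes_out=300 * GIB, avg_bytes_stored=50 * GIB)
for item, amount in bill.items():
    print(f"{item}: ${amount:,.2f}")

print(f"{to_billing_gb(10**9):.4f}")  # 10^9 bytes is only ~0.9313 billing GB
```

With these inputs the sketch totals $407.25: $383.25 for eCKUs, $20.00 for data in/out, and $4.00 for storage.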

How can I get Confluent discounts?

Confluent offers volume discounts based on usage. Please contact us or your Confluent account team for more details.

What are annual commitments?

Confluent Cloud offers the ability to make an annual commitment to a minimum amount of spend. This commitment gives you access to discounts and provides the flexibility to use this commitment across the entire Confluent Cloud stack, including any Kafka cluster type, Connect, Flink, Stream Governance, and Support. With self-serve provisioning and expansion, you have the freedom to consume only what you need from a commitment at any point in time. For more information about annual commitments, contact us.