Level Up Your KSQL

Now that KSQL is available for production use as a part of the Confluent Platform, it has never been easier to run the open-source streaming SQL engine for Apache Kafka®. Which is not to say that everything is entirely obvious to the new user. A beginning or even intermediate streaming SQL user might still need a hand, and we’re here to give you one!

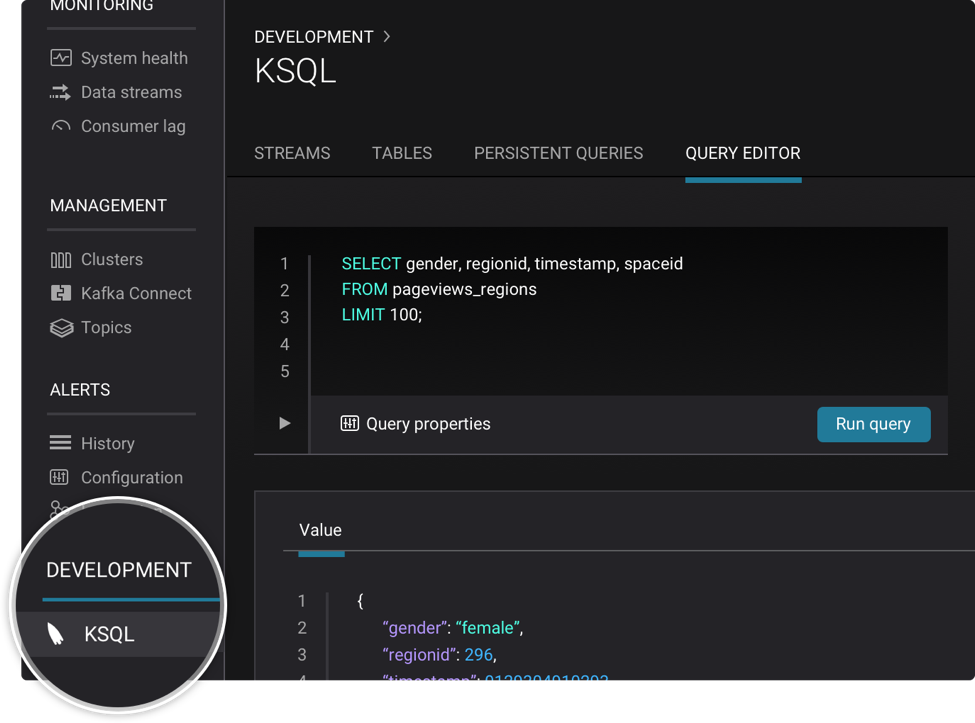

Maybe you’ve already been using KSQL, and you have fallen in love with its intuitive syntax for creating and enriching streams of real-time data. Maybe you run Confluent Platform, and you already love the handy KSQL user interface and the stream monitoring in Confluent Control Center, which tracks the performance of your KSQL queries.

Or maybe not yet. Regardless, now is the time to level up your KSQL. Whether you are brand new to it or ready to take it to production, you can now dive deep into core KSQL concepts, streams and tables, enriching unbounded data, data aggregations, scalability and security configurations, and more. Stay tuned over the next few weeks as we release the Level Up Your KSQL video series, which is designed to help you really understand KSQL.

There are more videos besides these. We also cover:

- Installing and Running KSQL

- KSQL Streams and Tables

- Reading Kafka Data from KSQL

- KSQL Use Cases

- Streaming and Unbounded Data in KSQL

- Enriching Data with KSQL

- Aggregations in KSQL

- Taking KSQL to Production

Interested in more? Learn more about what KSQL can do:

- Download the Confluent Platform and follow the Quick Start to get started with KSQL

- Watch the introductory video at the KSQL home page

- Check out the KSQL clickstream demo video or run it yourself

- Questions? Ask them in the #ksql channel on our community Slack group
