MongoDB

IoT Reference Architecture and Implementation Guide Using Confluent and MongoDB Realm

The Internet of Things (IoT)—connecting physical objects to software components—has created several innovations and opportunities for enterprise companies, and continues to grow in top industries, including healthcare, smart factories, energy, […]

Apache Kafka and R: Real-Time Prediction and Model (Re)training

Machine learning on real-time data is a powerful combination because you gain direct insights into your data, can make powerful decisions, and consequently improve your business processes and outcomes. It […]

Create a Data Analysis Pipeline with Apache Kafka and RStudio

In Data Science projects, we distinguish between descriptive analytics and statistical models running in production. Overall, these can be seen as one process. You start with analyzing historical data to […]

Building Real-Time Event Streams in the Cloud, On Premises, or Both with Confluent

To the developer or architect seeking to provide their business with as much value as possible, what is the best way to start working with data in motion? Choosing Apache […]

Announcing the MongoDB Atlas Sink and Source Connectors in Confluent Cloud

Today, Confluent is announcing the general availability (GA) of the fully managed MongoDB Atlas Source and MongoDB Atlas Sink Connectors within Confluent Cloud. Now, with just a few simple clicks, […]

Using the Fully Managed MongoDB Atlas Connector in a Secure Environment

Since the MongoDB Atlas source and sink became available in Confluent Cloud, we’ve received many questions around how to set up these connectors in a secure environment. By default, MongoDB […]

Analysing Changes with Debezium and Kafka Streams

Change Data Capture (CDC) is an excellent way to introduce streaming analytics into your existing database, and using Debezium enables you to send your change data through Apache Kafka®. Although […]

Announcing the MongoDB Atlas Sink and Source Connectors in Confluent Cloud

We are excited to announce the preview release of the fully managed MongoDB Atlas source and sink connectors in Confluent Cloud, our fully managed event streaming service based on Apache […]

Real-Time Fleet Management Using Confluent Cloud and MongoDB

Most organisations maintain fleets, a collection of vehicles put to use for day-to-day operations. Telcos use a variety of vehicles including cars, vans, and trucks for service, delivery, and maintenance. […]

Building a Telegram Bot Powered by Apache Kafka and ksqlDB

Imagine you’ve got a stream of data; it’s not “big data,” but it’s certainly a lot. Within the data, you’ve got some bits you’re interested in, and of those bits, […]

Getting Started with the MongoDB Connector for Apache Kafka and MongoDB

Together, MongoDB and Apache Kafka® make up the heart of many modern data architectures today. Integrating Kafka with external systems like MongoDB is best done through the use of Kafka […]

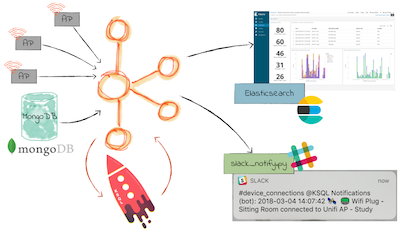

We ❤️ syslogs: Real-time syslog processing with Apache Kafka and KSQL – Part 3: Enriching events with external data

Using KSQL, the SQL streaming engine for Apache Kafka®, it’s straightforward to build streaming data pipelines that filter, aggregate, and enrich inbound data. The data could be from numerous sources, […]