
What is Event Sourcing?

Event sourcing is an approach that stores changes to application state as a sequence of events. Each change is recorded as an event, which contains the timestamp and nature of that specific change. Instead of keeping only static snapshots of our data, we use event sourcing to capture and store the events themselves.

Using event sourcing when designing software puts a greater emphasis on how data evolves over time. It captures the journey, rather than just the destination. This gives systems based on event sourcing access to richer data that can be used both for analytics and for developing new features. Event-sourced systems, and the developers who build them, are more capable of evolving rapidly to meet the changing needs of the business.

Why event sourcing

The business landscape is changing rapidly. Plans that were developed two years ago, or even six months ago, may not have the same relevance they once did. Businesses need to be able to adapt just as quickly. This requires them to have detailed analytics to study and predict customer behaviors. It also requires rich data models to build new use cases and adapt to customer needs.

However, traditional systems discard a lot of data. They capture the current state of the system, but not how it evolved over time.

How event sourcing works

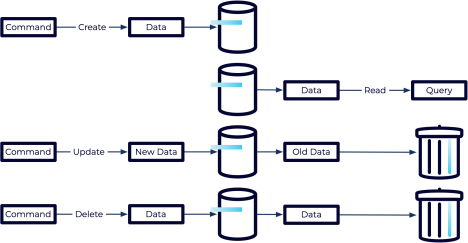

In a traditional system, users perform actions called commands. Commands trigger updates to data objects that are then persisted in a database. The interactions with the database can be categorized as either a create, read, update, or delete (CRUD) operation.

A create operation will add a new record to the database. A read operation will access data that is already there. But it's the update and delete that we are interested in because both operations are destructive. An update will overwrite the previous state with something new, essentially forgetting about the past history, while a delete actively destroys an existing record.
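To make the destructive nature of update and delete concrete, here is a minimal sketch using Python's built-in `sqlite3` module with an in-memory database. The `accounts` table and its columns are hypothetical names chosen for illustration.

```python
import sqlite3

# In-memory database; the "accounts" table is a hypothetical example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER)")

# Create: add a new record.
conn.execute("INSERT INTO accounts VALUES ('acc-1', 100)")

# Update: the previous balance (100) is overwritten and cannot be recovered.
conn.execute("UPDATE accounts SET balance = 70 WHERE id = 'acc-1'")

# Read: only the latest state is visible.
balance_after_update = conn.execute(
    "SELECT balance FROM accounts WHERE id = 'acc-1'"
).fetchone()[0]

# Delete: the record is destroyed along with any trace of its history.
conn.execute("DELETE FROM accounts WHERE id = 'acc-1'")
rows_after_delete = conn.execute("SELECT COUNT(*) FROM accounts").fetchone()[0]
```

After the update, nothing in the table indicates that the balance was ever 100 or why it changed.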

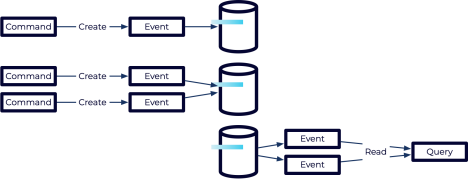

Event sourcing takes a different approach. When a command from a user is received, the event-sourced system will translate it into an event. The event can be used to update any state held in memory, but only the event is stored in the database. Events are considered to be something that happened in the past, and you can’t rewrite history. This means they can be created or read, but they can’t be updated or deleted. This prevents any potential for data loss because all events are maintained from the very beginning.

For example, in a banking system a command might be “WithdrawMoney.” The corresponding event could be “MoneyWithdrawn.” Note the difference in naming. Events are always written as past tense to illustrate their historical nature. When a “MoneyWithdrawn” event is applied, the in-memory balance of the bank account can be adjusted by the appropriate amount. However, the balance itself isn’t stored in the database—only the events are.
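The banking example can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: the `Account` class, its `handle` and `apply` methods, the validation rules, and the use of a plain list as the event store are all hypothetical choices made for the sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class WithdrawMoney:
    """Command: a request that may still be rejected."""
    account_id: str
    amount: int  # in cents


@dataclass(frozen=True)
class MoneyWithdrawn:
    """Event: a fact that has already happened (note the past tense)."""
    account_id: str
    amount: int
    occurred_at: datetime


class Account:
    def __init__(self, account_id: str, balance: int = 0) -> None:
        self.account_id = account_id
        self.balance = balance  # held in memory only, never persisted

    def handle(self, command: WithdrawMoney) -> MoneyWithdrawn:
        # Validate the command against current state, then produce an event.
        if command.amount <= 0:
            raise ValueError("amount must be positive")
        if command.amount > self.balance:
            raise ValueError("insufficient funds")
        return MoneyWithdrawn(
            self.account_id, command.amount, datetime.now(timezone.utc)
        )

    def apply(self, event: MoneyWithdrawn) -> None:
        # Applying an event only adjusts the in-memory state.
        self.balance -= event.amount


# A plain list stands in for an append-only event store (an assumption).
event_log: list[MoneyWithdrawn] = []

account = Account("acc-1", balance=10_000)
event = account.handle(WithdrawMoney("acc-1", 2_500))
event_log.append(event)  # only the event is stored
account.apply(event)     # the balance is derived from the event
```

Separating `handle` from `apply` keeps validation (which can fail) apart from state changes (which must always succeed when replaying events that have already been accepted).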

Rebuilding the event-sourced state

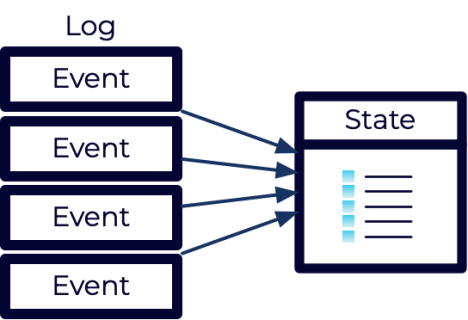

If the current state of the system isn’t stored in the database, then how do we get access to it? For example, if I’ve only stored deposits and withdrawals for the bank account, how do I determine the current balance?

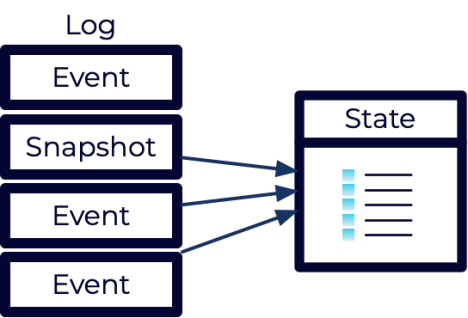

To determine the current state, the events are replayed from the beginning, applying the same changes as when the event was originally recorded. This allows the state to be reconstructed in memory. Starting from a balance of zero, all bank transactions would be reapplied to compute the current balance.
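Replaying is essentially a fold over the event log. The sketch below assumes two hypothetical event types, `MoneyDeposited` and `MoneyWithdrawn`, and uses a plain list in place of a real event store.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class MoneyDeposited:
    amount: int


@dataclass(frozen=True)
class MoneyWithdrawn:
    amount: int


def apply(balance: int, event) -> int:
    """Apply one event to the current balance and return the new balance."""
    if isinstance(event, MoneyDeposited):
        return balance + event.amount
    if isinstance(event, MoneyWithdrawn):
        return balance - event.amount
    raise TypeError(f"unknown event type: {type(event).__name__}")


def replay(events, initial_balance: int = 0) -> int:
    """Rebuild the balance by applying every event in order, starting at zero."""
    return reduce(apply, events, initial_balance)


events = [MoneyDeposited(100), MoneyWithdrawn(30), MoneyDeposited(50)]
current_balance = replay(events)  # 100 - 30 + 50 = 120
```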

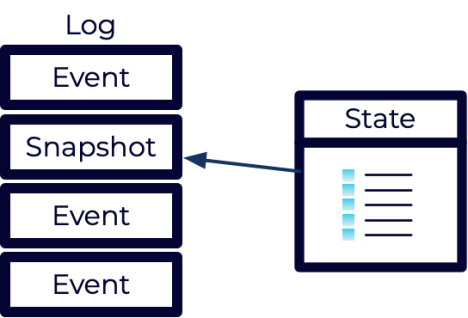

Over time, as the log of events grows, it may become inefficient to replay them all. In this case, event-sourced systems often leverage “snapshots”. These snapshots record the current state of the system at the time the snapshot is created.

When using snapshots, the system doesn’t need to replay all events. Instead, it can restore the most recent snapshot and only replay events that occurred after the snapshot was taken.

Once snapshots are introduced, this starts to look like a more traditional state-based system. A traditional system does the equivalent of taking a snapshot on every write. However, such systems don’t keep the event log, and they discard any old snapshots.

With event sourcing, although old snapshots can be discarded, they don’t have to be. They can be kept if they are valuable. Furthermore, snapshots are simply an optimization. They shouldn’t be created on every write, and at any point they can be thrown away because they can always be reconstructed from the events.
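The snapshot optimization can be sketched as follows. The `Event` and `Snapshot` shapes, the `sequence` numbering, and the helper names are assumptions made for this illustration; real event stores track positions in their own ways.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Event:
    sequence: int  # position in the log, starting at 1
    delta: int     # positive for a deposit, negative for a withdrawal


@dataclass(frozen=True)
class Snapshot:
    last_sequence: int  # sequence number of the last event included
    balance: int


def restore(events: list, snapshot: Optional[Snapshot] = None) -> int:
    """Start from the snapshot (if any) and replay only the later events."""
    balance = snapshot.balance if snapshot else 0
    start = snapshot.last_sequence if snapshot else 0
    for event in events:
        if event.sequence > start:
            balance += event.delta
    return balance


def take_snapshot(events: list) -> Snapshot:
    """Derive a snapshot from the events; it can always be rebuilt this way."""
    last = events[-1].sequence if events else 0
    return Snapshot(last_sequence=last, balance=restore(events))


log = [Event(1, 100), Event(2, -30), Event(3, 50)]
snapshot = take_snapshot(log)  # captures a balance of 120 at sequence 3
log.append(Event(4, -20))

with_snapshot = restore(log, snapshot)  # replays only event 4
without_snapshot = restore(log)         # replays all four events
```

Both calls produce the same balance, which is why a snapshot can be thrown away at any time without losing information.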

Benefits of event sourcing

Because an event-sourced system maintains the full history, it enables a lot of powerful features. Some of these are outlined below, but there are more.

Audit trail

Every action taken is stored as an event, which creates a natural audit trail for compliance and security. It should be possible to analyze the events and determine exactly how the current state was reached.

Historical queries

Events can be used to execute historical queries. Rather than looking only at the state as it currently exists, it can be replayed up to a specific time. This allows the exact state to be reconstructed at any point in the past.
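A historical query is a replay that stops at a chosen cutoff. The sketch below assumes a hypothetical `Transaction` event with an `occurred_at` timestamp and a log that is already in chronological order.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Transaction:
    occurred_at: datetime
    delta: int  # positive for a deposit, negative for a withdrawal


def balance_as_of(events: list, as_of: datetime) -> int:
    """Replay events in order, stopping at the first one after the cutoff."""
    balance = 0
    for event in events:  # assumed to be in chronological order
        if event.occurred_at > as_of:
            break
        balance += event.delta
    return balance


events = [
    Transaction(datetime(2024, 1, 5), 100),
    Transaction(datetime(2024, 2, 10), -30),
    Transaction(datetime(2024, 3, 15), 50),
]

end_of_february = balance_as_of(events, datetime(2024, 2, 28))  # 100 - 30 = 70
```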

Future-proofing

Businesses are always evolving and coming up with new ways to use data. The data that is thrown away today might be critical in the future. Because event-sourced systems never throw away data, they enable future developers to build features that nobody could have predicted would be necessary.

Performance and scalability

Event-sourced systems operate in an append-only fashion. This has implications for how the database is used because it eliminates the need for some types of database locks. These locks create contention, which decreases scalability and can impact performance. Reducing them improves the system's ability to perform at scale.

Resilience

What happens to a traditional system when a bug is introduced that miscalculates the current state? The old state is lost and the new state is corrupted. However, an event-sourced system doesn’t record the state. Instead, it records the intent. By capturing the intent, we have the potential to fix problems that would otherwise be impossible to fix. State can be restored to an earlier point to clear the corruption, and once the bug is fixed, the events can be replayed to calculate the correct state.
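As an illustration of recovering from a bug, consider a hypothetical `MoneyWithdrawn` event that carries a fee, and a state calculation that initially forgets to subtract it. The event fields, function names, and opening balance here are assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoneyWithdrawn:
    amount: int
    fee: int


def apply_buggy(balance: int, event: MoneyWithdrawn) -> int:
    # Bug: the fee is ignored when computing the new balance.
    return balance - event.amount


def apply_fixed(balance: int, event: MoneyWithdrawn) -> int:
    # Fix: subtract both the withdrawn amount and the fee.
    return balance - event.amount - event.fee


def replay(events, apply, opening_balance: int) -> int:
    """Recompute the balance from the stored events using the given logic."""
    balance = opening_balance
    for event in events:
        balance = apply(balance, event)
    return balance


# The stored events are unaffected by the bug in the calculation.
events = [MoneyWithdrawn(amount=100, fee=2), MoneyWithdrawn(amount=50, fee=1)]

corrupted = replay(events, apply_buggy, opening_balance=1000)  # 850
corrected = replay(events, apply_fixed, opening_balance=1000)  # 847
```

Because the events were recorded correctly, deploying the fixed logic and replaying is enough to arrive at the correct state.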

When not to use event sourcing

Not every application is a good fit for event sourcing. One major drawback is complexity. Adopting event sourcing requires careful design of the domain model and the event log, which can be difficult for teams, especially those that are new to both concepts.

Event sourcing also requires that systems be developed and maintained in ways that go against traditional practices, which may hinder adoption because of longer development cycles.

Storage is another consideration. Because every state change is recorded as an event, the event log can grow very large over time, resulting in high storage usage and, if left unmanaged, degraded system performance. This can be particularly challenging for high-velocity applications where events arrive quickly and continuously.

As the event log grows, replaying events to reconstruct the current state can also become inefficient. Snapshots help by capturing the state at intermediate points, but managing snapshots and keeping them up to date adds complexity of its own.

Lastly, event sourcing may not be justified where simple create, read, update, and delete operations are adequate. For simpler cases where the focus is on the current state of the application rather than on the history of changes, the cost of implementing event sourcing may outweigh its benefits.

Event streaming

A common event sourcing use case is to enable event or data streaming. The events that are stored can be pushed into an event-streaming platform like Apache Kafka where they can be consumed downstream.

These systems provide a number of benefits including better scalability, enhanced resiliency, and increased autonomy of event producers and consumers.

However, don’t assume that building an event streaming system requires event sourcing. There are other techniques for building event streaming systems, such as the transactional outbox pattern or the listen-to-yourself pattern.

Furthermore, it is a mistake to assume that every event stored in an event-sourced system is also emitted to Kafka. In reality, the events that are emitted to Kafka can be quite different from what has been stored. The stored events are often called domain events and represent an internal implementation detail. Meanwhile the emitted events are often called integration events and represent a public-facing API.

It is quite common to have a translation step that converts the domain events into their corresponding integration events. This allows the application to evolve internally without impacting the public API.
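A translation step might look like the sketch below. Everything specific here is hypothetical: the `MoneyWithdrawn` and `AuditNoteAdded` domain events, the `AccountDebited` integration event name, and the payload fields. The sketch stops at building the JSON payload and does not call any Kafka client.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class MoneyWithdrawn:
    """Domain event: internal, free to change with the implementation."""
    account_id: str
    amount_cents: int
    teller_id: str  # internal detail that should not leak to consumers
    occurred_at: datetime


@dataclass(frozen=True)
class AuditNoteAdded:
    """Domain event with no public counterpart in this sketch."""
    account_id: str
    note: str


def to_integration_event(event) -> Optional[dict]:
    """Translate a domain event into its public form, or None if not published."""
    if isinstance(event, MoneyWithdrawn):
        return {
            "type": "AccountDebited",  # hypothetical public event name
            "version": 1,
            "accountId": event.account_id,
            "amount": f"{event.amount_cents / 100:.2f}",
            "occurredAt": event.occurred_at.isoformat(),
        }
    return None  # not every stored event is emitted downstream


domain_event = MoneyWithdrawn(
    "acc-1", 2_500, "teller-42", datetime(2024, 1, 5, 12, 0, tzinfo=timezone.utc)
)
payload = to_integration_event(domain_event)
message = json.dumps(payload)  # what a producer would send downstream
unpublished = to_integration_event(AuditNoteAdded("acc-1", "ID verified"))
```

Keeping the mapping in one function means internal fields such as `teller_id` can be renamed or removed without changing the public contract.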

How Can Confluent Help?

Confluent provides capabilities to ingest events from other systems through a wealth of custom or pre-built Kafka connectors. The events can be processed as real-time data streams where they are transformed and enriched for downstream consumption. As the number of streams increases, the governance features offered by Confluent Cloud allow developers to manage those event streams and ensure they are accessible, easy to use, and secure.