Join us at Current New Orleans! Save $500 with early bird pricing until August 15 | Register Now

What is a Database? Database Types, Examples, and Use Cases

A database is an organized collection of information that is stored electronically to be maintained, accessed, and analyzed efficiently. It can store various types of data, including text, numbers, images, videos, and files.

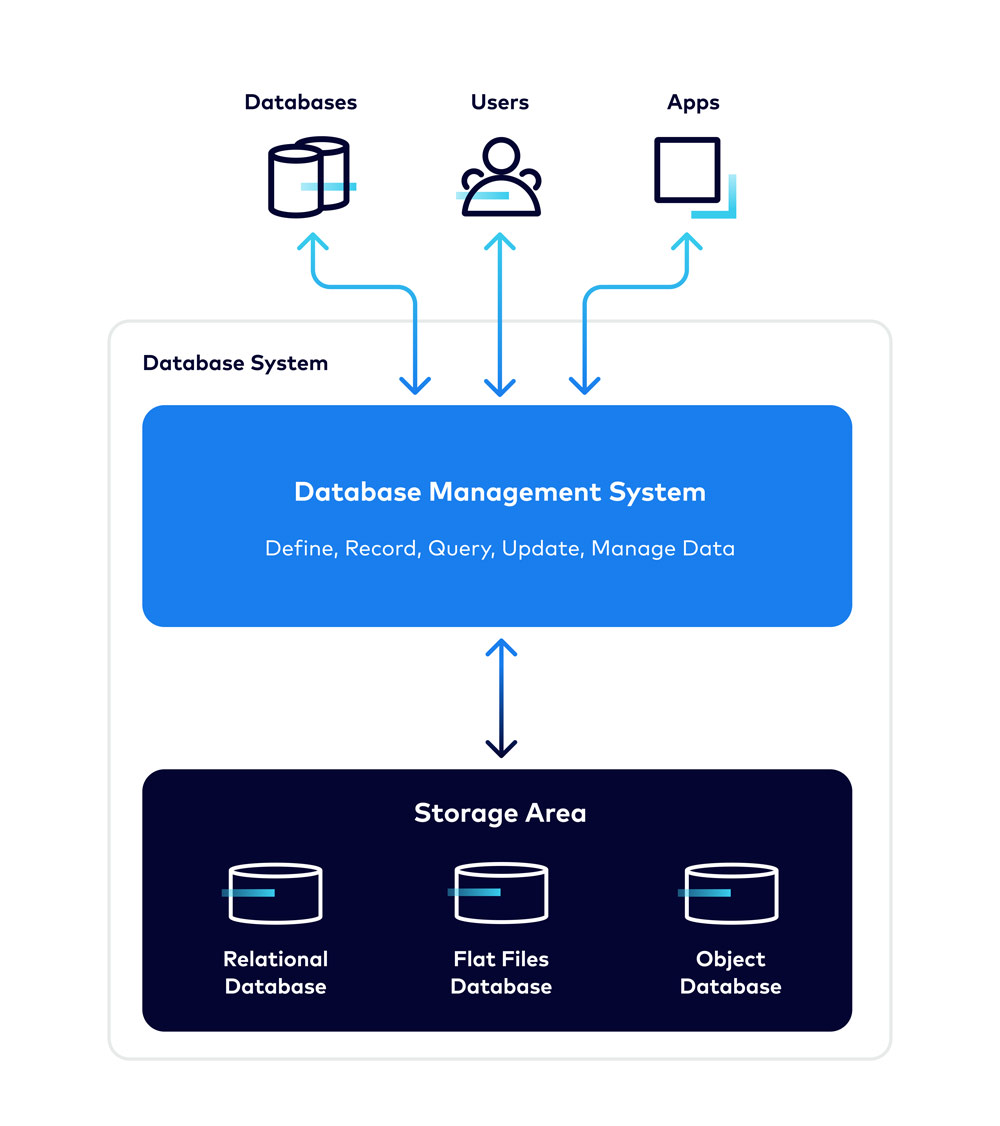

A Database Management System (DBMS) is the software used to manage and interact with the database, enabling users to store, retrieve, and edit data. The combination of the DBMS and the data it manages is often referred to as a “database system,” or simply a “database.”

What is a DBMS? (Database Management System)

A Database Management System (DBMS) is software that allows developers to create, modify, retrieve, and maintain information in a database. Database administrators (DBAs) use DBMSes to control users’ access to the database and perform security auditing functions. DBMSes can provide a wide range of capabilities, including:

Storing, retrieving, and modifying data (with efficient data handling and management)

Managing access through Access Control Lists (ACL) and Role-Based Access Control (RBAC)

Safeguarding against data loss by simplifying data backups and snapshots processes along with providing recovery tools to fully or partially restore databases

Continuously monitoring the database to automatically tune performance or alert developers and administrators to take recommended actions

History of databases

The database as we know it today dates back to the 1960s when the use of computers became popular. Below are some of the main milestones in the history of databases.

SQL in 1970s:

In the 1970s, IBM computer scientist Edgar Codd published his paper “A Relational Model of Data for Large Shared Data Banks.” This paper coined the term “relational database” and established a new way to store and access data.

Following Codd’s paper, Michael Stonebraker and Eugene Wong at the University of California in Berkeley created INGRES (Interactive Graphics and Retrieval System). INGRES was a relational database model that used QUEL query language. IBM released their version of a relational database called System R that used Structured Query Language (SQL) in 1974.

RDBMS in 1980s:

Relational databases grew in popularity during the 1980s, and SQL became the standard language for querying and managing the data. Database Management Systems (DBMSes) became essential tools for handling data storage, retrieval, and security for multiple users.

Internet in 1990s:

The rise of the internet in the 1990s fueled the next round of growth in the database industry. The Relational Database Management System (RDBMS) model, designed to manage the data of a single organization, wasn’t prepared to handle the volume of data that web applications were generating. Furthermore, with the decline in performance and increase in maintenance costs, developers looked for a new solution, and found MySQL, an open-source relational database.

This period also saw the need to organize data more efficiently, leading to advancements in database architecture and the management of structured and unstructured data.

NoSQL in 2000s:

NoSQL (“not only structured query language”) was initially coined in 1998 and referred to databases that used query languages other than SQL. However, as the internet continued to grow, there was a need for a new kind of database that could store unstructured and semi-structured data. This led to the emergence of NoSQL databases, which became popular due to their speed and flexibility in handling large amounts of unstructured data.

NoSQL databases support different data models, including document, key-value, graph, and column-family. They also provide solutions for modern applications that require scalability and fast access to data.

Today:

In recent years organizations have increasingly been adopting cloud-native and purpose-built databases. They are moving away from on-premises and legacy databases to cloud-native databases to improve agility, scalability, and decrease total cost of ownership.

Modern databases now support hybrid cloud computing platforms and integrated data stores with both structured and unstructured data. These advancements help manage distributed data across multiple users and systems. They also ensure data security and compliance.

Learn more how Confluent can help you with your database modernization journey by visiting our solution page.

What is SQL (Structured Query Language)?

SQL is a programming language that is used to communicate with relational databases. The American National Standards Institute (ANSI) has considered SQL the standard language for relational database management systems. SQL statements are used to add, remove, modify, and query data, and they can also be used to grant permissions to users or roles. Popular RDBMSes that use SQL are Oracle, Microsoft SQL Server, IBM, MySQL, PostgreSQL, Microsoft Access, Ingres, and more.

Types of databases

There are various types of databases that are designed and built for different purposes. When choosing a database, it’s important to consider how the data will be used, so that you choose the best database for your use case.

Relational database

A relational database is an organized collection of structured data that have a predefined relationship among them. In this database, rows (tuples) and columns (attributes) are used to store data, which together constitute a table. Each row in the database has a primary key, which is a unique identifier that distinguishes it from other rows in that table. The primary key of one row could be stored as foreign key in another row in a different table to indicate the relationship between two tables. Relational databases are an ideal solution for when data is structured and has a predefined schema.

Relational Database Characteristics

SQL: SQL is used to store, manipulate, and manage data in a relational database.

Transactions: A database transaction consists of one or more SQL statements and is considered a single unit of work that either is completed as a whole or not at all. In the relational database world, the result of a transaction is either COMMITTED to the database or it’s a ROLLBACK.

ACID Compliance: Relational databases are primarily optimized for transaction operations. And in order to ensure data integrity, all transactions must be ACID compliant. ACID refers to Atomicity, Consistency, Isolation, and Durability.

Atomicity

Means that the data operation will finish successfully or unsuccessfully. "All or nothing" is the directive principle here.

Consistency

Ensures that a transaction (whether it completes successfully or aborted) doesn’t invalidate the database’s state. Meaning the data that is written to the database can only bring the database from one valid state to another.

Isolation

Multiple transactions can be executed simultaneously and in parallel on a single table. Isolation is how relational databases can maintain data consistency while concurrent transactions are executed. Isolation ensures that concurrent execution of transactions leaves the database in the same state as if all those transactions were executed sequentially.

Durability

Durability guarantees that once the transaction is completed and data is committed to the database, the changes are permanent and written to a non-volatile memory. This means in case of a power outage or any system failure the state of the system is not lost.

NoSQL database

To simplify, NoSQL refers to any database that doesn't use SQL as its primary data access language. NoSQL databases rose in popularity among developers in the late 2000s when the Internet was on the rise and storage cost was decreasing significantly. Developers didn’t need to define complex data models. Rather, they had the flexibility to store any structured, semi-structured, or unstructured data. Each kind of NoSQL database has its own set of unique capabilities but overall they can be summarized as having:

Flexible schemas: Unlike their relational counterparts, NoSQL databases don’t require a schema in order to store data. Developers have the flexibility to store huge amounts of data and adapt quickly as application requirements change.

Ease of scalability: NoSQL databases are mostly distributed where several machines (nodes) work together as a single cluster. You can scale up by increasing resources or scale out by adding more nodes to the cluster. They can also replicate data to increase redundancy and improve availability. It’s easier and more cost efficient to scale NoSQL databases than relational ones.

BASE: NoSQL databases often follow a more relaxed consistency model called “BASE” whereas relational databases follow a more restricted one called “ACID.” BASE is the acronym for:

- Basically Available: the system is always available (it’s distributed, so even in the case of failure of some nodes, the other ones are still running) to accept read and write operations, but it might not be consistent. Meaning the write might not persist after conflict resolution, or the data that is read might not be the latest data.

- Soft state: even without application interactions, the state of the system could change because of eventual consistency.

- Eventual consistency: the system will become consistent over time. Once a write operation is completed, the updated value will be replicated to secondary nodes and eventually all nodes that store a copy of that data will have the same value.

Overall, the BASE model favors availability (since scalability is important) over consistency.

There are several types of NoSQL databases but the popular ones are Document-based (MongoDB), Key-value (Redis), Columnar (Apache Cassandra), and Graph (Neo4J).

Key-value database

A key-value database provides the simplest possible data model, as the name suggests. It stores information as a “key-value” pair. The key serves as a unique identifier, and is used to retrieve the associated value. Both keys and values can be simple types like strings and numbers, or complex objects. Key-value data stores are easy to use, highly scalable, and have low latency. Leaderboards, session stores, shopping carts, and caching are among popular use cases for this type of database. Redis, Amazon DynamoDB, and RocksDB are examples of key-value databases.

Document database

Document-based databases are designed to store semi-structured data in JSON-like objects. Each document has a unique identifier called a “key” that is used to retrieve data from the database. The values can be of any type, from strings and numbers, to arrays and objects. Unlike key-value stores, document-based databases support secondary indexes, which can be used to retrieve data. Catalogs, mobile applications, and content management systems are among the most popular use cases for document databases. MongoDB and Apache CouchDB are examples of this type of database.

Columnar database

Columnar databases, also known as “column-oriented databases,” store data in columns rather than rows. Columnar databases optimize data for aggregate functions and operate on columns of data by storing the same type of data in one column. You can easily add more columns to the table without affecting other columns, which makes access to data very efficient, since querying runs on a subset of columns instead of scanning the entire table. Let’s say we want to quickly calculate average GPAs grouped by grade level. The GPA and Grade columns can be scanned very quickly to compute the averages because the data is stored on disk in a columnar fashion.

Data warehousing, business intelligence (BI), and big data processing are popular use cases for columnar or column-oriented databases. Apache Cassandra is an example of a columnar database.

Graph database

Graph database (GDB) is a type of NoSQL database that is based on graph theory. A graph database stores entities as nodes and relationships between them as edges. To find the relationship between two nodes, you traverse along the edges, and since relationships or edges are persisted in the database and not calculated at the query time, the traversal is very quick. Graph databases are the perfect solution when you want to create or analyze relationships such as fraud detection, social networking, and recommendation engines. Neo4J is an example of a graph database.

Data warehouse

A data warehouse is a central repository for all the data coming from disparate sources. They allow organizations to store historical and current data in a single location for the purpose of creating analytical reports. A data warehouse typically contains databases to store data, ETL tools to prepare data for analysis, along with statistical analysis and reporting capabilities, dashboards, and visualization tools.

Cloud database

A cloud database is a database that is deployed on a public or private cloud. It can be any type of database (relational or NoSQL) and can be offered either as a self-managed or fully-managed service. Cloud databases can help organizations to reduce total cost of ownership, improve reliability and resiliency, and have faster time to market for new use cases.

Distributed database

In a distributed database, data is stored across multiple machines (physical or virtual) that are located in different (physical or logical) locations and connected through a network (intranet or internet). Network latency is often the biggest component in web application response time. This means the farther the end user is from a data center, the longer it takes for the application to respond to their actions. Distributed databases can help improve end user experience since they can be deployed in different geographical locations hence decreasing network latency.

Streaming database

Relational and NoSQL databases were designed to store the current state of the world in the form of tables, and to answer questions about this state via point-in-time queries against the tables, e.g., “What is Alice’s account balance right now?”. In other words, there is no way to determine from a table alone how that state originated—think: “How did we get here?” This is where streams come into play. Event streams model the world around us. By storing individual data records (events) non-destructively as an ordered sequence, they provide a detailed historical view of what happened. Here’s an analogy to playing chess: If you have a recording of all the moves on the chessboard, it is straightforward to know what the current state of the board is. You can also easily rewind the time, using the recorded stream of moves, to know all of the historical states of the board.

To work with data in our systems we need both streams and tables. But so far, technologies have supported one or the other, forcing a wedge between what are two naturally complementary ways of handling data. We could either have state, or we could have events, despite the many benefits of being able to use the semantics of both in one place.

That’s why Confluent developed ksqlDB, the database purpose-built for stream processing applications. ksqlDB offers a single solution for collecting streams of data, enriching them, and serving queries on newly derived streams and tables. That means less infrastructure to deploy, maintain, scale, and secure. With less moving parts in your data architecture, you can focus on what really matters—innovation.

What is ksqlDB?

ksqlDB is a database that's purpose-built for stream processing applications. It consolidates the many components found in virtually every stream processing architecture.

That is important because almost all streaming architectures today are piecemeal solutions cobbled together from different projects. At a minimum, you need a subsystem to acquire events from existing data sources, another to store them, another to process them, and another to serve queries against aggregated materializations. Integrating each subsystem can be difficult. Each has its own mental model. And it’s easy to wonder, given all this complexity: Is this all worth it?

With ksqlDB, the complex architecture once required to build stream processing applications is reduced to two components: ksqlDB and Kafka. Rather than needing to work across several complex systems, enterprises can leverage ksqlDB to unlock real-time business insights and rich customer experiences with just a few SQL statements. With fewer moving parts in the underlying architecture, developers can more easily build stream processing applications and get them to market faster, while platform operators can scale, monitor, and secure their architecture with a more uniform approach. Perhaps best of all, ksqlDB and Kafka are offered as a fully managed service on Confluent Cloud, enabling you to entirely offload the management of your stream processing architecture.