
Project Metamorphosis Month 1: Elastic Apache Kafka Clusters in Confluent Cloud

A few weeks ago when we talked about our new fundraising, we also announced we’d be kicking off Project Metamorphosis.

What is Project Metamorphosis?

Let me try to explain. I think there are two big shifts happening in the world of data right now, and Project Metamorphosis is an attempt to bring those two things together.

The first one, and the one that Confluent is known for, is the move to event streaming.

Event streams are a real revolution in how we think about and use data, and we think they are going to be at the core of one of the most important data platforms in a modern company. Our goal at Confluent is to build the infrastructure that makes that possible and help the world take advantage of it. That’s why we exist.

But event streaming isn’t the only paradigm shift we’re in the midst of. The other change comes from the movement to the cloud.

I remember spinning up my first EC2 instance in 2008; it was kind of magical. Even then, it was obvious that being able to get computational capacity, data storage, and other core foundational ingredients on demand, and just pay for what you used, was going to be a big deal. But the second-order transformation this has had on how data systems are built, and how we consume them, is even bigger than I would have thought.

We expect a lot more from our data systems than we ever did in the past. A modern cloud data system should abstract away implementation details like servers, and let us scale transparently, across datacenters around the world.

If we’re honest, although these two things are both happening in the world, they aren’t necessarily happening together. Most people’s experience of event streaming is with Apache Kafka®, and Kafka is, well, not very “cloudy.” It involves configuring ZooKeeper, managing data placement, tuning garbage collection, and fundamentally managing a lot of individual servers.

It’s time to fundamentally reimagine what event streaming can be in the cloud and provide a seamless experience without all the incidental complexity and manual labor. That’s what we aim to do with Project Metamorphosis.

Systems built for the cloud are different in foundational ways, and the experience of using these systems is simply much, much better. We want to bring that to people’s experience of Kafka. Over the course of the rest of the year we’ll be announcing a set of product features and improvements to accomplish this goal. We’ve organized our announcements around what we think are the eight foundational traits of cloud-native data systems. We’ll do an announcement for each of these traits, one per month for the next eight months. Each of these traits on its own can seem like a small thing, but taken together, we think this is a real transformation.

Elasticity

The trait we’re kicking this off with is arguably one of the most important in the cloud, and that is elasticity.

In the cloud, we expect data systems to be seamlessly and transparently elastic. They should scale up when we need them and scale down when we don’t, and since we don’t know exactly what we need ahead of time, we should just pay for what we need and use.

This is particularly important right now, as we’re living in uncertain times. We’ve seen our customers need to scale up significantly to meet growing demand, or scale down to manage cost, as the digital side of their business suddenly becomes their primary (or only) form of customer interaction.

Confluent Cloud has had world-class elasticity and an easy-to-understand usage model for some time in our lower-tier products. Our Basic and Standard offerings allow instant and elastic scalability up to 100MB per second and can scale down to zero with no pre-configured infrastructure you have to manage.

We have a set of announcements that take this even further.

Usage-based billing

First, we’re moving all our products to a single usage-based model. Previously, our Dedicated clusters, which scale to support multi-GB-scale throughput for large-scale workloads, were bought standalone with a fixed size, and expanding them required a separate transaction. Now all customers have a usage-based billing model, and customers with committed spend can provision any number of Dedicated clusters with just a few clicks.

This dynamic model makes it really easy to spin up new clusters for testing, spin them down when no longer needed, and not have to pre-plan all usage ahead of time.

All customers on this new usage model can now also add on usage of other capabilities such as ksqlDB and our connector ecosystem dynamically in their account.

If you have purchased clusters on the old model, we’re happy to convert you over at any time. Just reach out to your sales rep.

Elastic, self-balancing Kafka clusters

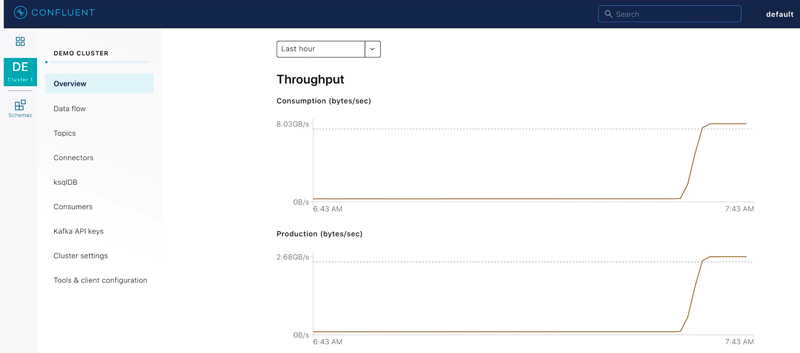

Today, we’re also announcing that our Dedicated cluster tier now supports self-service expansion and automatic data balancing.

You can now scale up your Kafka clusters at any time with a few clicks.

Automatic data balancing means that all Confluent Cloud Kafka clusters continually optimize data placement to balance load. This ensures that newly provisioned capacity is used immediately and that skewed load profiles are seamlessly balanced away.
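To make the idea of balancing concrete, here is a minimal sketch of a greedy rebalancer. It is an illustration only and not how Confluent Cloud actually balances data: the `rebalance` function, the `tolerance` parameter, and the broker and partition names are all hypothetical, and a real balancer also has to account for replicas, leaders, disk, network, and the cost of moving data.

```python
from typing import Dict, List, Tuple

# Hypothetical model: broker name -> {partition name: load (e.g. MB/s)}
Assignment = Dict[str, Dict[str, float]]


def rebalance(assignment: Assignment, tolerance: float = 0.1) -> List[Tuple[str, str, str]]:
    """Greedily move partitions from the most loaded broker to the least loaded one.

    Stops when the gap between those two brokers is within `tolerance` times
    the mean broker load, or when no single move would narrow the gap.
    Mutates `assignment` and returns the moves as (partition, source, destination).
    """
    moves: List[Tuple[str, str, str]] = []
    while True:
        loads = {broker: sum(parts.values()) for broker, parts in assignment.items()}
        src = max(loads, key=loads.get)
        dst = min(loads, key=loads.get)
        gap = loads[src] - loads[dst]
        mean = sum(loads.values()) / len(loads)
        if mean == 0 or gap <= tolerance * mean:
            break  # balanced enough
        # Only partitions lighter than the gap make the two brokers more even.
        candidates = [(p, load) for p, load in assignment[src].items() if 0 < load < gap]
        if not candidates:
            break  # nothing left that would help
        # Prefer the partition whose load is closest to half the gap.
        part, load = min(candidates, key=lambda item: abs(item[1] - gap / 2))
        del assignment[src][part]
        assignment[dst][part] = load
        moves.append((part, src, dst))
    return moves
```

For example, with an assumed cluster where `b1` holds four partitions of load 10, `b2` holds two, and `b3` has just been added and is empty, the sketch moves two partitions from `b1` to `b3`, leaving every broker at a load of 20. That is the behavior described above: new capacity gets used right away, and skew is balanced away.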

We’re bringing this elastic experience to more than just Kafka—we want it to cover the full ecosystem. Our offering of Kafka Connect, which gives you access to fully managed connectors, already scales elastically, allowing you to dynamically add and remove connectors and change capacity as needed. We will be doing the same thing with our offering of ksqlDB, where we’re working to allow you to scale up and down processing capacity for your queries, as they run, without needing to pause or restart them.

We aren’t stopping there. We don’t just want these to be scalable, we want the scaling to be done for you. Over the course of this year, we’ll be working on making these systems autoscale, so they can automatically expand and contract to meet your needs without the need for manual intervention.

On Prem

These capabilities aren’t just for Confluent Cloud, either.

In our next major release of Confluent Platform, we will support automatic data balancing and placement optimization within Kafka clusters. When combined with Confluent Operator, which supports operations on Kubernetes, you can get similar cloud-like elasticity in private cloud and on-prem environments, allowing you to dynamically launch and expand clusters on demand.

Improvements in Apache Kafka

We’re also working on contributions to better support elastic usage patterns in Apache Kafka itself. KIP-500 is an effort to remove Kafka’s biggest bottleneck: its dependency on a legacy component, ZooKeeper. Significant work has gone into this effort, including a detailed design and prototype for the new Kafka-native Raft protocol that will maintain Kafka’s metadata in Kafka itself. With these changes, it will be possible to dramatically scale up the number of partitions and topics Kafka can support in a single cluster. In fact, we at Confluent have set a goal of contributing a working ZooKeeper-free Kafka that can scale to millions of partitions by the end of the year. We are working closely with the broader Kafka community to achieve this goal.

This also significantly simplifies operations for those self-managing Kafka by making it a single self-contained deployment without multiple tiers, each using different configurations and security that all have to be tuned and managed independently.

Give it a try

Go give it a try yourself with a free trial and get started with many of these features on Confluent Cloud. Use the promo code CL60BLOG to get an additional $60 of free Confluent Cloud usage.*

To learn more, also check out future demos on the Elastic page dedicated to this announcement.
