Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture (CDC) is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Protect Your Data With Self-Managed Keys (BYOK) Enhancements

Discover how Confluent’s BYOK for Enterprise Clusters and support for EKMs enhance security and support efficient data streaming in our latest blog.

Streaming Data Fuels Real-time AI & Analytics: Connect with Confluent Q1 Program Entrants

This is the Q1 2025 Connect with Confluent announcement blog—a quarterly installment introducing new entrants into the Connect with Confluent technology partner program. Every blog has a new theme, and this quarter’s focus is on powering real-time AI and analytics with streaming data.

Flink AI: Hands-On VECTOR_SEARCH()—Search a Vector Database with Confluent Cloud for Apache Flink®

An introduction to Flink SQL VECTOR_SEARCH() on Confluent Cloud. VECTOR_SEARCH(), along with ML_PREDICT(), enables developers to execute GenAI use cases with data streaming technologies.

Cluster Linking for Azure Private Link is Now Available in Confluent Cloud

Confluent Cloud now supports Cluster Linking for Azure Private Link, enabling secure data replication between Kafka clusters in private Azure environments. With Cluster Linking, organizations can achieve real-time data movement, disaster recovery, migrations, and secure data sharing.

Your AI Project Has a Data Liberation Problem

Most AI projects fail not because of bad models, but because of bad data. Siloed, stale, and locked in batch pipelines, enterprise data isn’t AI-ready. This post breaks down the data liberation problem and how streaming solves it—freeing real-time data so AI can actually deliver value.

Managing Data Contracts: Helping Developers Codify “Shift Left”

The concept of “shift left” in building data pipelines involves applying stream governance close to the source of events. Let’s discuss some tools (like Terraform and Gradle) and practices used by data streaming engineers to build and maintain those data contracts.

Building AI Agents and Copilots with Confluent, Airy, and Apache Flink

Airy helps developers build copilots as a new interface to explore and work with streaming data – turning natural language into Flink jobs that act as agents.

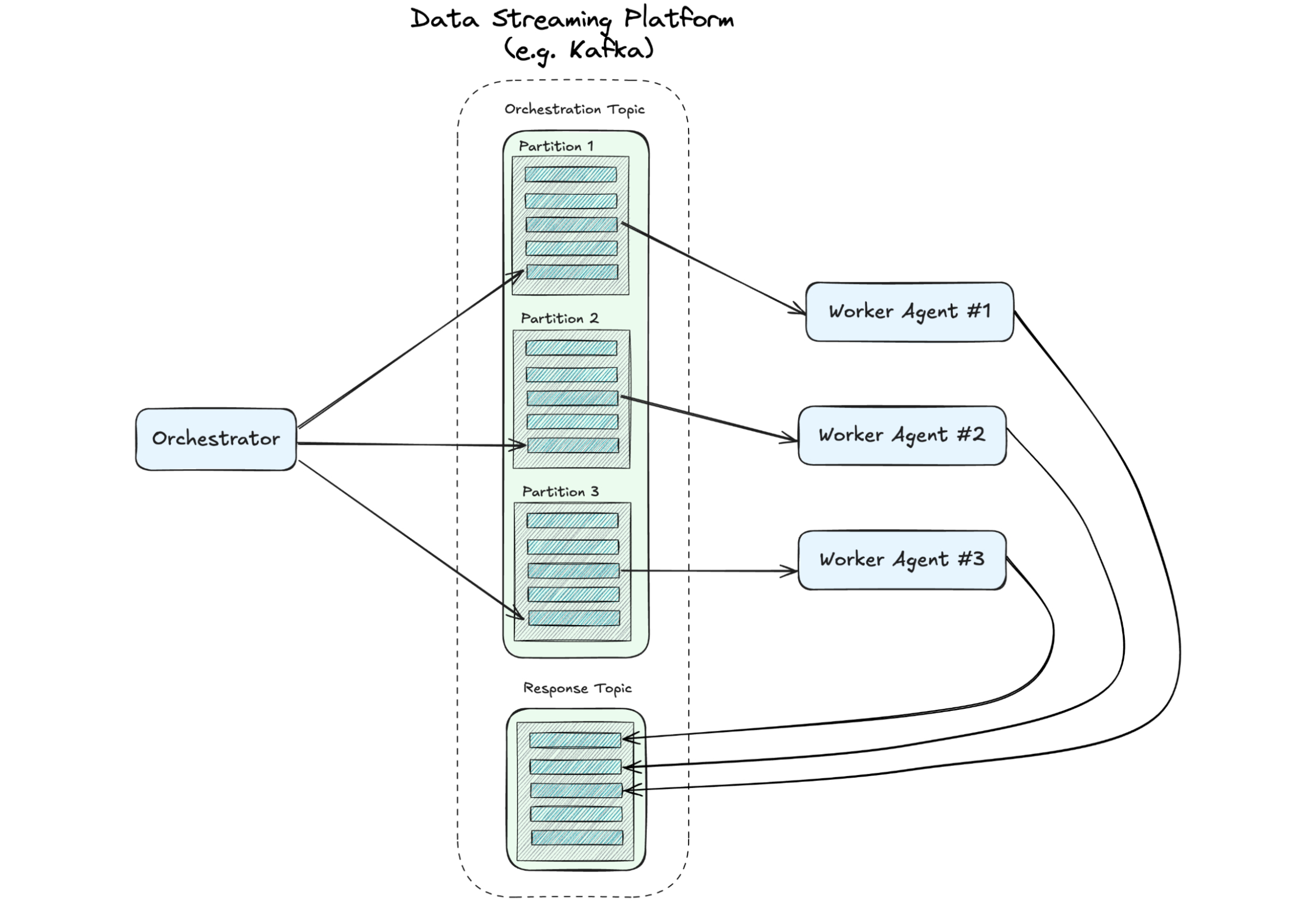

A Distributed State of Mind: Event-Driven Multi-Agent Systems

This article explores how event-driven design—a proven approach in microservices—can address the chaos, creating scalable, efficient multi-agent systems. If you’re leading teams toward the future of AI, understanding these patterns is critical. We’ll demonstrate how they can be implemented.

How Real-Time Data Streaming with GenAI Accelerates Singapore’s Smart Nation Vision

Real-time data streaming and GenAI are advancing Singapore's Smart Nation vision. As AI adoption grows, challenges from data silos to legacy infrastructure can slow progress, but Confluent, through IMDA's Tech Acceleration Lab, is helping organizations overcome hurdles and develop smarter citizen services.

Using Apache Flink® for Model Inference: A Guide for Real-Time AI Applications

Learn how Flink lets developers connect real-time data to external models through remote inference, enabling seamless coordination between data processing and AI/ML workflows.

Building High Throughput Apache Kafka Applications with Confluent and Provisioned Mode for AWS Lambda Event Source Mapping (ESM)

Learn how to use the recently launched Provisioned Mode for Lambda’s Kafka ESM to build high throughput Kafka applications with Confluent Cloud’s Kafka platform. This blog also walks through a sample scenario to activate and test the Provisioned Mode for ESM, and outlines best practices.

Motivating Engineers to Solve Data Challenges with a Growth Mindset

Learn how Confluent Champion Suguna motivates her team of engineers to solve complex problems for customers—while challenging herself to keep growing as a manager.

Confluent Cloud for Government Is Now FedRAMP Ready

Confluent achieves FedRAMP Ready status for its Confluent Cloud for Government offering, marking an essential milestone in providing secure data streaming services to government agencies, and showing a commitment to rigorous security standards. This certification marks a key step towards full...

Bridging the Data Divide: How Confluent and Databricks Are Unlocking Real-Time AI

An expanded partnership between Confluent and Databricks will dramatically simplify the integration between analytical and operational systems, so enterprises spend less time fussing over siloed data and governance and more time creating value for their customers.