
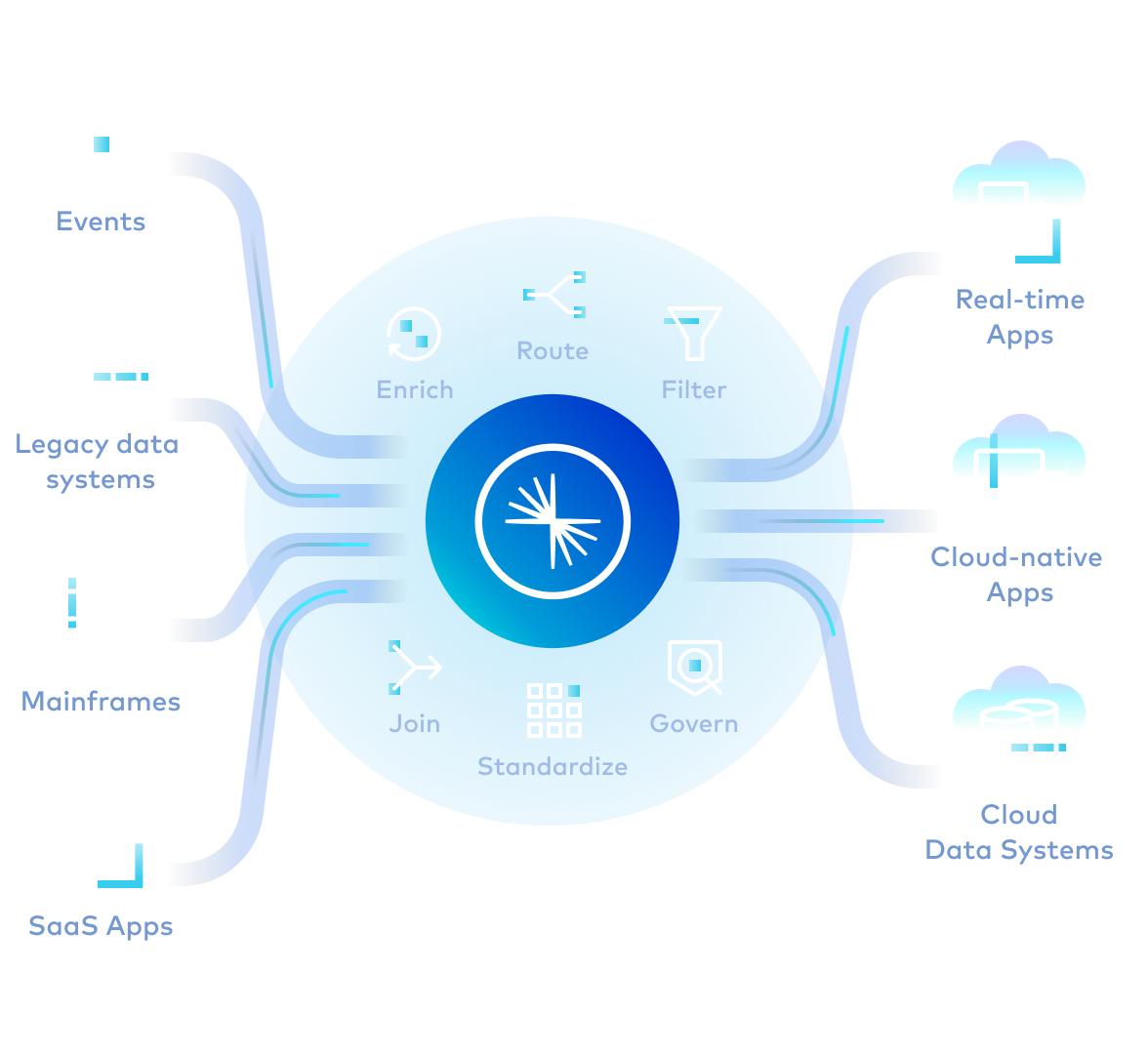

Streaming Data Pipelines

Get your data to the right place, in the right format, at the right time to build data products faster and unlock endless use cases.

Data Pipelines Done Right

Enable instant decision-making and agile development with uninterrupted streaming, continuous processing and self-service access to governed data.

Streaming

Use continuously flowing and evolving high-fidelity real-time data for all your use cases

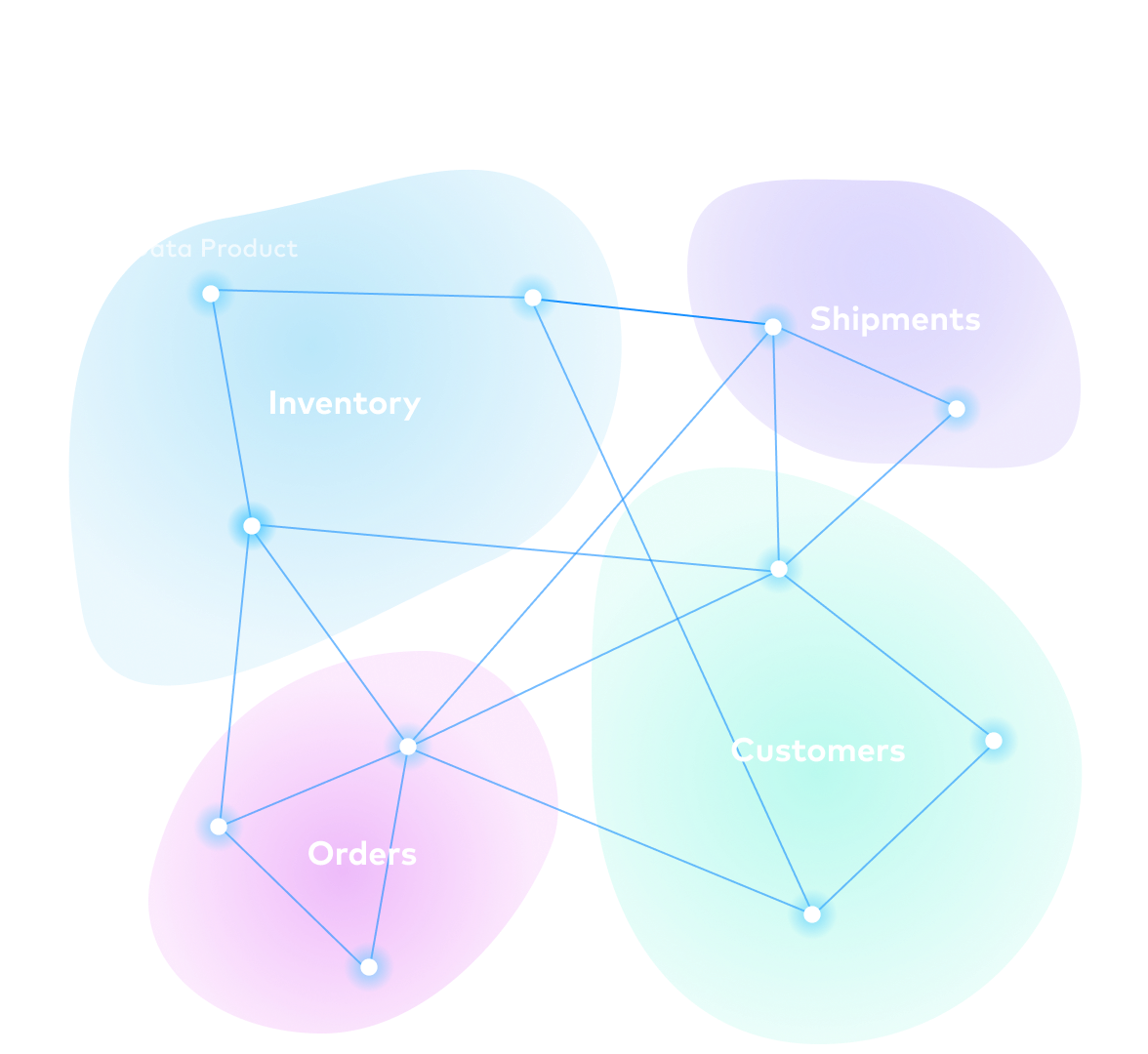

Decentralized

Allow teams closest to the data to create and share data streams across the enterprise

Declarative

Separate data flow and processing logic to optimize cost and performance at scale

Developer-oriented

Bring software delivery practices to pipelines to experiment, test and deploy with agility

Governed

Balance self-service access with security and compliance rules by governing data end to end

Why Confluent

Build modern data flows to promote data reusability, engineering agility and greater collaboration, so more teams can use well-formed data to unlock its full potential.

Bridge the data divide

Power all your operational, analytical and SaaS use cases with high-quality real-time data streams

Speed up time to market

Maintain data contracts and enable self-service search and discovery of trustworthy data products

Build for performance and scale

Express data flow logic and let the infrastructure flex automatically to process data at scale

Develop with agility

Easily iterate, evolve and reuse data flows with DevOps toolchain integrations and an open platform

Maintain trust and compliance

Track where your data goes, how it got there, and who has access to it with end-to-end governance

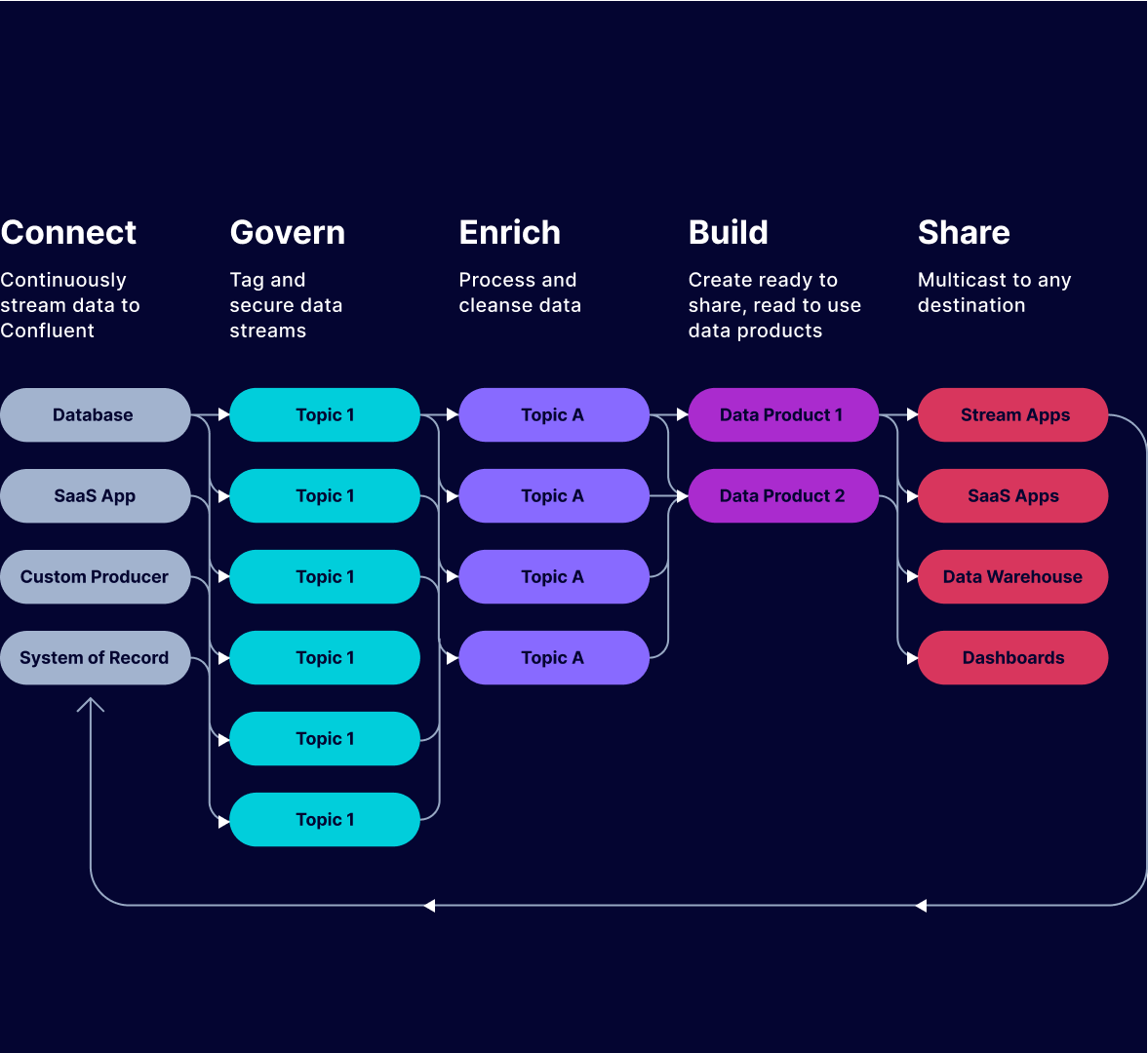

Fast and Easy Steps to Build Streaming Data Pipelines

Set up your data pipeline in minutes. Simplify the way you build real-time data flows and share your data everywhere.

1. Connect

Create and manage data flows with an easy-to-use UI and pre-built connectors

2. Govern

Centrally manage, tag, audit and apply policies for trusted high-quality data streams

3. Enrich

Use SQL to combine, aggregate, clean, process and shape data in real time

4. Build

Prepare well-formatted, trustworthy data products for downstream systems and apps

5. Share

Securely collaborate on live streams with self-service data discovery and sharing
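
To make the five steps concrete, here is a minimal sketch of the same flow in plain Python, using only the standard library. It is an illustration under stated assumptions, not Confluent's API: the function names, the `ORDER_CONTRACT` data contract and the `CUSTOMER_REGION` lookup table are all hypothetical, and an in-memory list stands in for a real source connector. On the platform itself, the Enrich step would be written in SQL rather than Python.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List

# Hypothetical data contract: required field name -> expected type.
ORDER_CONTRACT: Dict[str, type] = {"order_id": str, "customer_id": str, "amount": float}

# Hypothetical reference data used to enrich each event.
CUSTOMER_REGION: Dict[str, str] = {"c1": "EU", "c2": "US"}


def connect(raw_events: Iterable[dict]) -> Iterator[dict]:
    """1. Connect: stand-in for a source connector; yields events one at a time."""
    for event in raw_events:
        yield event


def govern(events: Iterable[dict], contract: Dict[str, type]) -> Iterator[dict]:
    """2. Govern: pass through only events that satisfy the data contract."""
    for event in events:
        if all(isinstance(event.get(name), typ) for name, typ in contract.items()):
            yield event


def enrich(events: Iterable[dict], regions: Dict[str, str]) -> Iterator[dict]:
    """3. Enrich: join each event with reference data (a lookup join)."""
    for event in events:
        yield {**event, "region": regions.get(event["customer_id"], "UNKNOWN")}


def build(events: Iterable[dict]) -> Iterator[dict]:
    """4. Build: shape a data product, here running revenue per region."""
    totals: Dict[str, float] = defaultdict(float)
    for event in events:
        totals[event["region"]] += event["amount"]
        # Emit the updated total after every event, as a continuous query would.
        yield {"region": event["region"], "revenue": totals[event["region"]]}


def run_pipeline(raw_events: Iterable[dict]) -> List[dict]:
    """5. Share: hand the finished stream to consumers (collected in a list here)."""
    governed = govern(connect(raw_events), ORDER_CONTRACT)
    return list(build(enrich(governed, CUSTOMER_REGION)))
```

Each stage is a generator, so an event flows through all five steps before the next one is read, which loosely mirrors continuous processing. Events that violate the contract are dropped in this sketch; a real pipeline would more likely route them to a separate stream for inspection.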