Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

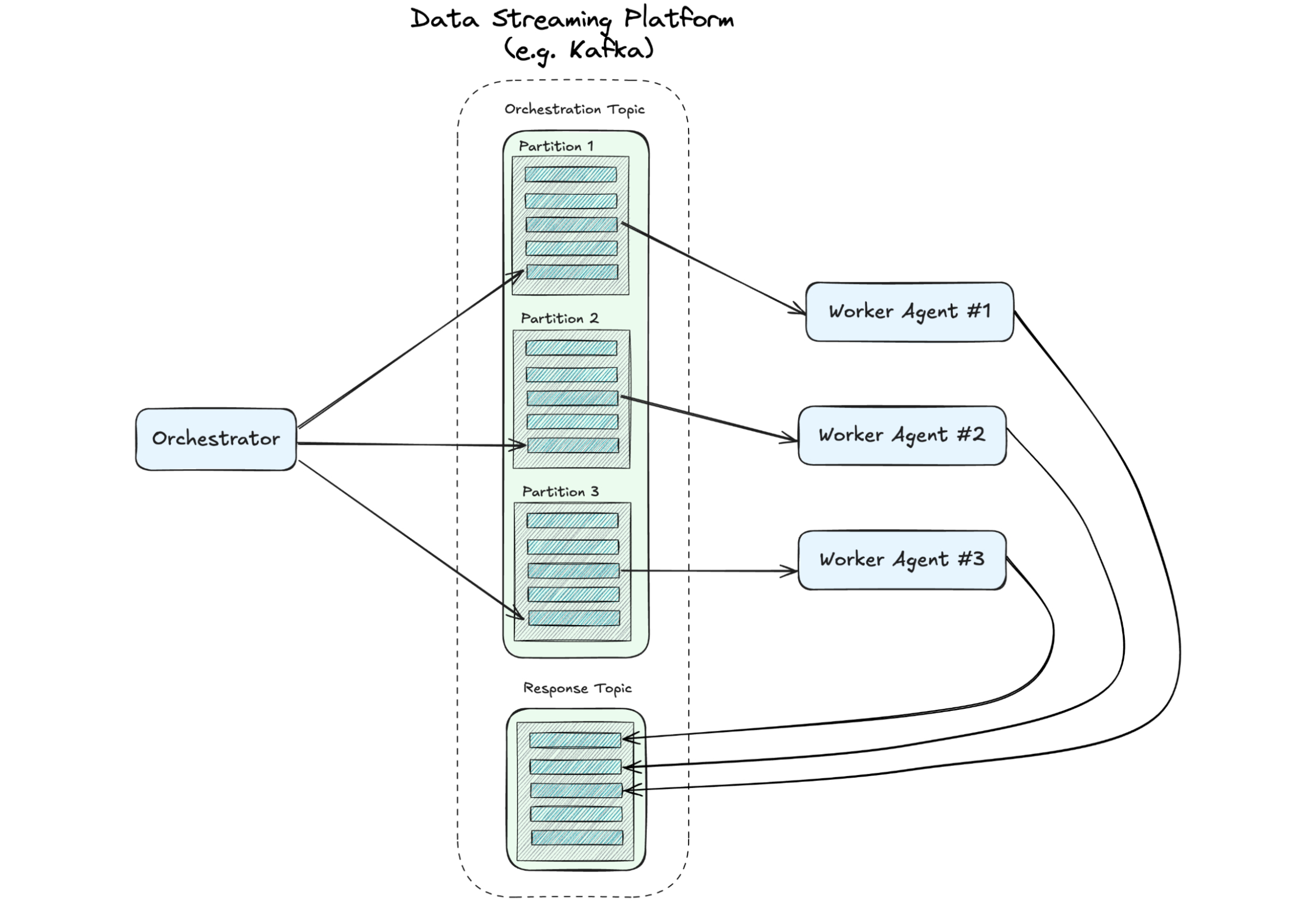

A Distributed State of Mind: Event-Driven Multi-Agent Systems

This article explores how event-driven design—a proven approach in microservices—can address the chaos, creating scalable, efficient multi-agent systems. If you’re leading teams toward the future of AI, understanding these patterns is critical. We’ll demonstrate how they can be implemented.

Using Apache Flink® for Model Inference: A Guide for Real-Time AI Applications

Learn how Flink enables developers to connect real-time data to external models through remote inference, enabling seamless coordination between data processing and AI/ML workflows.

Building High Throughput Apache Kafka Applications with Confluent and Provisioned Mode for AWS Lambda Event Source Mapping (ESM)

Learn how to use the recently launched Provisioned Mode for Lambda’s Kafka ESM to build high throughput Kafka applications with Confluent Cloud’s Kafka platform. This blog also walks through a sample scenario for activating and testing Provisioned Mode for ESM, and outlines best practices.

Automating Podcast Promotion with AI and Event-Driven Design

We built an AI-powered tool to automate LinkedIn post creation for podcasts, using Kafka, Flink, and OpenAI models. With an event-driven design, it’s scalable, modular, and future-proof. Learn how this system works and explore the code on GitHub in our latest blog.

Revolutionizing Failure Management in Apache Flink: Meet FLIP-304's Pluggable Failure Enrichers

FLIP-304 lets you customize and enrich your Flink failure messaging: assign types to failures, emit custom metrics per type, and expose your failure data to other tools.

Agentic AI: The Top 5 Challenges and How to Overcome Them

Before deploying agentic AI, enterprises should be prepared to address several issues that could impact the trustworthiness and security of the system.

Optimize SaaS Integration with Fully Managed HTTP Connectors V2 for Confluent Cloud

Learn how an e-commerce company integrates the data from its Stripe system with the Pinecone vector database using the new fully managed HTTP Source V2 and HTTP Sink V2 Connectors along with Flink AI model inference in Confluent Cloud to enhance its real-time fraud detection.

New Security Tools to Protect Your New Year’s Resolutions

Confluent's advanced security and connectivity features allow you to protect your data and innovate confidently. Features like Mutual TLS (mTLS), Private Link for Schema Registry, and Private Link for Flink not only bolster security but also streamline network architecture and improve performance.

Introducing Real-Time Embeddings: Any Model, Any Vector Database—No Code Needed

Confluent’s Create Embeddings Action for Flink helps you generate vector embeddings from real-time data to create a live semantic layer for your AI workflows.

Event-Driven AI: Building a Research Assistant with Kafka and Flink

The rise of agentic AI has fueled excitement around agents that autonomously perform tasks, make recommendations, and execute complex workflows. This blog post details the design and architecture of PodPrep AI, an AI-powered research assistant that helps the author prepare for podcast interviews.

New in Confluent Cloud: Extending Private Networking Across the Data Streaming Platform

Confluent Cloud 2024 Q4 adds private networking and mTLS authentication, follower fetching, Flink updates, WarpStream features to support migration and governance, and more!

Stop Treating Your LLM Like a Database

GenAI thrives on real-time contextual data: In a modern system, LLMs should be designed to engage, synthesize, and contribute, rather than to simply serve as queryable data stores.

Three AI Trends Developers Need to Know in 2025

Continuing issues with hallucinations, the increasing independence of agentic AI systems, and the growing use of dynamic data sources are three AI trends you may want to monitor in 2025.