Announcing ksqlDB 0.11.0

We’re pleased to announce ksqlDB 0.11.0, which takes a big step forward toward improved production stability. This is becoming increasingly important as companies like Bolt and PushOwl use ksqlDB for mission-critical use cases. This release includes numerous critical bug fixes and a few feature enhancements. We’ll step through the most notable changes, but see the changelog for the complete list.

Stability improvements

ksqlDB 0.11.0 contains improvements and fixes spanning stranded transient queries, overly aggressive schema compatibility checks, confusing behavior around casting nulls, bad schema management, and more. Here, we highlight a couple of additional, notable improvements.

HTTP client caching for inter-node requests

ksqlDB supports horizontal scaling by having multiple ksqlDB nodes coordinate to process data. As such, materialized state for persistent queries may be spread among multiple nodes. When a pull query request is issued, if the receiving ksqlDB server does not host the requested data, the request is forwarded to a node that does. Starting with ksqlDB 0.11.0, the HTTP clients used for such forwarding requests are cached and reused, greatly improving pull query performance in multi-node clusters. More details may be found on GitHub.
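The caching idea can be sketched in a few lines. This is a minimal illustration, not ksqlDB's actual implementation: the class name `ForwardingClientCache`, the host name, and the 5-second timeout are all hypothetical, and the JDK's `java.net.http.HttpClient` stands in for whatever HTTP stack the server really uses. The point is only that a client is built once per target node and then reused for later forwarded requests.

```java
import java.net.http.HttpClient;
import java.time.Duration;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch of per-node client caching; not ksqlDB's own code.
final class ForwardingClientCache {

    // One client per target node, keyed by "host:port".
    private final Map<String, HttpClient> clients = new ConcurrentHashMap<>();

    // Returns the cached client for the node, building it on first use only.
    HttpClient clientFor(String host, int port) {
        return clients.computeIfAbsent(host + ":" + port, key ->
            HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5)) // assumed timeout
                .build());
    }

    // Number of distinct nodes for which a client has been created.
    int size() {
        return clients.size();
    }

    public static void main(String[] args) {
        ForwardingClientCache cache = new ForwardingClientCache();
        HttpClient first = cache.clientFor("ksqldb-node-2", 8088);
        HttpClient second = cache.clientFor("ksqldb-node-2", 8088);
        // Both lookups return the same instance, so no new client is built.
        System.out.println("reused: " + (first == second));
    }
}
```

Without the cache, every forwarded pull query would pay the cost of constructing a fresh client, which is the overhead this release removes.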

Windowed table Apache Kafka® topic retention

As of ksqlDB 0.9.0, windowed aggregations have configurable retention: simply add a RETENTION clause to the SQL query that creates the windowed table. The specified retention controls how long aggregate state for a particular window is available in the materialized state store. Prior to ksqlDB 0.11.0, retention clauses had no impact on the retention of the underlying Kafka topic for the windowed table, which led to confusing behavior; as of ksqlDB 0.11.0, the clause applies to the underlying topic as well. ksqlDB 0.11.0 also fixes a long-standing bug where old windows were not properly expired from the underlying Kafka topic.
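For reference, here is roughly what a statement with a RETENTION clause looks like when assembled as a Java string. The table name `ORDERS_PER_HOUR`, the `ORDER_COUNT` alias, the one-hour window size, and the helper method are assumptions made for illustration; the `ORDERS` stream and `USER_ID` column are borrowed from the Java client examples in this post.

```java
// Illustrative helper that builds a windowed CREATE TABLE statement.
final class WindowedRetentionExample {

    // Builds a tumbling-window aggregation that keeps each window for the
    // given number of days. Table and alias names are hypothetical.
    static String createStatement(int retentionDays) {
        return "CREATE TABLE ORDERS_PER_HOUR AS "
            + "SELECT USER_ID, COUNT(*) AS ORDER_COUNT "
            + "FROM ORDERS "
            + "WINDOW TUMBLING (SIZE 1 HOUR, RETENTION " + retentionDays + " DAYS) "
            + "GROUP BY USER_ID EMIT CHANGES;";
    }

    public static void main(String[] args) {
        // Print the statement; it could be submitted through any ksqlDB client.
        System.out.println(createStatement(7));
    }
}
```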

New Java client methods

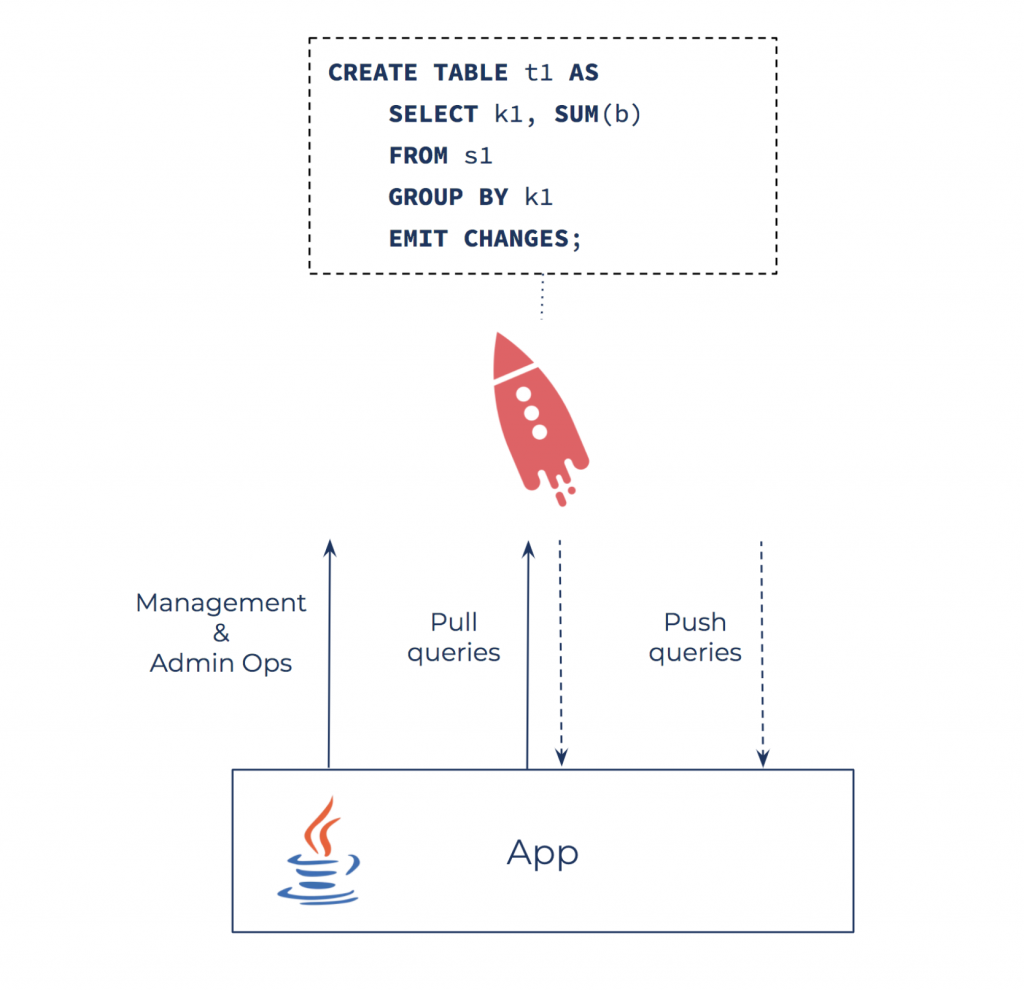

ksqlDB 0.10.0 saw the introduction of ksqlDB’s first-class Java client, including support for pull and push queries, as well as inserting new rows into existing ksqlDB streams. We’re happy to unveil expanded functionality in ksqlDB 0.11.0, including methods to create and manage new streams, tables, and persistent queries, as well as admin operations such as listing existing streams, tables, topics, and queries.

Create and manage new streams, tables, and persistent queries

ksqlDB statements for creating and managing new streams, tables, and persistent queries include:

- CREATE STREAM/TABLE

- CREATE STREAM/TABLE … AS SELECT

- INSERT INTO … AS SELECT

- DROP STREAM/TABLE

- TERMINATE <QUERY_ID>

As of ksqlDB 0.11.0, these statements are now supported by the Java client via its executeStatement() method. For example:

String sql = "CREATE STREAM ORDERS "

+ "(ORDER_ID BIGINT, PRODUCT_ID VARCHAR, USER_ID VARCHAR) "

+ "WITH (KAFKA_TOPIC='orders', VALUE_FORMAT='json');";

client.executeStatement(sql).get();

The executeStatement() method may also be used to easily retrieve the query ID for a newly created query. This query ID may later be used to terminate the query.

String sql = "CREATE TABLE ORDERS_BY_USER AS "

+ "SELECT USER_ID, COUNT(*) as COUNT "

+ "FROM ORDERS GROUP BY USER_ID EMIT CHANGES;";

ExecuteStatementResult result = client.executeStatement(sql).get();

String queryId = result.queryId().get();

client.executeStatement("TERMINATE " + queryId + ";").get();

See the documentation for additional options and usage notes.
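The example above calls get() on the value returned by queryId(), which suggests the ID is wrapped in an Optional; presumably it is empty for statements that do not start a query, such as a plain CREATE STREAM. Under that assumption, a small helper can build the TERMINATE statement only when an ID is present. The class name, method name, and sample query ID below are hypothetical and not part of the client.

```java
import java.util.Optional;

// Hypothetical helper; not part of the ksqlDB Java client.
final class TerminateStatements {

    // Returns a TERMINATE statement if a query ID is present, else empty.
    static Optional<String> terminateStatement(Optional<String> queryId) {
        return queryId.map(id -> "TERMINATE " + id + ";");
    }

    public static void main(String[] args) {
        // Sample ID invented for illustration.
        Optional<String> withId = Optional.of("CTAS_ORDERS_BY_USER_0");
        terminateStatement(withId).ifPresent(System.out::println);

        // Nothing is printed when no query was started.
        terminateStatement(Optional.empty()).ifPresent(System.out::println);
    }
}
```

This avoids the NoSuchElementException that an unconditional get() throws on an empty Optional.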

List streams, tables, topics, and queries

As of ksqlDB 0.11.0, the Java client also supports listStreams(), listTables(), listTopics(), and listQueries() methods for retrieving information about available ksqlDB streams and tables, Kafka topics, and running queries. For details and examples, see the documentation.

Enhanced pull query support

ksqlDB’s pull queries allow users to fetch the current state of a materialized view. When the materialized state contains the results of a windowed query, it’s useful to filter state on not only the rowkey but also the window bounds.

Prior to ksqlDB 0.11.0, this filtering was enabled by the WINDOWSTART keyword. Starting with ksqlDB 0.11.0, the WINDOWEND keyword may be used in pull query filters as well. This new functionality is especially useful in the case of session windows, where the size of the time windows is not known in advance.

SELECT WINDOWSTART, WINDOWEND, count, alert_types

FROM alerts_per_session

WHERE location = 'DataCenter1'

AND '2020-07-02T20:00:00' <= WINDOWSTART

AND WINDOWEND <= '2020-07-03T08:00:00';
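To see why bounding both ends matters for session windows, the filter in the query above can be modeled in plain Java. This is only an illustration of the predicate, not how ksqlDB evaluates pull queries; the `SessionWindow` class and the three sample sessions are invented for the example.

```java
import java.time.LocalDateTime;
import java.util.List;
import java.util.stream.Collectors;

// Illustrative only: models the pull query's window-bound filter in plain Java.
final class SessionWindowFilter {

    // Hypothetical stand-in for one row of the alerts_per_session table.
    static final class SessionWindow {
        final LocalDateTime start; // WINDOWSTART
        final LocalDateTime end;   // WINDOWEND
        final long count;

        SessionWindow(String start, String end, long count) {
            this.start = LocalDateTime.parse(start);
            this.end = LocalDateTime.parse(end);
            this.count = count;
        }
    }

    // Keeps windows with lower <= WINDOWSTART and WINDOWEND <= upper.
    static List<SessionWindow> within(
            List<SessionWindow> windows, LocalDateTime lower, LocalDateTime upper) {
        return windows.stream()
            .filter(w -> !w.start.isBefore(lower))
            .filter(w -> !w.end.isAfter(upper))
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Sessions have different lengths, so the end cannot be derived from the start.
        List<SessionWindow> sessions = List.of(
            new SessionWindow("2020-07-02T19:30:00", "2020-07-02T20:15:00", 3),
            new SessionWindow("2020-07-02T21:00:00", "2020-07-02T23:45:00", 7),
            new SessionWindow("2020-07-03T07:30:00", "2020-07-03T08:20:00", 2));

        List<SessionWindow> matches = within(
            sessions,
            LocalDateTime.parse("2020-07-02T20:00:00"),
            LocalDateTime.parse("2020-07-03T08:00:00"));

        for (SessionWindow w : matches) {
            System.out.println(w.start + " to " + w.end + ": " + w.count);
        }
    }
}
```

In this sample, the third session starts inside the range but ends after it. A filter on WINDOWSTART alone would return it, while the WINDOWEND bound excludes it.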

Get started

Get started with ksqlDB today, via the standalone distribution or with Confluent Cloud, and join the community in our #ksqldb Confluent Community Slack channel.
