
Real-Time AI Sales Coaching

Deliver consistent, high-impact sales conversations with a real-time GenAI-powered meeting coach. Using retrieval-augmented generation (RAG), this system taps into your company’s internal knowledge base to provide tailored coaching during live sales calls. Built on Confluent Cloud, Flink, and Azure OpenAI, it’s like giving every rep your best sales enablement materials right when they need them most.

Boost Sales Rep Performance and Win Rates

Empower your sales teams with a GenAI meeting coach that delivers live, contextual guidance based on internal sales documents such as battlecards, playbooks, and objection-handling tips. By combining RAG with Confluent Cloud, Apache Flink, and Azure OpenAI, this solution streams live calls in real time, processes the conversations, and queries an internal knowledge base to generate relevant responses that keep reps on message, improve conversion rates, and drive consistent execution across sales teams.

Context-aware coaching that combines real-time vector search and LLM inference to deliver precise sales guidance that adapts as the conversation unfolds

A vector store that updates automatically and continuously keeps coaching aligned with the latest sales documents

Scalable streaming data pipelines ensure low-latency, reliable performance

Stored insights enable analytics, model retraining, and an audit trail for compliance tracking

Build with Confluent

This use case leverages the following building blocks in Confluent Cloud:

Reference Architecture

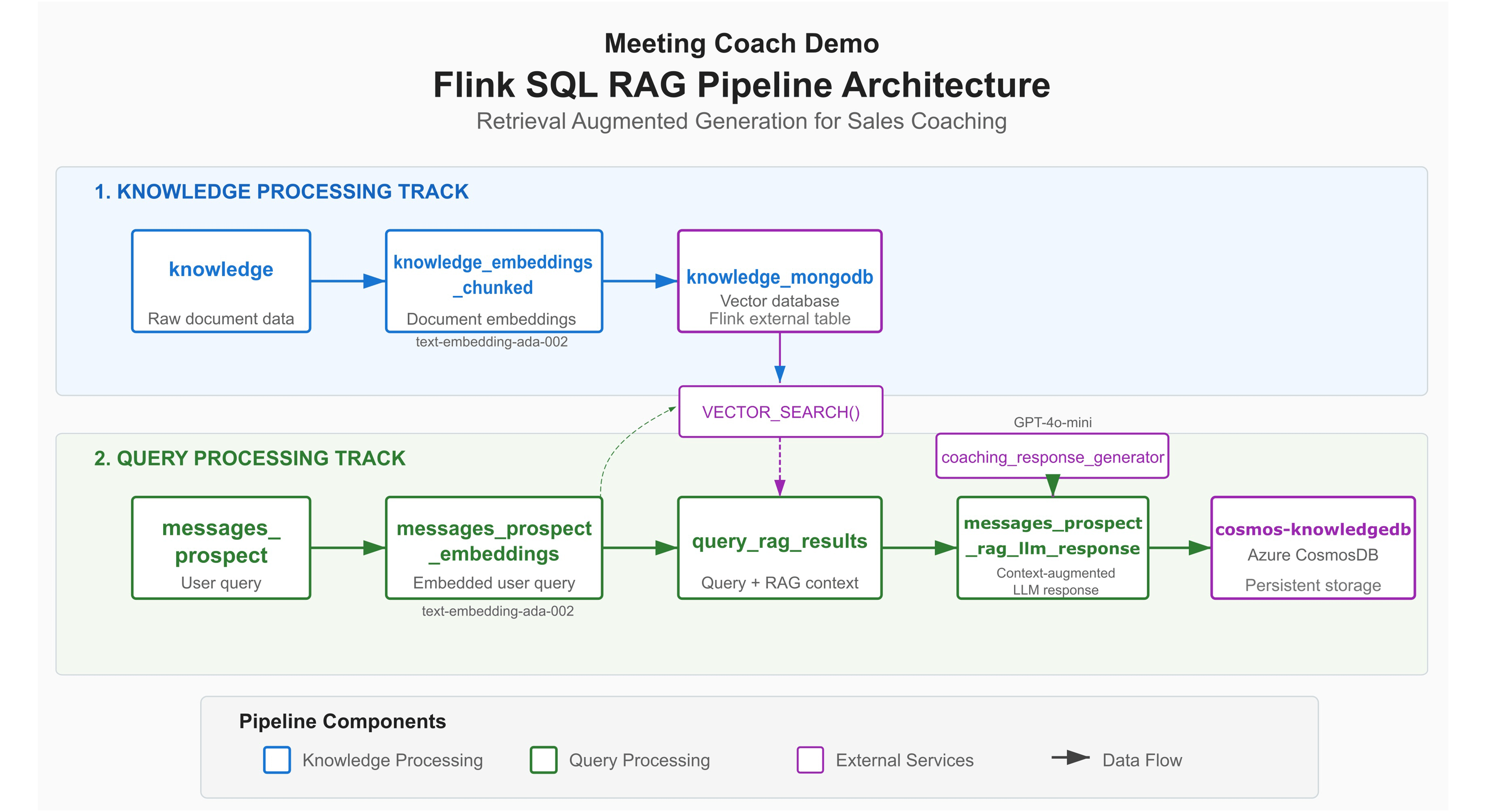

This Flink SQL RAG pipeline architecture has two tracks. The knowledge processing track streams unstructured document data from an internal knowledge base, splits it into chunks, and converts the chunks into vector embeddings before storing them in a vector database such as Azure Cosmos DB or MongoDB Atlas.
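To make the knowledge processing track concrete, here is a minimal Python sketch of the chunk, embed, and store steps. It is not Confluent's or Flink's API: `chunk_text`, `toy_embed`, and `index_document` are hypothetical helpers, the hashed bag-of-words `toy_embed` stands in for a real embedding model (such as one called through Flink AI model inference), and a plain dict stands in for Azure Cosmos DB or MongoDB Atlas. The chunk size and overlap values are illustrative assumptions.

```python
import hashlib
import math
import re

def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of `chunk_size` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks

def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9']+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

def index_document(store: dict, doc_id: str, text: str) -> int:
    """Re-chunk and re-embed a document, replacing any chunks stored for it earlier."""
    for key in [k for k in store if k[0] == doc_id]:
        del store[key]
    chunks = chunk_text(text)
    for i, chunk in enumerate(chunks):
        store[(doc_id, i)] = {"text": chunk, "embedding": toy_embed(chunk)}
    return len(chunks)

# Usage with a hypothetical 100-word document.
vector_store: dict = {}
doc = " ".join(f"w{i}" for i in range(100))
print(index_document(vector_store, "battlecard", doc))  # → 3
```

Dropping a document's old chunks before re-indexing is one simple way to keep the store aligned with the latest version of each document; a streaming pipeline might instead rely on keyed upserts in the sink.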

Inference is triggered during sales conversations: the query processing track uses Flink to run a vector search, retrieve the most relevant content from the vector database, and pass it, along with the conversational context, to OpenAI’s GPT to generate a real-time coaching response.
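The retrieval and prompt-assembly steps of the query processing track can be sketched as follows, assuming embeddings have already been computed. This is an illustrative Python sketch, not the Flink vector search API: brute-force cosine-similarity top-k stands in for the vector database's search, the three-dimensional vectors and knowledge snippets are made-up sample data, the prompt wording is hypothetical, and the actual GPT call is left out. `top_k` and `build_prompt` are hypothetical names.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_k(query_vec: list[float], items: list[dict], k: int = 2) -> list[str]:
    """Brute-force nearest-neighbor search: rank every chunk by similarity to the query."""
    scored = sorted(items, key=lambda item: cosine(query_vec, item["embedding"]), reverse=True)
    return [item["text"] for item in scored[:k]]

def build_prompt(transcript: str, retrieved: list[str]) -> str:
    """Combine retrieved knowledge with the live conversation into one LLM prompt."""
    context = "\n".join(f"- {chunk}" for chunk in retrieved)
    return (
        "You are a sales coach. Using only the reference material below, "
        "suggest what the rep should say next.\n\n"
        f"Reference material:\n{context}\n\n"
        f"Conversation so far:\n{transcript}\n"
    )

# Made-up sample chunks with hand-picked 3-d embeddings.
knowledge = [
    {"text": "Pricing objection: lead with total cost of ownership.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "Competitor comparison: stress real-time data.", "embedding": [0.1, 0.9, 0.1]},
    {"text": "Onboarding question: share the standard rollout plan.", "embedding": [0.0, 0.2, 0.9]},
]
query_vec = [1.0, 0.2, 0.0]  # hypothetical embedding of "Your price is too high."
hits = top_k(query_vec, knowledge, k=1)
prompt = build_prompt("customer: Your price is too high.", hits)
print(prompt)
```

Instructing the model to answer only from the retrieved material is what grounds the coaching response in the company's own documents rather than in the model's general knowledge.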

Fully managed source connectors continuously ingest conversation data from meetings, while sink connectors stream vector embeddings into vector stores to keep them current. Sink connectors also stream enriched data to other downstream systems, including dashboards and CRMs.
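One way a sink can keep a vector store current is to treat each record as a keyed change: a new value for a key replaces the old one, and a null value (the tombstone convention Kafka uses for compacted topics) deletes it. The Python sketch below models only those semantics, assuming records keyed by a hypothetical `doc#chunk` ID; `apply_record` is a hypothetical helper, and whether a specific sink connector applies deletes this way depends on that connector and its configuration.

```python
from typing import Optional

def apply_record(store: dict, key: str, value: Optional[dict]) -> None:
    """Apply one keyed change record: a value upserts, None (a tombstone) deletes."""
    if value is None:
        store.pop(key, None)  # deleting a key that is not present is a no-op
    else:
        store[key] = value  # a newer value for the same key overwrites the old one

# Hypothetical changelog: chunk 0 is revised, chunk 1 is retired.
changelog = [
    ("playbook#0", {"text": "Opener v1", "embedding": [0.1, 0.2]}),
    ("playbook#1", {"text": "Closing tips", "embedding": [0.3, 0.4]}),
    ("playbook#0", {"text": "Opener v2", "embedding": [0.5, 0.6]}),
    ("playbook#1", None),
]
vector_store: dict = {}
for key, value in changelog:
    apply_record(vector_store, key, value)
print(vector_store)  # → {'playbook#0': {'text': 'Opener v2', 'embedding': [0.5, 0.6]}}
```

Because every write is keyed, replaying the same changelog produces the same final store, which makes this style of update safe to retry.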

Flink processes sales conversation events in real time. It uses AI model inference to call embedding models that generate embeddings, performs vector search, and supplies the retrieved context in prompts so that LLMs generate accurate, grounded responses with fewer hallucinations.
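Supplying conversational context to a prompt requires keeping some per-call state as events stream in. The Python sketch below approximates keyed state in a stream processor: it keeps the last few utterances per `call_id` and emits a coaching request whenever the customer speaks. The `Utterance` fields, the window size, and the customer-turn trigger are assumptions for illustration, not the solution's actual schema or trigger logic; in Flink this would more likely be expressed with keyed state or a windowed query.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional

@dataclass
class Utterance:
    call_id: str  # hypothetical event fields
    speaker: str  # "rep" or "customer"
    text: str

class ContextWindow:
    """Keeps the last `max_turns` utterances per call, similar to keyed state."""

    def __init__(self, max_turns: int = 3):
        # One bounded deque per call_id; the oldest turns fall off automatically.
        self.turns = defaultdict(lambda: deque(maxlen=max_turns))

    def process(self, event: Utterance) -> Optional[dict]:
        window = self.turns[event.call_id]
        window.append(f"{event.speaker}: {event.text}")
        # In this sketch, only customer turns trigger a coaching request.
        if event.speaker == "customer":
            return {"call_id": event.call_id, "context": "\n".join(window)}
        return None

coach = ContextWindow(max_turns=2)
events = [
    Utterance("call-1", "rep", "Thanks for joining."),
    Utterance("call-1", "customer", "We already use a competitor."),
    Utterance("call-2", "customer", "What does it cost?"),
    Utterance("call-1", "customer", "And it seems expensive."),
]
coaching_requests = []
for event in events:
    req = coach.process(event)
    if req is not None:
        coaching_requests.append(req)
for req in coaching_requests:
    print(req)
```

Keying the state by `call_id` keeps concurrent calls isolated, so context from one conversation never leaks into another's prompt.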

Stream Governance ensures data quality, security, compliance, and traceability by enforcing schemas with Schema Registry and by providing data lineage, metadata, granular access controls, and audit logging.
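Schema Registry enforces registered Avro, JSON Schema, or Protobuf schemas; the Python sketch below only illustrates the underlying idea of rejecting malformed events before they reach downstream consumers. The `CONVERSATION_EVENT_SCHEMA` fields and the `validate` helper are hypothetical, and strict type checks over a dict are a simplified stand-in, not how Schema Registry itself works.

```python
CONVERSATION_EVENT_SCHEMA = {  # hypothetical field -> type mapping
    "call_id": str,
    "speaker": str,
    "text": str,
    "timestamp_ms": int,
}

def validate(event: dict, schema: dict) -> list[str]:
    """Return a list of problems; an empty list means the event conforms."""
    errors = [f"missing field: {name}" for name in schema if name not in event]
    errors += [f"unexpected field: {name}" for name in event if name not in schema]
    errors += [
        f"wrong type for {name}: expected {expected.__name__}, got {type(event[name]).__name__}"
        for name, expected in schema.items()
        # exact type match, so e.g. True is not accepted as an int
        if name in event and type(event[name]) is not expected
    ]
    return errors

good = {"call_id": "call-1", "speaker": "customer", "text": "Too pricey.", "timestamp_ms": 1700000000000}
bad = {"call_id": "call-1", "speaker": "customer", "timestamp_ms": "noon"}
print(validate(good, CONVERSATION_EVENT_SCHEMA))  # → []
print(validate(bad, CONVERSATION_EVENT_SCHEMA))
# → ['missing field: text', 'wrong type for timestamp_ms: expected int, got str']
```

Checking events at the producer, before they are written, keeps malformed records out of every downstream consumer at once, including the coaching pipeline, dashboards, and CRMs.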