
USE CASE | SHIFT-LEFT ANALYTICS

Less data cleaning. More building.

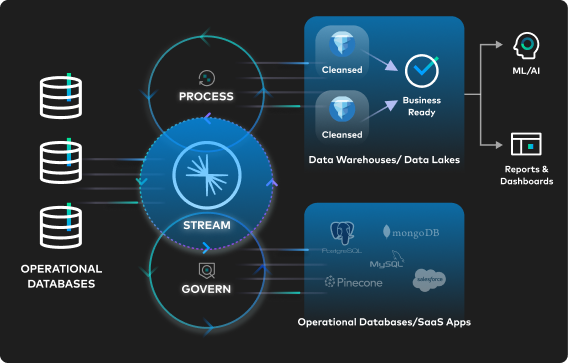

Ready to put an end to unnecessary data proliferation and manual troubleshooting in data pipelines? Process and govern data at the source with a data streaming platform, within milliseconds of its creation.

Shifting processing and governance left can reduce data quality issues by up to 60%, cut compute costs by 30%, and maximize developer productivity and data warehouse ROI. Explore more resources to get started, or download Shift Left: Unifying Operations and Analytics With Data Products.

Why shift processing and governance left?

In data integration, "shifting left" refers to an approach in which data processing and governance are performed closer to the source where the data is generated. Cleaning and processing data earlier in the data lifecycle makes it possible to build data products that give all downstream consumers, including cloud data warehouses, data lakes, and data lakehouses, a single source of well-defined, well-formatted data.
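As a rough illustration of the idea, the sketch below validates, normalizes, and deduplicates raw events once, before any consumer reads them, so that every downstream system works from the same cleaned records. It is a minimal in-memory sketch, not Confluent's implementation: the `Order` type, the field names, the validation rules, and the `clean_at_source` function are all hypothetical, and in a real deployment this logic would typically run in a stream processor rather than in a Python loop.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Order:
    """Hypothetical schema for the cleaned data product."""
    order_id: str
    amount_cents: int
    currency: str


def clean_at_source(raw_events: Iterable[dict]) -> Iterator[Order]:
    """Validate, normalize, and deduplicate events once, upstream of all consumers."""
    seen_ids: set[str] = set()  # in-memory stand-in for durable dedup state
    for event in raw_events:
        order_id = str(event.get("order_id") or "").strip()
        amount = event.get("amount_cents")
        currency = str(event.get("currency") or "").strip().upper()

        # Drop records that fail basic validation (assumed rules).
        if not order_id or not isinstance(amount, int) or amount < 0:
            continue
        if len(currency) != 3:
            continue

        # Drop duplicates so no downstream system has to repeat this step.
        if order_id in seen_ids:
            continue
        seen_ids.add(order_id)

        yield Order(order_id, amount, currency)


raw_events = [
    {"order_id": "A1", "amount_cents": 1250, "currency": "usd"},
    {"order_id": "A1", "amount_cents": 1250, "currency": "usd"},  # duplicate
    {"order_id": "", "amount_cents": 300, "currency": "EUR"},     # missing id
    {"order_id": "B2", "amount_cents": -5, "currency": "EUR"},    # negative amount
    {"order_id": "C3", "amount_cents": 990, "currency": "eur "},  # needs normalizing
]

# One cleaned data product, reused by several hypothetical consumers.
data_product = list(clean_at_source(raw_events))
warehouse_total_cents = sum(order.amount_cents for order in data_product)
lakehouse_order_ids = [order.order_id for order in data_product]
```

Because the cleaning happens once upstream, the warehouse and lakehouse consumers in this sketch never see the duplicate or the invalid records and do not need their own cleanup jobs.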

Deliver reusable, reliable data products

Process and govern data once, at the source, and reuse it across different contexts. With Apache Flink®, data can be shaped on the fly.

Analyze the freshest, highest-quality data

With Tableflow, ensure high-fidelity data that flows continuously into the lakehouse and evolves seamlessly.

Maximize the ROI of data warehouses and data lakes

Reduce data quality issues by 40 to 60% so the data engineering team can focus on more strategic projects.

"Confluent has helped us take a shift-left approach to our data, so the business units now have full control over their data, from source to output. The data is now clean and validated from the start and immediately ready to use, which reduces rework and speeds up delivery."

"Confluent Tableflow simplifies our data architecture by turning Kafka topics into reliable, analytics-ready tables in just a few clicks. We can now ensure a dependable data foundation for real-time customer insights and AI innovation."

Zazzle implemented Confluent's fully managed Flink offering to transform its largest data pipeline. By shifting stream processing earlier in the pipeline, before data is written to Google BigQuery, Zazzle lowered storage and compute costs while delivering more relevant product recommendations, which had a direct impact on revenue.

"We have more than 40,000 ETL jobs today. It's chaos ... a shift-left approach to data management would be transformative for our company."

"[Data cleansing] is an expensive way to move data into Delta Lake. Deduplicating in Confluent is the cheaper option. We only have to do it once."

"I love the [shift-left analytics] approach. It would make our datasets easier to discover. I knew Confluent had an integration with Alation, but it's great to hear that there are even more ways [with Data Portal] to take advantage of these capabilities."

Trends in shift-left analytics

Build reusable data products with a shift-left approach

Learn all about shift-left analytics and how this simple yet powerful approach to data integration helps modern organizations innovate.

How to: shift processing left out of data warehouses

Watch now

How to optimize data ingestion into lakehouses and warehouses

Download the guide

Tableflow is generally available: unifying Apache Kafka® topics and Apache Iceberg™ tables

Read the blog post

Eliminate data chaos with universal data products

Download the e-book

Accelerate data streaming projects with our partners

We work with our extensive partner ecosystem to make it easier for customers to build, access, discover, and share high-quality data products across the organization. Find out how innovative companies such as Notion, Citizens Bank, and DISH Wireless use the data streaming platform and our native cloud, software, and service integrations to shift data processing and governance left and maximize the value of their data.

Notion enriches data on the fly to power generative AI features

Citizens Bank increases processing speed by 50% for CX and more

DISH Wireless builds reusable data products for Industry 4.0

Maximize your data warehouse in 4 steps

Delivering fresh, reliable data lets you get the most out of data warehouses and data lakehouses. This requires a complete data streaming platform that makes it possible to stream, connect, govern, and process data (and materialize it in open table formats), wherever it resides.

Confluent helps organizations implement a shift-left approach and maximize the value of data warehouse and data lake workloads. Contact us to learn how to adopt shift-left architectures and advance analytics and AI use cases.