Optimize Search and Analytics with Real-Time Data Streaming

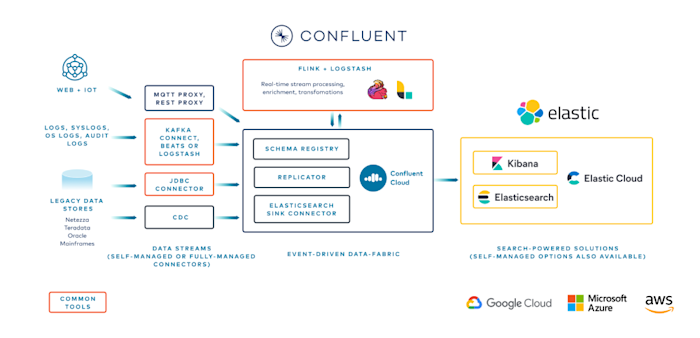

Build powerful, data-driven applications with Confluent and Elastic, using real-time data streaming to optimize search, analytics, and customer experiences. Confluent provides distributed, scalable, and secure data delivery that can handle trillions of events a day with Confluent Platform and Confluent Cloud. Elastic offers a secure and flexible platform for data storage, aggregation, search, and real-time analytics that can be deployed either on-premises or on Elastic Cloud.

Why Confluent with Elastic?

Ingest, Aggregate & Store Sensor Data

Integrate security event and sensor data into a single distributed, scalable, and persistent platform. This unified approach improves data consistency and supports more comprehensive analysis across data sources.

Transform, Process & Analyze

Blend varied data streams using Flink for richer threat detection, investigation, and real-time analysis. This advanced processing allows for more nuanced insights and faster response times to potential security issues.

Share Data to Any Source

Send aggregated data to any connected source, including SIEM indexes, search, and custom apps. Make critical information available where it is needed most, and improve system interoperability and decision-making.

Enable New Anomaly Detection Capabilities

Unlock insights in SIEM data by running new machine learning and artificial intelligence models. You can identify subtle patterns and anomalies that traditional analysis methods might miss.

How Confluent and Elastic Integration Works

The Elasticsearch sink connector helps you integrate Apache Kafka® and Elasticsearch with minimal effort. It streams data from Kafka directly into Elasticsearch, where it can be used for log analysis, security analytics, or full-text search. You can also run real-time analytics on this data, or explore it with visualization tools such as Kibana, to derive actionable insights from your data stream.
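As a rough illustration of what "minimal effort" can look like, the sketch below builds the JSON body that a Kafka Connect worker accepts on `POST /connectors`. The connector name, topic, and URL are placeholder assumptions, and only a handful of settings are shown; a real deployment will likely need security and converter settings as well.

```python
import json


def build_connector_payload(name, topics, elasticsearch_url):
    """Return a request body for POST /connectors on a Kafka Connect worker.

    All argument values are supplied by the caller; nothing here contacts
    Kafka Connect or Elasticsearch.
    """
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
            "tasks.max": "1",
            # Kafka Connect expects a comma-separated list of topic names.
            "topics": ",".join(topics),
            "connection.url": elasticsearch_url,
            # With key.ignore=true the connector derives document IDs from
            # topic, partition, and offset instead of the record key.
            "key.ignore": "true",
        },
    }


# Placeholder values for illustration only.
payload = build_connector_payload(
    "elasticsearch-sink", ["security-events"], "http://localhost:9200"
)
print(json.dumps(payload, indent=2))
```

The printed JSON could then be submitted to a Connect worker with any HTTP client; the worker address depends on your environment.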

Key Features

Flexible Data Handling

Supports JSON data with and without schemas, as well as Avro format.
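The data format is largely chosen through Kafka Connect converter settings. The helper below is a minimal sketch that maps three format labels (names invented for this example) to the converter properties typically used for each; the Schema Registry URL is a placeholder you would supply.

```python
def value_converter_settings(data_format, schema_registry_url=None):
    """Return converter properties for a hypothetical format label."""
    json_converter = "org.apache.kafka.connect.json.JsonConverter"
    if data_format == "json-schemaless":
        return {
            "value.converter": json_converter,
            "value.converter.schemas.enable": "false",
            # Let Elasticsearch infer the mapping from the documents.
            "schema.ignore": "true",
        }
    if data_format == "json-with-schema":
        return {
            "value.converter": json_converter,
            "value.converter.schemas.enable": "true",
        }
    if data_format == "avro":
        if not schema_registry_url:
            raise ValueError("Avro requires a Schema Registry URL")
        return {
            "value.converter": "io.confluent.connect.avro.AvroConverter",
            "value.converter.schema.registry.url": schema_registry_url,
        }
    raise ValueError(f"unknown data format: {data_format}")


avro_settings = value_converter_settings("avro", "http://localhost:8081")
```

These properties would be merged into the connector's `config` map alongside its other settings.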

Version Compatibility

Works with Elasticsearch 2.x, 5.x, 6.x, and 7.x.

Index Rotation

Use the TimestampRouter single message transform (SMT) to create time-based indexes, which are well suited to log data management.
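To make index rotation concrete, the sketch below shows the TimestampRouter properties for daily indexes, plus a small Python function that approximates the resulting name. The transform alias `routeTS` is arbitrary, and `routed_name` is only an illustration of the naming pattern, not the transform's own code.

```python
from datetime import datetime, timezone

# Connector properties that enable daily rotation. The date pattern uses
# Java SimpleDateFormat syntax, so yyyyMMdd means year, month, day.
timestamp_router_settings = {
    "transforms": "routeTS",
    "transforms.routeTS.type": "org.apache.kafka.connect.transforms.TimestampRouter",
    "transforms.routeTS.topic.format": "${topic}-${timestamp}",
    "transforms.routeTS.timestamp.format": "yyyyMMdd",
}


def routed_name(topic, timestamp_ms, topic_format="${topic}-${timestamp}"):
    """Approximate the routed topic (and so index) name for a record.

    Assumes a daily yyyyMMdd pattern, rendered here as %Y%m%d in UTC.
    """
    ts = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)
    return (
        topic_format
        .replace("${topic}", topic)
        .replace("${timestamp}", ts.strftime("%Y%m%d"))
    )


print(routed_name("app-logs", 1700000000000))  # app-logs-20231114
```

With daily indexes, older log data can be dropped by deleting whole indexes rather than individual documents.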

Error Handling

Configure how to handle malformed documents, with options to ignore, warn, or fail.
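The connector exposes this choice through the `behavior.on.malformed.documents` property. The sketch below validates a value against the three options listed above before adding it to a configuration; the helper name is an assumption made for this example.

```python
MALFORMED_DOCUMENT_OPTIONS = ("ignore", "warn", "fail")


def malformed_document_settings(behavior):
    """Return the property for handling documents Elasticsearch rejects."""
    if behavior not in MALFORMED_DOCUMENT_OPTIONS:
        raise ValueError(
            f"behavior must be one of {MALFORMED_DOCUMENT_OPTIONS}, got {behavior!r}"
        )
    return {"behavior.on.malformed.documents": behavior}


# "warn" logs the problem and keeps the connector running.
malformed_settings = malformed_document_settings("warn")
```

Choosing `fail` stops the task on the first bad document, which can be preferable when silent data loss is unacceptable.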

Tombstone Message Support

Specify behavior for null values, enabling deletion of records in Elasticsearch.
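The relevant property is `behavior.on.null.values`, which accepts `ignore`, `delete`, or `fail`. The function below is a simplified model of how each option treats a record, written only to illustrate the idea; it is not the connector's implementation. Deletion relies on the record key as the document ID, so it is assumed here that `key.ignore` is `false`.

```python
def plan_action(key, value, behavior="delete"):
    """Return (action, document_id) for one record in this simplified model."""
    if value is not None:
        return ("index", key)      # normal record: index or update the document
    if behavior == "ignore":
        return ("skip", key)       # tombstone is dropped silently
    if behavior == "delete":
        return ("delete", key)     # tombstone removes the document with this ID
    if behavior == "fail":
        raise ValueError(f"null value for key {key!r}")
    raise ValueError(f"unknown behavior: {behavior!r}")


tombstone_settings = {"behavior.on.null.values": "delete", "key.ignore": "false"}

actions = [
    plan_action("user-1", {"name": "Ada"}),
    plan_action("user-1", None),            # tombstone for the same key
]
print(actions)
```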

Performance Tuning

Optimize throughput with configurable batch size, buffer size, and flush timeout.
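These three knobs correspond to the `batch.size`, `max.buffered.records`, and `flush.timeout.ms` properties. The sketch below assembles them into a configuration fragment; the numbers are illustrative rather than recommendations, and the positivity check is a sanity check added for this example, not a rule taken from the connector.

```python
def tuning_settings(batch_size, max_buffered_records, flush_timeout_ms):
    """Return throughput-related properties as the strings Kafka Connect expects."""
    values = {
        "batch.size": batch_size,                      # records per bulk request
        "max.buffered.records": max_buffered_records,  # per-task buffer limit
        "flush.timeout.ms": flush_timeout_ms,          # max wait for a flush
    }
    for name, value in values.items():
        if not isinstance(value, int) or value <= 0:
            raise ValueError(f"{name} must be a positive integer, got {value!r}")
    return {name: str(value) for name, value in values.items()}


# Illustrative values only; measure against your own workload.
tuning = tuning_settings(
    batch_size=2000, max_buffered_records=20000, flush_timeout_ms=10000
)
```

Larger batches generally reduce per-request overhead at the cost of memory and latency, so it is worth changing one value at a time and measuring.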