Multi-Agent Sales Development Representative (SDR)

Leverage Confluent, AutoGen, OpenAI, and MongoDB to automate the SDR workflow with a multi-agent system. Enable real-time AI agents to process leads and personalize outreach to maximize conversion.

Transform Lead Engagement with Event-driven AI Agents

Accelerate sales development with an agentic SDR system where specialized AI agents handle every step – from lead ingestion to personalized outreach. Instead of relying on brittle, rule-based logic, this multi-agent system uses real-time intelligence and dynamic task orchestration to engage leads with precision.

At the core is Confluent Data Streaming Platform acting as an orchestrator that manages agent communication without rigid dependencies. Kafka serves as the backbone while Flink processes incoming events, invokes a large language model (LLM) for decision-making, and dispatches messages to appropriate agents. This enables real-time execution, scalable coordination, and adaptability as new leads enter the system.

- Free SDRs to spend time on higher-value tasks

- Enable dynamic, personalized lead outreach instead of a rigid nurture campaign

- Track performance, run A/B tests, and improve automatically over time

Build with Confluent

This use case leverages the following building blocks in Confluent Cloud:

Reference Architecture

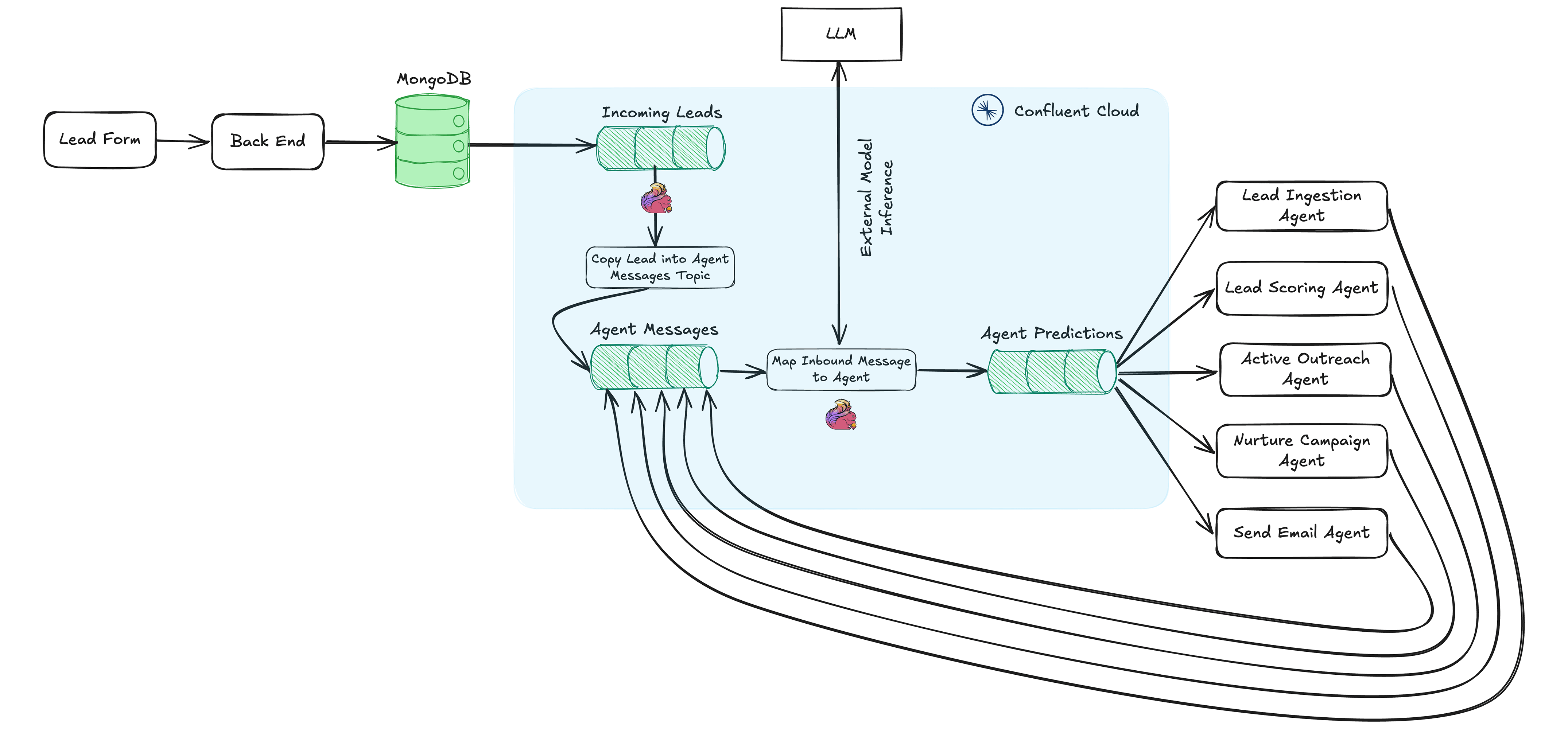

This architecture uses MongoDB for lead data storage, Confluent for streaming, Flink for real-time agent orchestration, and OpenAI’s GPT LLM to route tasks to specialized AI agents based on message context. The system consists of the following agents:

- Lead Ingestion Agent: Captures incoming leads from web forms, enriches them with external data (e.g., company website, Salesforce), and generates a report that can be used for scoring.

- Lead Scoring Agent: Uses enriched lead information to score leads and generate a short summary of how best to engage each one. Determines the appropriate next step and triggers downstream agents.

- Active Outreach Agent: Creates personalized outreach emails, incorporating insights from the lead’s online presence, in order to book a meeting.

- Nurture Campaign Agent: Dynamically creates a sequence of emails based on where the lead originated and what they were interested in.

- Send Email Agent: Sends the generated emails via an email relay or email service.

Continuously capture lead data from a web form to MongoDB, then ingest into Kafka using a MongoDB source connector.
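
As a rough illustration of the ingestion step, the sketch below builds a source connector configuration as a Python dictionary. The property names follow the self-managed MongoDB Kafka source connector; the fully managed connector in Confluent Cloud may use different property names, so treat this as an assumption to check against the connector documentation. The connector name, database (`sdr`), collection (`leads`), and topic prefix (`mongo`) are hypothetical and do not come from this page.

```python
import json

# Hypothetical values throughout: the page does not name the database,
# collection, topic prefix, or connector name.
connector_config = {
    "name": "sdr-leads-source",
    "config": {
        "connector.class": "com.mongodb.kafka.connect.MongoSourceConnector",
        # Placeholder URI; supply real credentials through a secret store.
        "connection.uri": "mongodb+srv://<user>:<password>@<cluster-host>/",
        "database": "sdr",
        "collection": "leads",
        "topic.prefix": "mongo",
        # Emit only the changed document rather than the full change event.
        "publish.full.document.only": "true",
    },
}


def source_topic(cfg: dict) -> str:
    """Return the topic the connector writes to: <prefix>.<database>.<collection>."""
    c = cfg["config"]
    return f'{c["topic.prefix"]}.{c["database"]}.{c["collection"]}'


print(json.dumps(connector_config, indent=2))
print(source_topic(connector_config))
```

With these placeholder values, new lead documents would land on a topic named after the prefix, database, and collection, which downstream Flink jobs could then read.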

Use Flink stream processing to enrich and transform data streams, and to call an LLM that determines in real time which agent should handle each task and generates personalized outreach and nurture content.
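
The routing decision described above can be sketched in plain Python. This is not Flink code and not the implementation behind this use case; it only illustrates the idea of asking a model to pick an agent and then mapping the answer to a destination topic. The agent keys, topic names, the `route` and `build_prompt` helpers, and the `fake_llm` stand-in are all hypothetical. A real system would pass in a function that calls the LLM.

```python
import json
from typing import Callable

# Hypothetical mapping from agent name to Kafka topic; the page names the
# agents but not the topics they consume from.
AGENT_TOPICS = {
    "lead_scoring": "agent.lead-scoring",
    "active_outreach": "agent.active-outreach",
    "nurture_campaign": "agent.nurture-campaign",
    "send_email": "agent.send-email",
}
FALLBACK_TOPIC = "agent.unrouted"  # hypothetical holding topic for bad answers


def build_prompt(event: dict) -> str:
    """Ask the model to choose exactly one agent for this event."""
    agents = ", ".join(sorted(AGENT_TOPICS))
    return (
        "Choose the agent that should handle this message. "
        f"Answer with exactly one of: {agents}.\n"
        f"Message: {json.dumps(event, sort_keys=True)}"
    )


def route(event: dict, llm: Callable[[str], str]) -> str:
    """Return the topic for the chosen agent, or the fallback topic
    if the model's answer is not a known agent name."""
    answer = llm(build_prompt(event)).strip().lower()
    return AGENT_TOPICS.get(answer, FALLBACK_TOPIC)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, used only to make the sketch runnable."""
    return "lead_scoring" if '"stage": "ingested"' in prompt else "not-an-agent"


print(route({"lead_id": "L-1", "stage": "ingested"}, fake_llm))
print(route({"lead_id": "L-2", "stage": "unknown"}, fake_llm))
```

Validating the model's answer against a fixed set of agent names keeps a malformed or unexpected response from sending a message to a topic that no agent reads.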

Schema Registry and data contracts ensure that all agent messages adhere to a consistent format. This keeps data compatible across heterogeneous systems and allows AI agents to process, interpret, and act on information effectively.
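
To make the idea of a shared message format concrete, here is a minimal plain-Python stand-in for a data contract. In practice Schema Registry enforces contracts through registered schemas; this sketch does not use it. The `AgentMessage` fields (`lead_id`, `source_agent`, `target_agent`, `payload`) and the `encode` and `decode` helpers are assumptions made for illustration, not the schema used by this use case.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentMessage:
    """Hypothetical envelope that every agent would produce and consume."""
    lead_id: str
    source_agent: str
    target_agent: str
    payload: dict

    def __post_init__(self) -> None:
        # Reject messages whose required fields are empty or mistyped.
        for name in ("lead_id", "source_agent", "target_agent"):
            value = getattr(self, name)
            if not isinstance(value, str) or not value:
                raise ValueError(f"{name} must be a non-empty string")
        if not isinstance(self.payload, dict):
            raise ValueError("payload must be an object")


def encode(msg: AgentMessage) -> bytes:
    """Serialize a message to UTF-8 JSON bytes."""
    return json.dumps(asdict(msg)).encode("utf-8")


def decode(raw: bytes) -> AgentMessage:
    """Parse bytes and fail if the message does not match the contract."""
    data = json.loads(raw)
    try:
        return AgentMessage(**data)
    except TypeError as exc:  # missing or unexpected fields, or non-object JSON
        raise ValueError(f"message violates contract: {exc}") from exc


msg = AgentMessage("L-1", "lead_ingestion", "lead_scoring", {"company": "Example Co"})
print(decode(encode(msg)) == msg)
```

Rejecting a malformed message at the point where it is read means every agent can rely on the same fields being present, which is the property the contract is meant to provide.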