
ENTERPRISE-GRADE STREAM GOVERNANCE

Discover, understand, and trust your data

Stream Governance provides visibility into and control over the structure, quality, and flow of data across all applications, analytics, and AI workloads.

Acertus lowers total cost of ownership and ensures high data quality

Vimeo builds data products in hours, not days or weeks

SecurityScorecard protects its most sensitive data

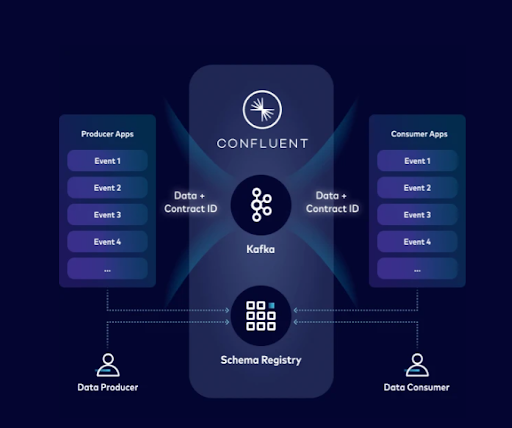

Stream Governance unifies data quality, discoverability, and traceability. Teams can build data products in real time by defining data contracts once and enforcing them when data is produced, not after batch processing.

How to protect personal data in Apache Kafka® with Schema Registry and data contracts

How to protect sensitive data

Ensure data quality at the source, not after batch processing

Stream Quality prevents bad data from entering your data streams.

It manages and enforces data contracts (schema, metadata, and quality rules) between producers and consumers within your private network, with:

Schema Registry

Define and enforce universal standards for all data streaming topics in a versioned repository

Data Contracts

Enforce semantic rules and business logic on data streams

Schema Validation

Brokers verify that messages written to a given topic use valid schemas

Schema Linking

Keep schemas in sync across cloud and hybrid environments in real time

Client-Side Field Level Encryption (CSFLE)

Protect sensitive data by encrypting specific message fields on the client
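The idea behind Stream Quality, a contract made of a schema plus quality rules that is checked before a record ever reaches a topic, can be illustrated with a minimal in-memory sketch. `DataContract`, `produce`, and the example rule are hypothetical names chosen for illustration; this is not how Confluent implements data contracts, only a picture of the "validate at the source" principle.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class DataContract:
    """Hypothetical data contract: a schema (field name -> Python type)
    plus named quality rules that every record must satisfy."""
    schema: dict[str, type]
    rules: dict[str, Callable[[dict[str, Any]], bool]] = field(default_factory=dict)

    def violations(self, record: dict[str, Any]) -> list[str]:
        problems = []
        # Structural check: every schema field must be present with the right type.
        for name, expected in self.schema.items():
            if name not in record:
                problems.append(f"missing field: {name}")
            elif not isinstance(record[name], expected):
                problems.append(f"wrong type for {name}: expected {expected.__name__}")
        # Semantic rules only run once the structure is known to be valid.
        if not problems:
            for rule_name, rule in self.rules.items():
                if not rule(record):
                    problems.append(f"rule failed: {rule_name}")
        return problems


def produce(topic: list[dict[str, Any]], contract: DataContract, record: dict[str, Any]) -> bool:
    """Append the record to the in-memory topic only if it satisfies the contract."""
    if contract.violations(record):
        return False
    topic.append(record)
    return True
```

For example, a contract with `schema={"order_id": str, "amount": float}` and a rule `amount_positive` rejects `{"order_id": "A2", "amount": -5.0}` before it is written, so downstream consumers never see it.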

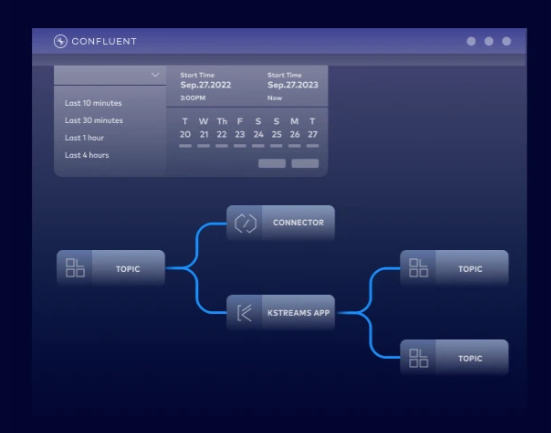

Monitor data streams and resolve issues in minutes

Any number of producers can write events to a shared log, and any number of consumers can read those events independently and in parallel. Producers and consumers can be added, evolved, recovered, and scaled without depending on one another.
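The independence described above comes from the log model: producers only append, and each consumer keeps its own read position. A toy sketch, with hypothetical class and method names (not the Kafka client API), ignoring partitions, retention, and persistence:

```python
from collections import defaultdict


class SharedLog:
    """Minimal append-only log: producers append events, and each
    consumer tracks its own read offset independently of the others."""

    def __init__(self) -> None:
        self._events: list[str] = []
        self._offsets: dict[str, int] = defaultdict(int)

    def append(self, event: str) -> int:
        """Append an event and return its offset in the log."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, consumer: str, max_events: int = 10) -> list[str]:
        """Return the next events for this consumer and advance only its offset."""
        start = self._offsets[consumer]
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch
```

Reading never removes events, so a slow consumer does not hold back a fast one. In this toy, a newly added consumer starts at offset 0, similar to a Kafka consumer configured with `auto.offset.reset=earliest`.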

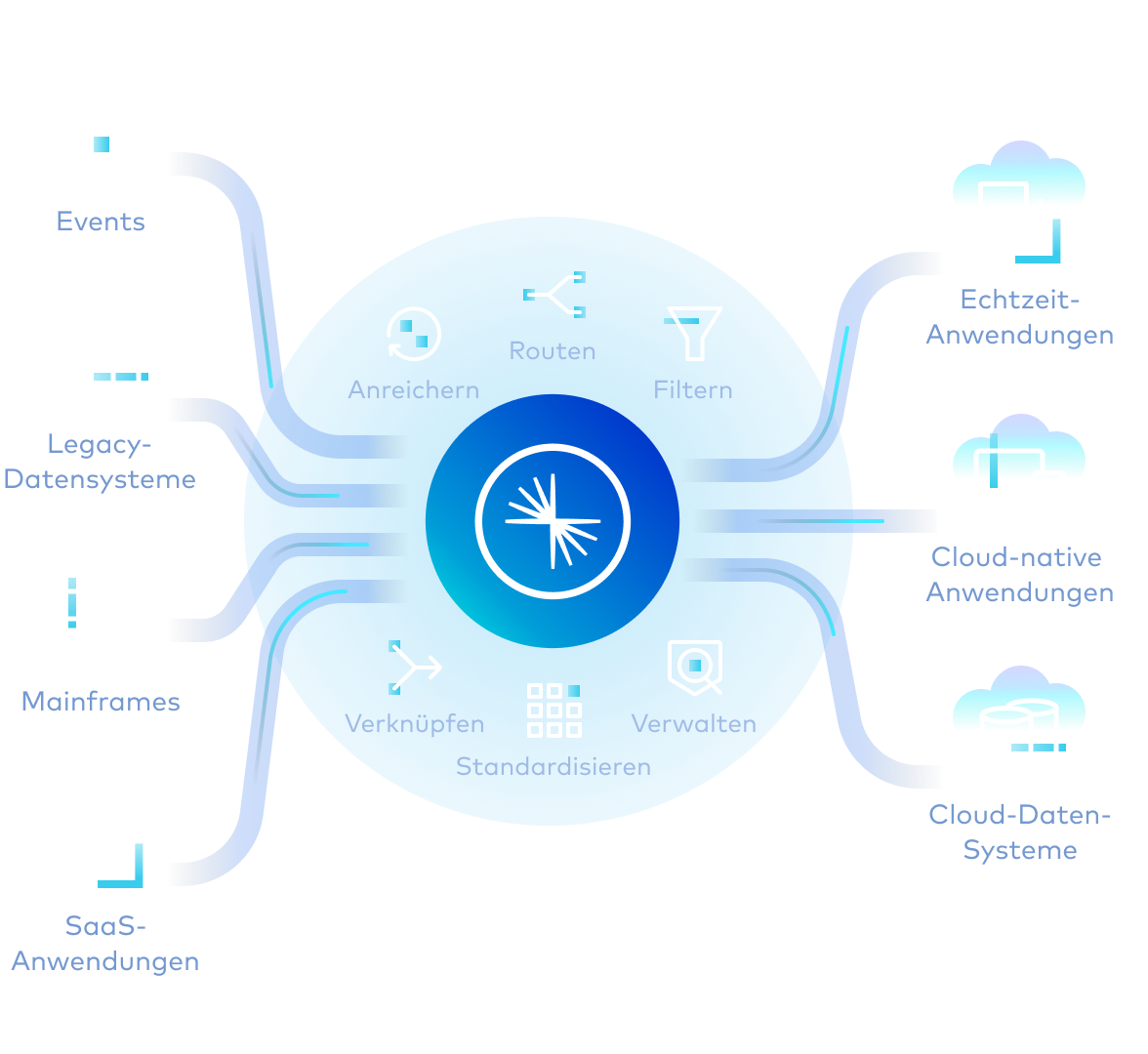

Confluent integrates legacy and modern systems:

Client Libraries

Build a sample client app in your preferred language in minutes.

Pre-built Connectors

Save 3 to 6 months of design, build, and testing effort per connector.

Custom Connectors

Build your own integrations while we manage the Connect infrastructure

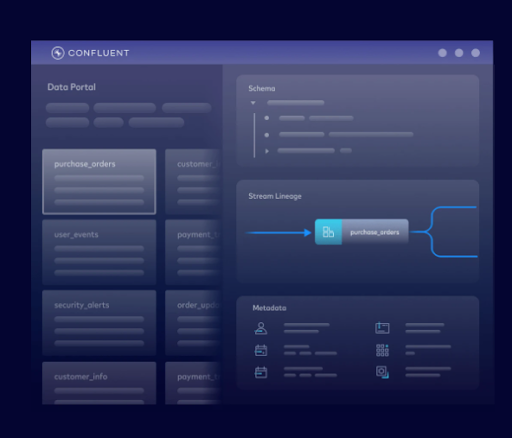

Accelerate time to market with self-service access

Stream Catalog organizes data streaming topics as data products that any operational, analytical, or AI system can access.

Metadata Tagging

Enrich topics with business context about teams, services, use cases, systems, and more

Data Portal

A user interface lets end users search, query, discover, request access to, and view any data product

Data Enrichment

Consume and enrich data streams, run queries, and build streaming data pipelines directly in the user interface

Programmatic Access

Search, create, and tag topics using a REST API based on Apache Atlas and a GraphQL API
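How metadata tagging turns a flat list of topics into something searchable can be pictured with a small in-memory model. `Catalog`, `TopicEntry`, their methods, and the example tags are hypothetical and do not mirror the Stream Catalog REST or GraphQL APIs; the sketch only shows the tag-and-search idea.

```python
from dataclasses import dataclass, field


@dataclass
class TopicEntry:
    name: str
    owner_team: str
    tags: set[str] = field(default_factory=set)


class Catalog:
    """Hypothetical in-memory catalog of topics enriched with business tags."""

    def __init__(self) -> None:
        self._topics: dict[str, TopicEntry] = {}

    def register(self, name: str, owner_team: str) -> None:
        self._topics[name] = TopicEntry(name, owner_team)

    def tag(self, name: str, *tags: str) -> None:
        """Attach one or more business tags to an existing topic."""
        self._topics[name].tags.update(tags)

    def search(self, *required_tags: str) -> list[str]:
        """Return the sorted names of topics carrying all requested tags."""
        wanted = set(required_tags)
        return sorted(t.name for t in self._topics.values() if wanted <= t.tags)
```

With topics tagged `PII` and `tier-1`, a query such as `catalog.search("PII", "tier-1")` narrows the result to the data products that match both business attributes.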

Future-proof data for any environment or architecture

Respond to changing business requirements at any time without disrupting downstream application, analytics, or AI workloads.

Safe Schema Evolution

Add new fields, change data structures, or update formats while maintaining compatibility with existing dashboards, reports, and ML models

Automated Compatibility Checks

Validate schema changes before deployment to avoid breaking downstream analytics applications and AI pipelines

Flexible Migration Options

Choose backward, forward, or full compatibility modes based on your specific upgrade requirements and operational constraints
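The three compatibility modes can be made concrete with a deliberately simplified checker. It models a schema as a mapping from field name to (type, has default) and applies Avro-style resolution: a reader can decode data if each of its fields exists in the writer schema with the same type, or has a default. Real Schema Registry checks also handle type promotion, unions, nested records, and the transitive variants, none of which are modeled here; the function names are hypothetical.

```python
# A field is (type_name, has_default); a schema maps field names to fields.
Schema = dict[str, tuple[str, bool]]


def can_read(reader: Schema, writer: Schema) -> bool:
    """True if data written with `writer` can be decoded with `reader`.
    Fields present only in the writer schema are simply ignored."""
    for name, (type_name, has_default) in reader.items():
        if name in writer:
            if writer[name][0] != type_name:
                return False
        elif not has_default:
            return False
    return True


def is_compatible(new: Schema, old: Schema, mode: str) -> bool:
    if mode == "BACKWARD":  # consumers on the new schema read old data
        return can_read(reader=new, writer=old)
    if mode == "FORWARD":  # consumers on the old schema read new data
        return can_read(reader=old, writer=new)
    if mode == "FULL":  # both directions must work
        return can_read(new, old) and can_read(old, new)
    raise ValueError(f"unknown compatibility mode: {mode}")
```

Adding an optional field (one with a default) passes all three modes, while adding a required field passes only FORWARD: old consumers ignore it, but new consumers cannot fill it in when reading old data.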

ACERTUS uses Schema Registry to make it easier to edit and extend its order contracts without changing the underlying code. Changes to schemas and topic data are captured in real time, so data consumers can trust that the data they find is accurate and reliable from the start.

With Stream Governance, Confluent ensures data quality and security, enabling Vimeo to scale and share data products safely across the organization.

“It's amazing how much more we can get done when we don't have to take care of everything ourselves. We can trust Confluent to provide a secure and completely reliable Kafka platform with a wide range of high-quality features such as security, connectors, and Stream Governance.”

New developers receive $400 in credits to use during their first 30 days, with no need to contact sales.

Confluent provides everything you need to:
- Build, with client libraries for languages such as Java and Python, code samples, more than 120 pre-built connectors, and a Visual Studio Code extension.
- Learn, with on-demand courses, certifications, and a global community of experts.
- Operate, with a CLI, infrastructure-as-code (IaC) support for Terraform and Pulumi, and OpenTelemetry observability.

Sign up through your cloud marketplace account below, or directly with us.

Confluent Cloud

A fully managed, cloud-native service for Apache Kafka®

Stream Governance With Confluent | FAQs

What is Stream Governance?

Stream Governance is a suite of fully managed tools that help you ensure data quality, discover data, and securely share data streams. It includes components like Schema Registry for data contracts, Stream Catalog for data discovery, and Stream Lineage for visualizing data flows.

Why is Stream Governance important for Apache Kafka?

As Kafka usage scales, managing thousands of topics and ensuring data quality becomes a major challenge. Governance provides the necessary guardrails to prevent data chaos, ensuring that the data flowing through Kafka is trustworthy, discoverable, and secure. This makes it possible to democratize data access safely.

What components are included in Confluent’s Stream Governance?

Stream Governance combines three areas: Stream Quality (Schema Registry, data contracts, broker-side schema validation, Schema Linking, and Client-Side Field Level Encryption), Stream Catalog (metadata tagging, the Data Portal, and programmatic access through REST and GraphQL APIs), and Stream Lineage for visualizing data flows.

How is Confluent’s approach different from open source Kafka governance?

While open source provides basic components like Schema Registry, Confluent offers a complete, fully managed, and integrated suite. Stream Governance combines data quality, catalog, and lineage into a single solution that is deeply integrated with the Confluent Cloud platform, including advanced features like a Data Portal, graphical lineage, and enterprise-grade SLAs.

Does Stream Governance support industry standards like Avro and Protobuf?

Yes. Confluent Stream Governance provides robust support for industry-standard data serialization formats through its integrated Schema Registry, giving you the flexibility to use the data formats that best suit your needs.

Schema Registry centrally stores and manages schemas for your Kafka topics, supporting the following formats: Apache Avro, Protobuf, JSON Schema.

This ensures that all data produced to a Kafka topic adheres to a predefined structure. When a producer sends a message, it serializes the data according to the registered schema, which typically converts it into a compact binary format and embeds a unique schema ID. When a consumer reads the message, it uses that ID to retrieve the correct schema from the registry and accurately deserialize the data back into a structured format. This process not only enforces data quality and consistency but also enables safe schema evolution over time.
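The schema ID embedding described above follows Confluent's wire format: a magic byte of 0, then the schema ID as a 4-byte big-endian integer, then the serialized payload. The stdlib-only sketch below shows just that framing. The dict standing in for Schema Registry and the function names are hypothetical, the payload serialization itself (Avro, Protobuf, JSON Schema) is left out, and Protobuf messages additionally carry message indexes after the schema ID, which this sketch ignores.

```python
import struct

MAGIC_BYTE = 0
# Header: 1-byte magic byte followed by a 4-byte big-endian schema ID.
HEADER = struct.Struct(">bI")


def frame(schema_id: int, payload: bytes) -> bytes:
    """Producer side: prefix an already-serialized payload with the header."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + payload


def unframe(message: bytes, registry: dict[int, str]) -> tuple[str, bytes]:
    """Consumer side: read the schema ID, look up the schema, return it
    together with the remaining payload. `registry` maps ID -> schema text."""
    if len(message) < HEADER.size:
        raise ValueError("message too short to contain a header")
    magic, schema_id = HEADER.unpack_from(message)
    if magic != MAGIC_BYTE:
        raise ValueError(f"unknown magic byte: {magic}")
    if schema_id not in registry:
        raise KeyError(f"schema ID {schema_id} is not registered")
    return registry[schema_id], message[HEADER.size:]
```

Because only a 5-byte header travels with each message instead of the full schema, messages stay compact while consumers can still resolve exactly the schema version the producer used.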

Can I use Stream Governance on-premises?

The complete Stream Governance suite, including the Data Portal and interactive Stream Lineage, is exclusive to Confluent Cloud. However, core components like Schema Registry are available as part of the self-managed Confluent Platform.

Is Stream Governance suitable for regulated industries?

Yes. Stream Governance is suitable for regulated industries and provides the tools you need to ensure data security and compliance with industry and regional regulations. Stream Lineage supports audits, while schema enforcement and data quality rules help ensure data integrity. Across the fully managed data streaming platform, Confluent Cloud also holds numerous industry certifications like PCI, HIPAA, and SOC 2.

How can I get started with Stream Governance?

You can get started with Stream Governance by signing up for a free trial of Confluent Cloud. New users receive $400 in cloud credits to apply to any of the data streaming, integration, governance, and stream processing capabilities on the data streaming platform, allowing you to try Stream Governance features firsthand.

We also recommend exploring key Stream Governance concepts in the Confluent documentation and following the Confluent Cloud Quick Start, which walks you through deploying your first cluster, producing and consuming messages, and inspecting them with Stream Lineage.