Connector

Apache Kafka and SAP Integration with the Kafka Connect ODP Source Connector

SAP is a German multinational software corporation that develops and markets enterprise software to manage business operations and customer relations. SAP is most famous for its enterprise resource planning (ERP) […]

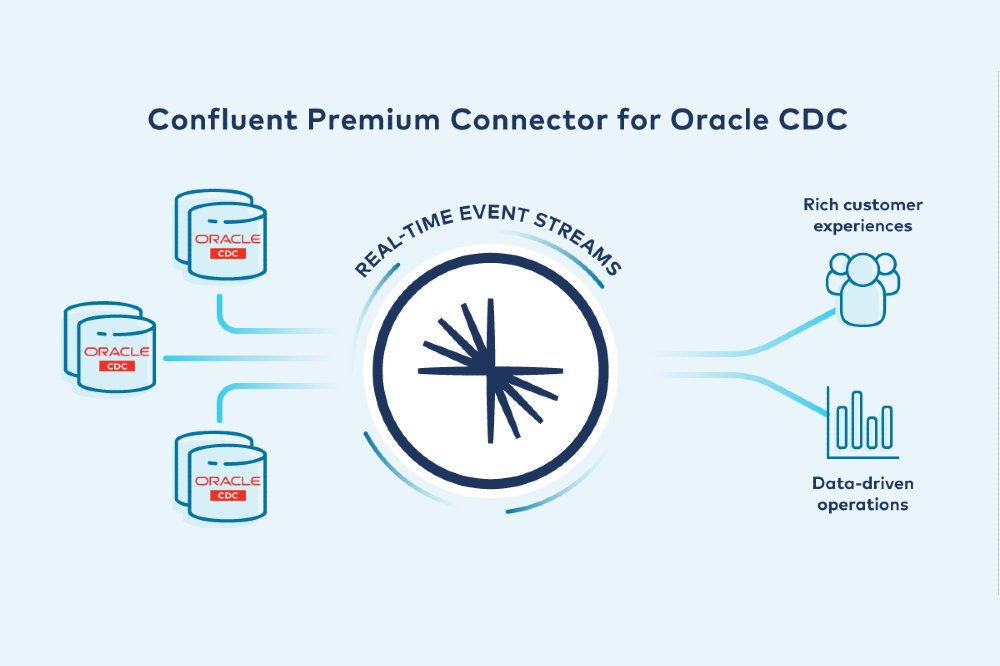

Oracle CDC Source Premium Connector is Now Generally Available

One of the most common relational database systems that connects to Apache Kafka® is Oracle, which often holds highly critical enterprise transaction workloads. While Oracle Database (DB) excels at many […]

How to Write a Connector for Kafka Connect – Deep Dive into Configuration Handling

Kafka Connect is part of Apache Kafka®, providing streaming integration of external systems in and out of Kafka. There are a large number of existing connectors, and you can also […]

How Apache Kafka Enables Podium to “Ship It and See What Happens”

This is a summary of Podium’s technological journey and an example of how our engineering team is tooling ourselves to scale well into the years to come. Here at Podium, we’re […]

Project Metamorphosis Month 8: Complete Apache Kafka in Confluent Cloud

This is the eighth and final month of Project Metamorphosis: an initiative that brings the best characteristics of modern cloud-native data systems to the Apache Kafka® ecosystem, served from Confluent […]

Getting Started with Kafka Connect for New Relic

It’s 3:00 am and PagerDuty keeps firing you alerts about your application being down. You need to figure out what the issue is, if it’s impacting users, and resolve it […]

Using the Fully Managed MongoDB Atlas Connector in a Secure Environment

Since the MongoDB Atlas source and sink became available in Confluent Cloud, we’ve received many questions around how to set up these connectors in a secure environment. By default, MongoDB […]

Streaming Data from Apache Kafka into Azure Data Explorer with Kafka Connect

Near-real-time insights have become a de facto requirement for Azure use cases involving scalable log analytics, time series analytics, and IoT/telemetry analytics. Azure Data Explorer (also called Kusto) is the […]

Enabling the Deployment of Event-Driven Architectures Everywhere Using Microsoft Azure and Confluent Cloud

Hybrid cloud architecture and accelerated cloud migrations are becoming the norm rather than the exception, as our increasingly digital world introduces certain challenges along the way, including modernizing existing application/architecture, […]

Creating a Serverless Environment for Testing Your Apache Kafka Applications

If you are taking your first steps with Apache Kafka®, looking at a test environment for your client application, or building a Kafka demo, there are two “easy button” paths […]

Streaming Heterogeneous Databases with Kafka Connect – The Easy Way

Building a Cloud ETL Pipeline on Confluent Cloud shows you how to build and deploy a data pipeline entirely in the cloud. However, not all databases can be in the […]

Analysing Changes with Debezium and Kafka Streams

Change Data Capture (CDC) is an excellent way to introduce streaming analytics into your existing database, and using Debezium enables you to send your change data through Apache Kafka®. Although […]

Data Privacy, Security, and Compliance for Apache Kafka

Why data privacy for Apache Kafka®? As companies seek to leverage all forms of data for competitive advantage, there is a growing regulatory and reputational risk that calls for the […]

Announcing the Elasticsearch Service Sink Connector for Apache Kafka in Confluent Cloud

We are excited to announce the preview release of the fully managed Elasticsearch Service Sink Connector in Confluent Cloud, our fully managed event streaming service based on Apache Kafka®. Our […]