
Announcing Tutorials for Apache Kafka

We’re excited to announce Tutorials for Apache Kafka®, a new area of our website for learning event streaming. Kafka Tutorials is a collection of common event streaming use cases, with each tutorial featuring an example scenario and several complete code solutions. It’s the fastest way to learn how to use Kafka with confidence.

We’re building this because we know that event streaming is a radically different way of thinking. It causes us to rethink the way we architect our programs and systems. Although it has heaps of benefits (immutability, information sharing, and fault tolerance, to name a few), it can be surprisingly difficult for newcomers to learn.

It doesn’t need to be that way.

For beginners, Kafka Tutorials reveals the “shape” of the problems that event streaming can solve. It makes it easier to recognize the kinds of problems that you might use event streaming for. Moreover, each tutorial reliably takes you from zero to working code, one step at a time.

For the experienced, it’s a crucial reference guide that makes your work easier. Easily look up how to join a stream and a table together when you’re rusty, or quickly recall how to merge discrete streams together. Over time, we’ll introduce more advanced material that makes use of the entire stack.

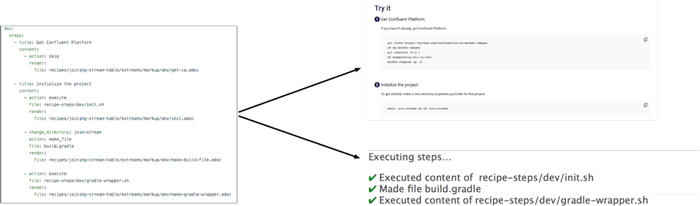

Although it’s early, we’re building Kafka Tutorials for the long term. That’s why we’ve engineered the site to use a unique flavor of literate programming. Each tutorial that you see on a page is backed by a single data structure. We’ve built programs that understand this shared structure: one to render the page, and another to test the content of the page. That means that when we make changes to a tutorial, they are automatically validated on a continuous integration system to ensure that we’re giving you actual code that works.
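To make the idea concrete, here is a minimal sketch in Python of one data structure feeding both a renderer and a tester. It is not the site's actual implementation: the names `Step`, `Tutorial`, `render`, and `check` are hypothetical, and the steps are plain Python snippets rather than real Kafka commands, so that the example runs anywhere.

```python
import contextlib
import io
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    """One tutorial step (hypothetical shape, for illustration only)."""
    title: str
    code: str             # source shown to the reader and run by the tester
    expected_stdout: str  # what the step should print when it works


@dataclass
class Tutorial:
    title: str
    steps: List[Step] = field(default_factory=list)


def render(tutorial: Tutorial) -> str:
    """Turn the shared structure into a Markdown page."""
    fence = "`" * 3
    parts = [f"# {tutorial.title}"]
    for number, step in enumerate(tutorial.steps, start=1):
        parts.append(f"## Step {number}: {step.title}")
        parts.append(f"{fence}python\n{step.code}\n{fence}")
    return "\n\n".join(parts)


def check(tutorial: Tutorial) -> List[str]:
    """Run every step and return a description of each mismatch."""
    failures = []
    for step in tutorial.steps:
        captured = io.StringIO()
        with contextlib.redirect_stdout(captured):
            exec(step.code, {})  # acceptable here: the content is trusted
        actual = captured.getvalue().strip()
        if actual != step.expected_stdout.strip():
            failures.append(
                f"{step.title}: expected {step.expected_stdout!r}, got {actual!r}"
            )
    return failures


# A toy tutorial: merging two in-memory "streams" of events.
EXAMPLE = Tutorial(
    title="Merge two streams",
    steps=[
        Step(
            title="Combine and order the events",
            code="print(sorted([3, 1] + [2]))",
            expected_stdout="[1, 2, 3]",
        ),
    ],
)

if __name__ == "__main__":
    print(render(EXAMPLE))
    # A continuous integration job could fail the build on any mismatch.
    assert check(EXAMPLE) == []
```

Because the page and the test are both derived from the same `Tutorial` value, an edit to a step's code cannot reach readers without also being exercised by `check`.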

Lastly, Kafka Tutorials is a community-driven site. Its source code is available on GitHub. If you have a great idea for a new tutorial or can make an existing one better, we’d love your contributions.

Happy learning!

Apache, Apache Kafka, Kafka and the Kafka logo are trademarks of the Apache Software Foundation. The Apache Software Foundation has no affiliation with and does not endorse the materials provided.
