Report: Why IT Leaders Are Prioritizing Data Streaming Platforms

In the age of AI, the value of data isn’t just in having it—it’s in how fast you can use it. That’s why real-time data is quickly becoming the new standard.

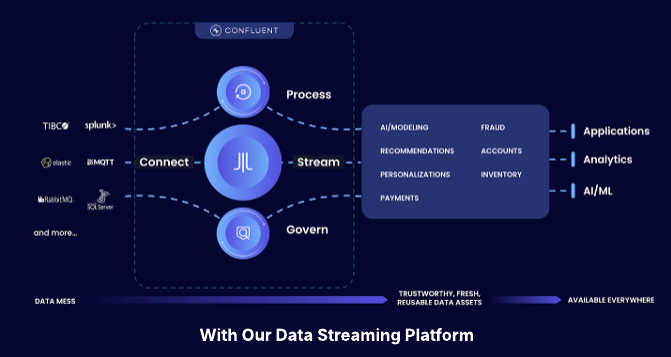

Data streaming platforms (DSPs) are at the center of this shift, helping simplify access to and reuse of this data, whenever and wherever it’s needed. Findings from the 2025 Data Streaming Report: Moving the Needle on AI Adoption, Speed to Market, and ROI underscore how DSPs are building the foundation for real-time, data-driven businesses that move faster, deliver smarter, and unlock new opportunities.

In fact, 89% of IT leaders see DSPs as key to achieving their data-related goals, and 64% cite higher investments in DSPs this year. It’s both validating and exciting to see data streaming platforms emerge as a business imperative that helps organizations solve some of their most persistent data challenges, including data silos and governance-related disconnects, ultimately helping businesses unlock the true value of their data and operate at the speed of modern business.

A complete data streaming platform provides the minimum set of capabilities needed to turn data into a truly reusable asset. It ensures that data is:

Inherently reusable: Ready to use across multiple teams for varied use cases

Discoverable: Easy for teams to find and use

Secure and trustworthy: Reinforced with strong governance to build confidence

In this blog post, I’ll share my perspective on the report’s findings. You can view the full report for a more in-depth look at how DSPs are powering the next wave of innovation by turning data into real-time value. The report also zooms in on how tech leaders already on board are outpacing the rest, with standout ROI (44% of IT leaders report an ROI of 5x) and competitive gains.

The Foundation for AI Success

AI systems in a business context are only as good as the data that powers them and how quickly and reliably data can be delivered. As GenAI goes mainstream and AI agents become more capable, the demand for continuous, real-time data access will only accelerate.

Data streaming platforms are emerging as critical infrastructure, with 87% of IT leaders saying DSPs will be increasingly used to feed AI systems with real-time, contextual, and trustworthy data. DSPs are well suited to helping organizations tackle the data challenges that constrain AI operationalization: 89% of IT leaders see DSPs easing AI adoption by directly addressing hurdles in data access, quality assurance, and governance.

Notably, the specific benefits attributed to DSPs—simplified data integration, data quality validation, data lineage tracking, and data governance—correspond directly with the core requirements for building trustworthy AI systems that deliver consistent business value.

Start With Governance and Build the Culture

One critical piece of advice for those just beginning with data streaming platforms: prioritize governance early. That’s because once you have consumers for your data—apps, services, teams leveraging it—retrofitting governance becomes extremely painful and expensive.

The good news is that more IT leaders are seeing value in shifting left in data processing and governance. The concept of shifting left in data integration means embedding data processing, quality checks, and governance closer to the data source. The ultimate goal of shifting left is to provide clean, reliable, secure, and timely access to important data, treating it as a first-class building block for services, analytics, and AI capabilities.
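To make the idea concrete, here is a minimal sketch of a shift-left quality check: records are validated where they are produced, so only clean data reaches downstream consumers and anything else is set aside with a reason. The field names, the rules, and the `validate` and `route` helpers are all hypothetical, chosen purely for illustration. This is plain Python, not a Confluent or Kafka API.

```python
from typing import Any

# Hypothetical contract for an "order" record: field name -> expected type.
REQUIRED_FIELDS = {"order_id": str, "amount": float, "currency": str}


def validate(record: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the record is clean."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"wrong type for {name}")
    # Illustrative business rule: amounts cannot be negative.
    if isinstance(record.get("amount"), float) and record["amount"] < 0:
        problems.append("amount must be non-negative")
    return problems


def route(records):
    """Split records at the source into publishable and quarantined ones."""
    clean, quarantined = [], []
    for record in records:
        problems = validate(record)
        if problems:
            quarantined.append((record, problems))
        else:
            clean.append(record)
    return clean, quarantined
```

In a real pipeline, the clean records would be published to the stream and the quarantined ones sent somewhere they can be inspected and fixed. The point is only that the check runs once, near the source, instead of being repeated by every consumer.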

In fact, the 2025 Data Streaming Report found that 93% of IT leaders cite at least four potential benefits of embracing a shift-left approach. Benefits include improved data quality and reduced data processing costs for operational and analytical workloads, reduced effort for downstream consumers, and reduced costs and risks.

It’s important to remember that technology is only part of the equation: real value comes when it’s paired with the right organizational mindset and a strategy for change. This entails rethinking how organizations work with data across teams, systems, and processes, and enabling a culture where data is treated as a shared, reusable asset. Without this shift, even the best technology will fall short.
