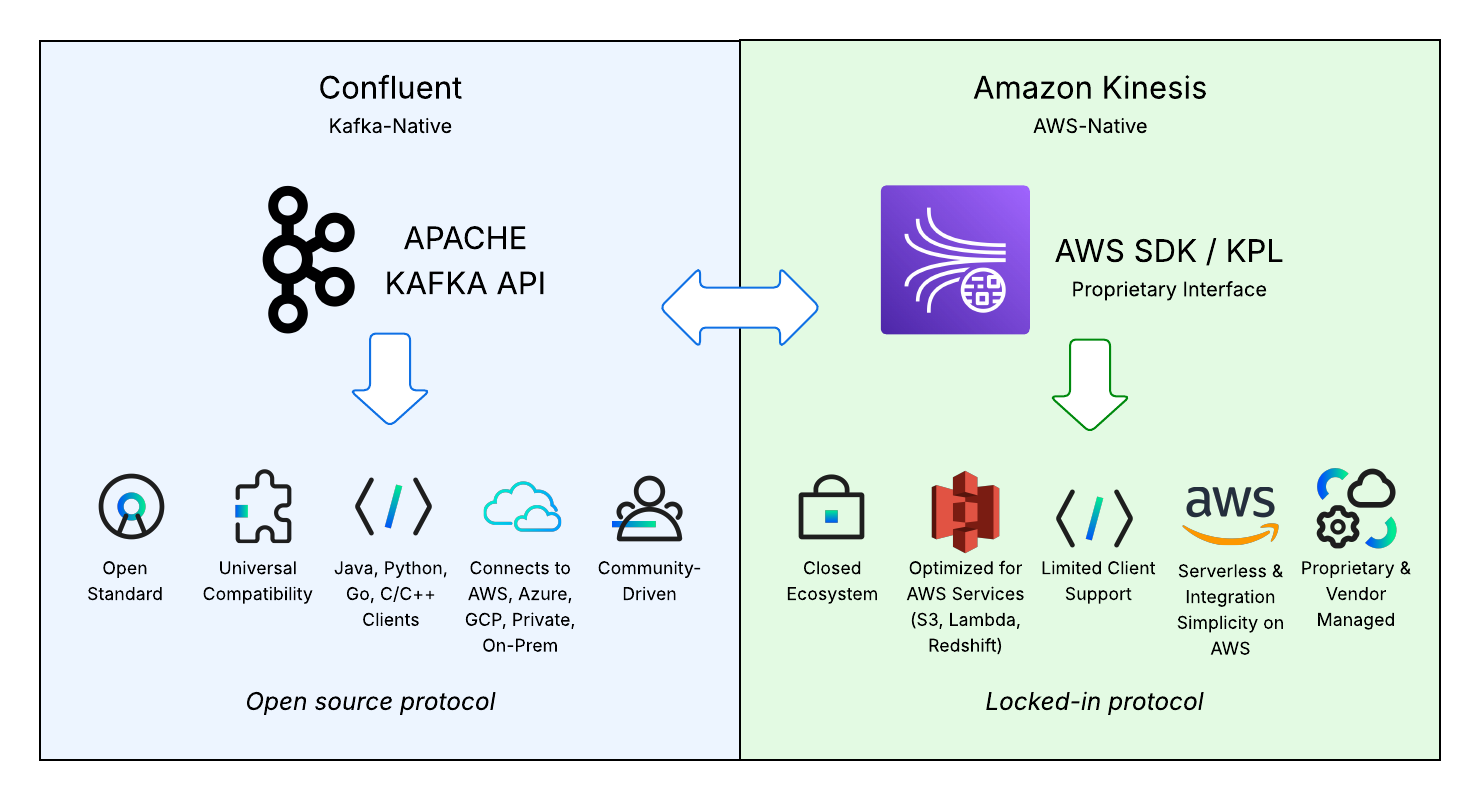

Confluent vs Amazon Kinesis: Choosing the Right Event Streaming Platform

In the modern cloud ecosystem, the decision between Confluent and Amazon Kinesis often defines the flexibility and reach of your data architecture. Both platforms are capable of handling high-throughput, real-time workloads, but they solve the problem of "data in motion" from different architectural perspectives.

Comparing them isn't about finding a "winner," but about identifying a data streaming technology that aligns with your specific operational needs. In this guide, we’ll walk you through a comparison of the core components, architecture, and ideal use cases to help you in your evaluation process.

A Quick Comparison: Confluent vs. Amazon Kinesis

- Amazon Kinesis is the AWS-native choice. It is a proprietary service deeply embedded in the AWS fabric, optimized for teams that need to pipe data rapidly between AWS services (like S3, Redshift, and Lambda) with zero infrastructure management.

- Confluent is the Kafka-native choice. Based on the open source industry standard Apache Kafka, it is re-engineered as a fully managed, cloud-native platform. It is optimized for teams building a "central nervous system" (i.e., using data streaming as a data integration layer for continuous information exchange, processing, and action across all systems) that requires data portability across clouds (e.g., AWS, Azure, Google Cloud), on-premises, and hybrid environments, as well as a broader ecosystem of pre-built integrations.

For many mature enterprises, this is not a binary choice. It is increasingly common to see cloud-native data pipelines that leverage both technologies where they shine best: Confluent acts as the central hub, aggregating business-critical data from across the enterprise (Salesforce, Oracle, mainframes) while Kinesis acts as a specialized spoke, receiving filtered streams from Confluent to trigger AWS-specific serverless functions or low-latency analytics within a single region.

When Should I Choose Kinesis over Confluent?

If your team is building a net-new application that lives 100% inside AWS, has no need to share data with other clouds or on-premise systems, and you want to minimize configuration, Kinesis is often the logical starting point. Its proprietary API allows for rapid "pipe-building" between AWS services with a pay-as-you-go model that appeals to strictly AWS-native projects.

How Do I Know if Confluent is a Better Fit?

If your architecture demands Kafka-native streaming compatibility to avoid vendor lock-in, or if you anticipate the need to scale across regions and clouds, Confluent is the strategic choice. It decouples your data from the underlying infrastructure provider, allowing you to run the same streaming applications on AWS today and Azure or Google Cloud tomorrow without rewriting code.

Confluent vs. Kinesis: What Are the Core Components of Each Platform?

To understand the architectural trade-offs, we must look at the specific services that make up each platform. While they share functional similarities (ingestion, processing, and sinking), their underlying technology differs significantly.

The Storage and Ingestion Layer

Confluent uses Kafka topics and partitions as a persistent log of events that can be retained and replayed indefinitely. It speaks the open Kafka protocol, meaning any Kafka-compatible client (Java, Python, C++, Go) can write to it natively.

Amazon Kinesis Data Streams (KDS) uses streams and shards for its proprietary ingest service. While highly durable, it has a retention limit that defaults to 24 hours and can be configured to a maximum of 365 days. It is accessed via the AWS SDK or the Kinesis Producer Library (KPL).
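To make the shard model concrete, here is a minimal sketch of how a partition key is routed to a shard. Kinesis hashes the partition key with MD5 into a 128-bit integer and picks the shard whose hash key range contains it. The helper names (`even_shard_ranges`, `shard_for_key`) are hypothetical, and the even split is an assumption that matches a freshly created stream; resharding changes the ranges.

```python
import hashlib

MAX_HASH_KEY = 2**128 - 1  # Kinesis hash keys are 128-bit integers


def even_shard_ranges(shard_count):
    """Split the hash key space into contiguous, near-equal ranges (assumed layout)."""
    size = (MAX_HASH_KEY + 1) // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * size
        # The last shard absorbs any remainder so the whole space is covered.
        end = MAX_HASH_KEY if i == shard_count - 1 else start + size - 1
        ranges.append((start, end))
    return ranges


def shard_for_key(partition_key, ranges):
    """Return the index of the shard whose range contains the key's MD5 hash."""
    digest = hashlib.md5(partition_key.encode("utf-8")).hexdigest()
    hash_key = int(digest, 16)
    for index, (start, end) in enumerate(ranges):
        if start <= hash_key <= end:
            return index
    raise ValueError("hash key outside all shard ranges")
```

Because the mapping is deterministic, every record with the same partition key lands on the same shard, which is what makes per-shard ordering useful. Kafka applies the same idea with keys and partitions, using a different hash function.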

Difference Between Kinesis Firehose and Kafka Connect

The ingestion layer is where the ecosystem difference between Confluent and Kinesis is most visible.

- Confluent offers 120+ pre-built Kafka connectors. These allow you to capture data changes (CDC) from Oracle, Salesforce, or Mongo, and sink them into Snowflake, Elastic, or Google BigQuery. It works bi-directionally across clouds.

- Amazon Data Firehose is optimized for one specific job: loading streaming data into AWS destinations (S3, Redshift, OpenSearch, Splunk) with zero code. It is excellent for “dumping” logs to a data lake but lacks the broad, bi-directional connectivity of Kafka Connect.

Think of Kinesis Firehose as a specialized courier service that only delivers packages to the AWS warehouse (e.g., Amazon S3, Redshift). It is unbeatable for simplicity if that is your only destination.

On the other hand, Kafka Connect is a universal logistics network. It can move data from anywhere (e.g., on-prem mainframe, Azure CosmosDB) to anywhere (e.g., AWS S3, Google Cloud Storage, MongoDB Atlas, Databricks, Snowflake). If you need to "unlock" data from legacy systems, Kafka Connect makes that possible.

The Processing Layer

Both platforms now rely on Apache Flink®, the industry standard for stateful stream processing, but the delivery models differ.

- Confluent Cloud for Apache Flink® is a truly serverless Flink implementation. You write Flink SQL statements directly against your streams. Flink on Confluent handles the resource provisioning instantly. It is tightly integrated with the Schema Registry to ensure data quality during transformation.

- Amazon Managed Service for Apache Flink (formerly KDA) is a provisioned service. You deploy Flink applications (JARs) or use Studio notebooks. While powerful, it often requires managing "Kinesis Processing Units" (KPUs) and VPC networking configurations manually.

Is KDA the Same as Flink?

Technically, no, but operationally, yes. Amazon Managed Service for Apache Flink (formerly KDA) is a hosted version of open source Flink that lives inside your AWS VPC. You are still responsible for managing, maintaining, and scaling your Flink clusters.

In contrast, Confluent Cloud for Apache Flink is a re-engineered, cloud-native Flink that abstracts away the infrastructure entirely—allowing you to filter, join, and aggregate streams using simple SQL without worrying about clusters or checkpoints.

How Do Confluent and Kinesis Handle Data Governance?

Confluent uses Stream Governance, a fully managed governance suite that includes Schema Registry, Stream Lineage, and Data Catalog. Out of the box, it provides a visual map of your data flow that shows exactly where data came from and who is consuming it.

Kinesis relies on the AWS Glue ecosystem. You connect Kinesis to the AWS Glue Schema Registry for validation and use AWS Glue Data Catalog or Amazon DataZone for metadata management. It is a powerful, composable approach but requires stitching multiple AWS services together.

How Do Confluent and Kinesis Compare on Architecture and Protocol Compatibility?

Kinesis uses a proprietary API. Code written for Kinesis (using the AWS SDK or Kinesis Producer Library) cannot be ported to another cloud or on-premise system without rewriting it. It is designed to be the most efficient way to move data within AWS, but it speaks a language only AWS understands.

Since Kafka is the de facto standard for event streaming, using Confluent ensures your architecture is portable. You can move a producer application from Confluent Cloud on AWS to a self-managed Kafka cluster on-premise with zero code changes—only a configuration update.
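The "configuration update only" claim can be sketched as follows. The application function stays identical and only the connection settings change. The property names are standard Kafka client settings as used by the `confluent-kafka` Python package; the endpoints, credentials, and the `RecordingProducer` stand-in are hypothetical placeholders so the sketch runs without a broker.

```python
def produce_order(producer, topic, order_id, payload):
    """Application code: unchanged no matter which Kafka cluster is targeted."""
    producer.produce(topic, key=order_id, value=payload)
    producer.flush()


# Connection settings for a managed cluster (placeholder values, not real ones).
CONFLUENT_CLOUD = {
    "bootstrap.servers": "<cluster-endpoint>:9092",
    "security.protocol": "SASL_SSL",
    "sasl.mechanisms": "PLAIN",
    "sasl.username": "<api-key>",
    "sasl.password": "<api-secret>",
}

# Connection settings for a self-managed cluster (hypothetical host name).
SELF_MANAGED = {
    "bootstrap.servers": "kafka-1.internal.example:9092",
    "security.protocol": "PLAINTEXT",
}


class RecordingProducer:
    """Stand-in with the same produce/flush shape as a real Kafka producer."""

    def __init__(self, config):
        self.config = config
        self.sent = []
        self.flushed = False

    def produce(self, topic, key=None, value=None):
        self.sent.append((topic, key, value))

    def flush(self):
        self.flushed = True
```

In a real deployment you would pass either dictionary to `confluent_kafka.Producer(config)` in place of `RecordingProducer`; `produce_order` itself would not change.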

Architecture Comparison Matrix:

| Feature | Confluent Cloud (Kafka-Native) | Amazon Kinesis (AWS-Native) |
| --- | --- | --- |
| Protocol Support | Apache Kafka Protocol (Universal standard) | Proprietary AWS API (Locked to AWS) |
| Multi-Cloud | Yes (AWS, Azure, GCP, On-Prem) | No (AWS Only) |
| Data Retention | Infinite (Tiered Storage moves cold data to object storage seamlessly) | Limited (Default 24 hrs; Max 365 days; expensive at scale) |
| Processing Engine | Flink SQL (Serverless, SQL-first) | Managed Flink (KDA) (Provisioned, Java/Scala-first) |
| Ordering Guarantee | Per Partition (Strict ordering) | Per Shard (Strict ordering) |

Retention and Storage

In Amazon Kinesis, storage is ephemeral. It is designed as a "moving pipe," not a database. Data is stored on expensive compute nodes. While you can extend retention up to 365 days, it becomes cost-prohibitive compared to standard storage, forcing you to dump data into S3 for long-term history.

In Confluent, storage can be infinite. Confluent separates compute from storage. Hot data lives on fast disks, while warm/cold data offloads automatically to object storage (like S3) while remaining queryable. This allows you to replay events from years ago without managing S3 buckets manually or paying for expensive provisioned storage.
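The retention difference shows up directly in the settings each platform accepts. The sketch below builds the parameters you would pass to the Kinesis `IncreaseStreamRetentionPeriod` API (for example through boto3's `increase_stream_retention_period`) and the Kafka topic property `retention.ms`, where `-1` disables time-based deletion. The helper names are hypothetical and nothing here calls a live service.

```python
KINESIS_MIN_HOURS = 24        # default retention
KINESIS_MAX_HOURS = 365 * 24  # 365-day ceiling, i.e. 8760 hours


def kinesis_retention_request(stream_name, days):
    """Build the request parameters for a Kinesis retention change."""
    hours = days * 24
    if not KINESIS_MIN_HOURS <= hours <= KINESIS_MAX_HOURS:
        raise ValueError("Kinesis retention must be between 1 and 365 days")
    return {"StreamName": stream_name, "RetentionPeriodHours": hours}


def kafka_topic_retention(days=None):
    """Build the Kafka topic config; days=None means keep data indefinitely."""
    if days is None:
        return {"retention.ms": "-1"}
    return {"retention.ms": str(days * 24 * 60 * 60 * 1000)}
```

For instance, `kinesis_retention_request("clicks", 7)` yields a 168-hour retention request, while `kafka_topic_retention()` yields the unlimited setting that has no Kinesis equivalent.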

Stream Processing Engines

Both platforms leverage Apache Flink, the gold standard for stream processing, but the developer experience differs:

- Amazon Managed Service for Apache Flink: You upload a JAR file (Java/Scala application). You manage the application state, checkpoints, and scaling parameters (KPUs). It is powerful but "heavy" for simple tasks.

- Confluent Cloud for Apache Flink: You write SQL. The platform manages the Flink cluster entirely. You can filter a stream, join it with a database table, and aggregate results using standard SQL syntax in the browser, making real-time processing accessible to data analysts, not just Java engineers.

When Should You Use Confluent?

While Kinesis is an excellent tactician for AWS-specific tasks, Confluent is the strategist for your entire data estate. You choose Confluent when your requirements outgrow a single cloud provider or when "good enough" data piping evolves into a need for a governed, mission-critical central nervous system.

The Multi-Cloud and Hybrid Reality:

Is Confluent Kafka-compatible? Yes: it is built on Apache Kafka and speaks the Kafka protocol natively. If your organization operates in a "Cloud + On-Prem" reality (e.g., a bank with legacy mainframes and a mobile app on AWS), Confluent is the bridge. It allows you to implement multi-cloud stream processing where data produced in an on-premise Oracle database can be consumed instantly by a Google Cloud AI service or an Azure analytics dashboard. Kinesis cannot cross these boundaries without significant custom engineering.

Governance, Security and Control:

Schema Registry: Confluent ensures that if a producer changes a data format, it doesn't crash the consumer applications.

Stream Lineage: You get a Google Maps-style view of your data flow, showing exactly where data originated and where it is going.

Security: Confluent extends beyond basic IAM to provide granular governance and RBAC (Role-Based Access Control) down to the topic and field level, which is often a hard requirement for GDPR, HIPAA, and PCI compliance.
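The Schema Registry point above rests on compatibility checks that run before a new schema version is accepted. The sketch below is a deliberately simplified illustration of the backward-compatibility idea (a consumer on the new schema can still read data written with the old one). It is not the Schema Registry implementation: the `is_backward_compatible` helper and the dictionary layout are hypothetical, and real Avro rules also allow certain type promotions that this version rejects.

```python
def is_backward_compatible(old_fields, new_fields):
    """Check whether a reader using new_fields can read data written with old_fields.

    Each argument maps a field name to a dict with a "type" key and an
    optional "default" key.
    """
    for name, spec in new_fields.items():
        if name not in old_fields:
            # A newly added field is only readable from old data if it has a default.
            if "default" not in spec:
                return False
        elif old_fields[name]["type"] != spec["type"]:
            # Simplification: treat any type change as incompatible.
            return False
    # Fields dropped from the new schema are simply ignored by the reader.
    return True
```

Adding an optional `coupon` field with a default passes this check, whereas adding it without a default fails, which is the kind of change a registry would reject before it reaches consumers.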

Unified real-time and batch pipeline:

Stream Processing: With serverless Flink, you can build applications that react to fraud or customer clicks in milliseconds.

Data Lake Integration: With Tableflow, Confluent can materialize Kafka topics directly as Apache Iceberg tables. This means your Snowflake or Athena data warehouse can query your streaming data as if it were a static table, zero ETL required. This Apache Iceberg + Kafka integration is a major differentiator for teams building modern data lakehouses.

When Should You Use Amazon Kinesis?

You should choose Amazon Kinesis when your entire data lifecycle, from creation to consumption, lives comfortably within the "Walled Garden" of AWS. It is the tactical choice for teams that prioritize speed of implementation over architectural portability.

If your goal is to get data from an EC2 instance into an S3 bucket or Redshift table with zero code and zero maintenance, Kinesis is unmatched.

The “Zero-Ops” Ingestion Engine

For streaming data into an S3 bucket, it is the best tool for the job. Using Amazon Data Firehose (formerly Amazon Kinesis Data Firehose), you can configure a delivery stream that automatically batches, compresses, and encrypts streaming data before landing it in S3. This requires no custom code, just configuration, which makes it ideal for log aggregation and data lake feeding where complex event processing isn't required.
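To show what "batches and compresses" saves you from writing, here is a minimal sketch of the buffering logic you would otherwise maintain yourself: records accumulate until a size or age threshold is reached, then are gzip-compressed and handed to a delivery callback. The `BufferedBatcher` class and its parameters are hypothetical, encryption is omitted, and the age check only runs when a record arrives; this illustrates the concept rather than how Firehose works internally.

```python
import gzip
import time


class BufferedBatcher:
    """Collect records and deliver them as one gzip-compressed batch."""

    def __init__(self, max_bytes, max_seconds, deliver, clock=time.monotonic):
        self.max_bytes = max_bytes
        self.max_seconds = max_seconds
        self.deliver = deliver  # callable that receives the compressed bytes
        self.clock = clock
        self._records = []
        self._size = 0
        self._opened_at = None

    def add(self, record):
        """Buffer one record (bytes) and flush if a threshold is crossed."""
        if not self._records:
            self._opened_at = self.clock()
        self._records.append(record)
        self._size += len(record)
        too_big = self._size >= self.max_bytes
        too_old = self.clock() - self._opened_at >= self.max_seconds
        if too_big or too_old:
            self.flush()

    def flush(self):
        """Compress the buffered records as newline-delimited data and deliver."""
        if not self._records:
            return
        body = b"\n".join(self._records) + b"\n"
        self.deliver(gzip.compress(body))
        self._records = []
        self._size = 0
        self._opened_at = None
```

With a managed delivery stream, the equivalent behavior is set through configuration rather than code like this.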

Serverless Event Triggers

Kinesis Data Streams integrates with AWS Lambda natively. You don't need to write a poller or manage a consumer group: you map the stream to the function, and AWS polls the shards and handles the batching and retries for you. This enables true serverless streaming architectures where you pay only for the compute used to process each event, scaling to zero when traffic stops.
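A function wired to a stream receives batches of records whose payloads are base64-encoded under `Records[].kinesis.data`. The handler below is a minimal sketch that assumes each payload is a JSON document; the returned summary exists only to make the example easy to inspect and is not something the Lambda integration requires.

```python
import base64
import json


def handler(event, context):
    """Decode each Kinesis record in the batch and collect the parsed payloads."""
    items = []
    for record in event["Records"]:
        kinesis = record["kinesis"]
        raw = base64.b64decode(kinesis["data"])  # payload arrives base64-encoded
        items.append(
            {
                "partition_key": kinesis["partitionKey"],
                "sequence_number": kinesis["sequenceNumber"],
                "payload": json.loads(raw),  # assumption: producers send JSON
            }
        )
    return {"processed": len(items), "items": items}
```

If the handler raises an exception, Lambda retries the batch, which is why downstream writes are usually made idempotent.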

Real-Time Analytics with AWS

If you need to calculate a rolling average of website clicks and store the result in DynamoDB, Amazon Managed Service for Apache Flink (formerly KDA) allows you to do this without leaving the AWS console. While Confluent offers similar capabilities, the Kinesis ecosystem allows you to bill everything through your existing AWS credits and manage permissions via standard AWS IAM roles.
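The rolling average mentioned above is a sliding-window aggregation. As a plain-Python stand-in for what a Flink job would compute (this is not Flink API code), the sketch below keeps only the events inside the window and updates the average as each event arrives. The `RollingAverage` class is hypothetical and assumes events arrive in timestamp order.

```python
from collections import deque


class RollingAverage:
    """Average of values whose timestamps fall within the last window_seconds."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, value) pairs, oldest first
        self._total = 0.0

    def add(self, timestamp, value):
        """Record a new event and return the average over the current window."""
        self._events.append((timestamp, value))
        self._total += value
        cutoff = timestamp - self.window_seconds
        # Evict events that have aged out of the window.
        while self._events and self._events[0][0] <= cutoff:
            _, old_value = self._events.popleft()
            self._total -= old_value
        return self._total / len(self._events)
```

A stream processor adds what this sketch lacks: fault-tolerant state, handling of out-of-order events, and parallelism across shards or partitions.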

The “Better Together” Strategy

It is rarely a zero-sum game. The most mature cloud architectures leverage a Kafka + Kinesis coexistence pattern:

- Core Nervous System (Confluent): Handles high-value business events (Orders, Inventory) that need to be shared across clouds and on-prem systems.

- Edge Distribution (Kinesis): Confluent "sinks" specific filtered streams into Kinesis for AWS-native teams to consume via Lambda or Firehose.

Can You Use Confluent and Kinesis Together?

This is one of the most common architectural patterns for enterprises running on AWS. Rather than viewing them as competitors, successful teams often treat them as complementary tools in a broader data ecosystem.

The “Better Together” Pattern

The most robust method is using Kafka Connect integrations. Confluent offers pre-built, fully managed connectors that act as a bridge between the two worlds:

- Amazon Kinesis Source Connector: Pulls data from Kinesis streams into Confluent topics. This is ideal when you have AWS-native producers (like IoT Core or CloudWatch Logs) that need to be processed by a central fraud detection engine in Confluent.

- Amazon Kinesis Sink Connector: Pushes data from Confluent topics into Kinesis. This is perfect for "fan-out" scenarios where you need to trigger serverless AWS Lambda functions that are already wired up to listen to a Kinesis stream.

Scenario: The “Ingestion & Process” Split

A popular pattern involves leveraging the strengths of each platform at different stages of the pipeline:

- Ingest (Kinesis): Use Kinesis Data Streams at the edge to capture massive volumes of clickstream data from AWS-hosted web apps. It requires zero configuration and scales automatically.

- Process (Confluent): Sink that raw data into Confluent Cloud. Here, you use Flink SQL to join the clickstreams with customer inventory data (from an on-prem database). You apply governance, schema validation, and filter out noise.

- Archive (Firehose): Send the refined, high-value data from Confluent (available through AWS Marketplace) back to Kinesis Data Streams, or directly to Amazon Data Firehose, to be archived in Amazon S3 for long-term compliance storage.

While Confluent has its own S3 sink connector, many teams prefer Amazon Data Firehose because it handles batching, compression, and encryption for S3 natively. You can pipe data from Confluent to Firehose to leverage this existing AWS plumbing without writing custom S3 handling code.

Summary

- Choose Amazon Kinesis if: You are building a net-new application 100% on AWS, your data does not need to leave the AWS ecosystem, and you prioritize "zero-ops" serverless simplicity over portability.

- Choose Confluent if: You need a streaming data layer that connects AWS to on-premise systems or other clouds, want to use the open standard Kafka protocol to avoid vendor lock-in, need to process data with Flink SQL, or require strict enterprise governance.

Confluent vs. Kinesis – FAQs

What’s the difference between Amazon Kinesis and Apache Kafka?

Amazon Kinesis is a proprietary, AWS-native streaming service designed for rapid integration with other AWS tools (Lambda, Redshift) with zero infrastructure management. Apache Kafka is an open source distributed event streaming platform that has broader ecosystem compatibility, longer retention, and multi-cloud portability.

Is Confluent compatible with Kinesis?

Yes, Confluent and Kinesis are compatible and are often used together. Confluent offers fully managed Kinesis Source and Sink Connectors. You can ingest data from Kinesis Data Streams at the edge (e.g., IoT data landing in AWS) and pipe it into Confluent for central processing, or sink processed data from Confluent back into Kinesis Firehose for delivery to S3.

Can I use Kafka clients directly with Kinesis?

No, you cannot point a standard Kafka producer or consumer client (Java, Python, Go) at a Kinesis stream as Kinesis uses a proprietary API. You must use the AWS SDK or the Kinesis Producer Library (KPL). To bridge the two, you need a Kafka connector, not just a client configuration change.

Which platform offers better support for schema management?

Confluent offers a more integrated experience. Confluent Schema Registry is the industry standard for enforcing data quality (Avro, Protobuf, JSON) and preventing "bad data" from breaking downstream consumers. AWS offers the AWS Glue Schema Registry, which integrates with Kinesis, but it requires stitching together separate services (Glue + Kinesis) rather than having governance built into the platform itself.

Does Kinesis support exactly-once semantics?

No, Kinesis does not support exactly-once semantics natively in the stream itself. Kinesis Data Streams supports "at-least-once" delivery. Achieving "exactly-once" processing requires an external processing engine like Amazon Managed Service for Apache Flink to handle the deduplication and state management. In contrast, both open source Apache Kafka and Confluent's cloud-native Kafka engine support transactional writes natively at the protocol level.
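With at-least-once delivery, the same event can reach a consumer more than once, so the deduplication mentioned above has to happen somewhere. The sketch below shows the basic idea with an idempotent consumer keyed on an application-level event ID. The `IdempotentProcessor` class is hypothetical, and the in-memory set is an assumption made for brevity; a real system would keep this state in a durable store or in the stream processor's managed state.

```python
class IdempotentProcessor:
    """Apply each event at most once, even if it is delivered several times."""

    def __init__(self, apply):
        self.apply = apply      # callable that performs the side effect
        self._seen_ids = set()  # IDs of events already applied (in memory only)

    def handle(self, event_id, payload):
        """Return True if the event was applied, False if it was a duplicate."""
        if event_id in self._seen_ids:
            return False
        self.apply(payload)
        self._seen_ids.add(event_id)
        return True
```

Kafka transactions move this guarantee into the protocol for Kafka-to-Kafka pipelines, so application code does not need its own duplicate tracking in that case.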

Can I run Flink with both Confluent and Kinesis?

Yes, but the models differ.

- AWS offers Amazon Managed Service for Apache Flink (formerly Kinesis Data Analytics), which typically requires you to manage provisioned clusters and deploy Java/Scala JARs.

- Confluent Cloud for Apache Flink offers a serverless, SQL-first experience where you can write SQL queries directly against your streams without managing clusters or versions.

Which tool works better across multiple clouds?

Confluent is the clear choice for multicloud architectures. It runs on AWS, Azure, and Google Cloud, allowing you to replicate data across providers (e.g., active-active disaster recovery) with Cluster Linking. Kinesis is strictly available only within AWS regions.

How does Firehose compare to Kafka Connect?

Firehose is a specialized delivery tool optimized for loading data into AWS destinations (S3, Redshift, OpenSearch) with zero code. Kafka Connect is a universal integration framework that can connect to almost anything (Salesforce, MongoDB, Snowflake, Mainframes), both within and outside of AWS.

What’s the retention period for Kinesis vs. Confluent?

In Kinesis, data retention defaults to 24 hours and can be extended up to 365 days, but costs increase significantly as you store more data.

Confluent supports infinite retention. By offloading older data to object storage (Tiered Storage), you can keep years of data in a topic for event replay without the high cost of provisioned disk storage.

Is KDA the same as Flink on Confluent Cloud?

Kinesis Data Analytics (KDA) is the former name of Amazon Managed Service for Apache Flink on AWS. It is a partially managed Flink service available only on AWS, which is not the same as Confluent Cloud for Apache Flink, a serverless SQL engine tightly integrated with Confluent's complete data streaming platform, which can span multicloud architectures. Notably, AWS's legacy KDA for SQL Applications is being discontinued (end of life January 2026).