

Spend Less Time on Cleanup and More on Engineering

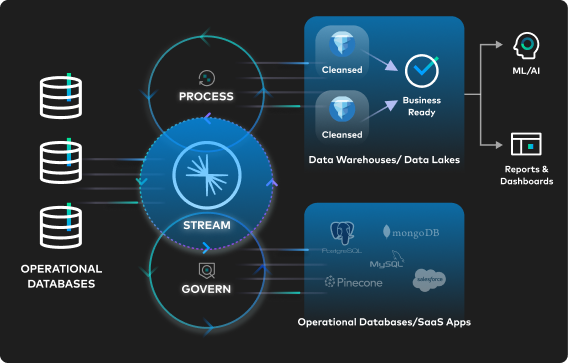

Want to get rid of data pipelines that spread unnecessary copies of data and depend on manual fixes? Use a data streaming platform to process and govern data at the source, as soon as it is generated.

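Processing and governing data early can reduce data quality issues by up to 60%, cut compute costs by 30%, and maximize engineering productivity and data warehouse ROI. Explore more resources to learn how to get started, or download Shift Left: Unifying Operations and Analytics With Data Products.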

Why Shift Processing & Governance Left?

In data integration, “shifting left” refers to an approach where data processing and governance are performed closer to the source of data generation. By cleaning and processing the data earlier in the data lifecycle, you can build data products that give all downstream consumers—including cloud data warehouses, data lakes, and data lakehouses—a single source of well-defined and well-formatted data.
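To make the idea concrete, here is a minimal Python sketch, not Confluent's implementation: a hypothetical `clean_at_source` step validates, normalizes, and de-duplicates raw events once, and two hypothetical consumers (`load_to_warehouse` and `load_to_lake`) reuse the same cleaned records instead of each running its own cleanup job. The `Order` record shape, the field names, and both consumer functions are illustrative assumptions; in practice this logic would more likely run in a stream processor such as Apache Flink over Kafka topics than over an in-memory list.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Order:
    """Hypothetical well-defined record shape shared by every downstream consumer."""
    order_id: str
    customer_id: str
    amount_cents: int


def clean_at_source(raw_events: Iterable[dict]) -> Iterator[Order]:
    """Validate, normalize, and de-duplicate raw events once, upstream (hypothetical helper)."""
    seen_ids: set[str] = set()
    for event in raw_events:
        order_id = str(event.get("order_id") or "").strip()
        customer_id = str(event.get("customer_id") or "").strip().lower()
        # Drop records missing required keys instead of leaving the fix to each consumer.
        if not order_id or not customer_id:
            continue
        try:
            # Normalize the amount to integer cents; reject unparseable or non-finite values.
            amount_cents = round(float(event["amount"]) * 100)
        except (KeyError, TypeError, ValueError, OverflowError):
            continue
        # De-duplicate by key a single time, before the data fans out.
        if order_id in seen_ids:
            continue
        seen_ids.add(order_id)
        yield Order(order_id, customer_id, amount_cents)


def load_to_warehouse(orders: list[Order]) -> dict[str, int]:
    """Hypothetical warehouse consumer: total spend per customer."""
    totals: dict[str, int] = {}
    for order in orders:
        totals[order.customer_id] = totals.get(order.customer_id, 0) + order.amount_cents
    return totals


def load_to_lake(orders: list[Order]) -> list[tuple[str, int]]:
    """Hypothetical lake consumer: flat rows built from the same cleaned records."""
    return [(order.order_id, order.amount_cents) for order in orders]


raw = [
    {"order_id": "A1", "customer_id": " Cust-1 ", "amount": "12.50"},
    {"order_id": "A1", "customer_id": "cust-1", "amount": "12.50"},        # duplicate
    {"order_id": "A2", "customer_id": "cust-2", "amount": "not-a-number"},  # invalid amount
    {"order_id": "A3", "customer_id": "CUST-1", "amount": 7},
    {"customer_id": "cust-3", "amount": "1.00"},                           # missing order_id
]

# Clean once, then let every consumer reuse the same data product.
data_product = list(clean_at_source(raw))
print(load_to_warehouse(data_product))  # {'cust-1': 1950}
print(load_to_lake(data_product))       # [('A1', 1250), ('A3', 700)]
```

The design choice the sketch illustrates is that cleanup happens exactly once, before fan-out, so both consumers see identical, already-validated records.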

Deliver Reusable, Trustworthy Data Products

Process and govern data once at the source, then reuse it in multiple contexts. Use Apache Flink® to shape data on the fly.

Power Analytics With the Freshest, High-Quality Data

Keep high-fidelity data flowing continuously into your lakehouse and evolving seamlessly with Tableflow.

Maximize the ROI of Data Warehouses and Data Lakes

Reduce data quality issues by 40-60% and free up your data engineering team to work on more strategic projects.

“Confluent helped us shift left on our data—giving teams full ownership of their data from source to output. Now it’s clean, validated, and production-ready upstream, reducing rework and accelerating delivery.”

"Confluent Tableflow simplifies our data architecture, turning Kafka topics into trusted, analytics-ready tables in just a few clicks. We can now ensure a reliable data foundation for real-time customer insights and AI innovations."

Zazzle implemented Confluent’s fully managed Flink offering to transform its largest data pipeline. By moving stream processing earlier in the pipeline, before data is written to Google BigQuery, Zazzle reduced storage and compute costs while delivering more relevant product recommendations, directly impacting revenue.

“We have 40,000+ ETL jobs today. It’s chaos…shifting data governance left would be transformational for our organization.”

“[Data cleaning] is a pricey way of pushing it down to the Delta Lake. De-duplication within Confluent is a cheaper way of doing it. We can only do it once.”

“I love the vision of [shift-left analytics]. This is how we would make datasets more discoverable. I knew that Confluent had an integration with Alation, but it’s awesome to hear that [with Data Portal] you have other ways of enabling those capabilities.”

Shift-Left Analytics Trends

How to Build Reusable Data Products With Shift Left

Watch a deep dive into shift-left analytics to learn about a simple but powerful data integration approach that is helping modern enterprises innovate.

Show Me How: Shift Left Processing From Data Warehouses

Watch now

How to Optimize Data Ingestion Into Lakehouses & Warehouses

Get the guide

Tableflow Is GA: Unifying Apache Kafka® Topics & Apache Iceberg™ Tables

Read blog

Conquer Your Data Mess With Universal Data Products

Download ebook

Accelerate Your Data Streaming Journey With Partners

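Confluent works with a broad ecosystem of partners so that organizations can easily build, access, discover, and share high-quality data products. Learn how innovative organizations such as Notion, Citizens Bank, and DISH Wireless use a data streaming platform together with native cloud, software, and service integrations to transform their data processing and governance and maximize the value of their data.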

Notion Enriches Data Instantly to Power Generative AI Features

Citizens Bank Improves Processing Speeds by 50% for CX & More

DISH Wireless Creates Reusable Data Products for Industry 4.0

Maximize Your Data Warehouses in 4 Steps

Maximize your data warehouses and data lakehouses by feeding them fresh, trustworthy data. It all starts with a complete data streaming platform that lets you stream, connect, govern, and process your data (and materialize it in open table formats) no matter where it lives.

Learn how Confluent can help your organization shift left and maximize the value of your data warehouse and data lake workloads. Contact us today to start adopting shift-left architectures and accelerate your analytics and AI use cases.