
ENTERPRISE-GRADE STREAM GOVERNANCE

Discover, understand, and trust your data

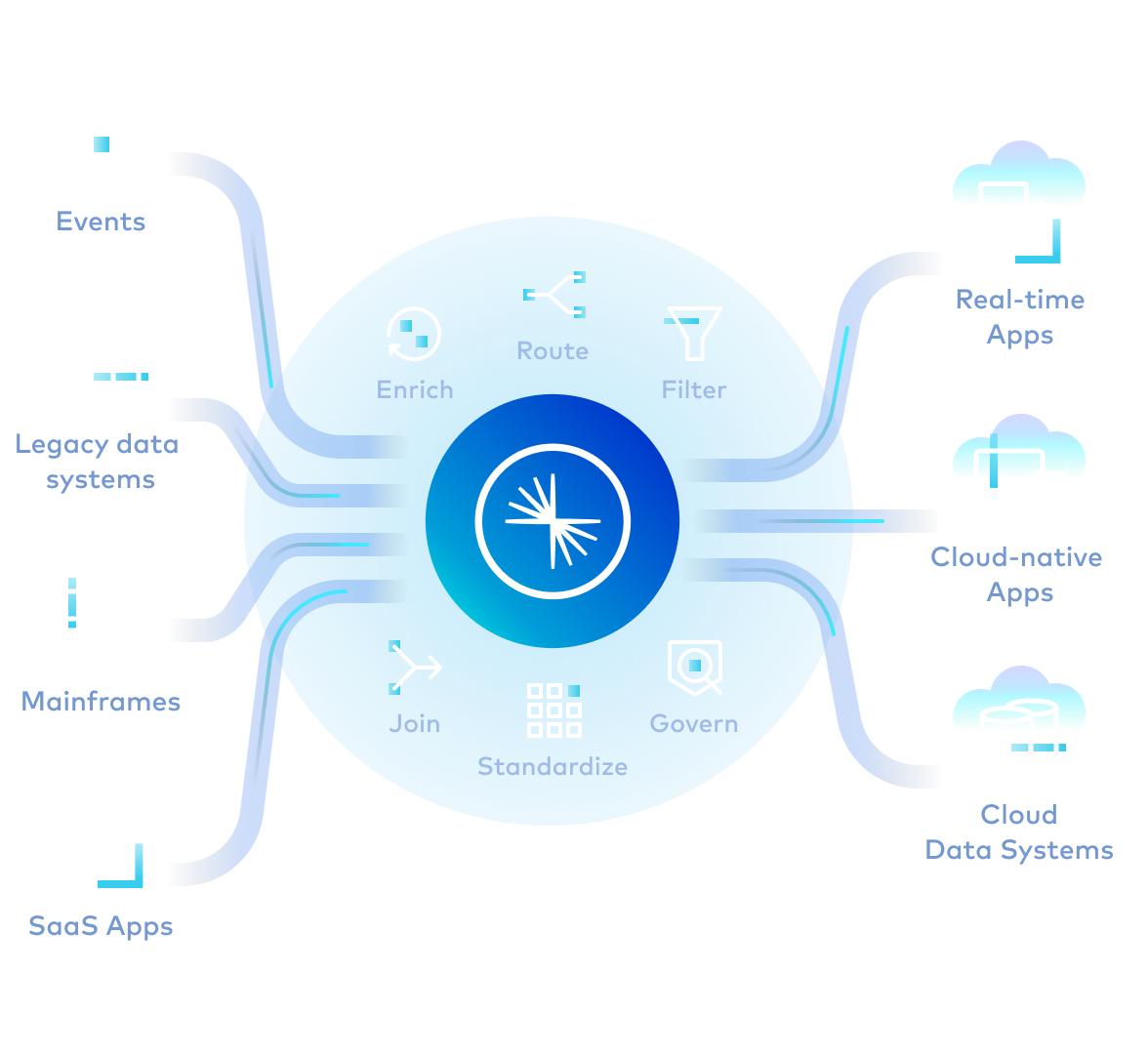

Stream Governance provides visibility into, and control over, the structure, quality, and flow of data across applications, analytics, and AI.

Acertus reduces TCO and ensures data quality.

Vimeo curates data products in hours, not days or weeks.

SecurityScorecard protects its most critical data.

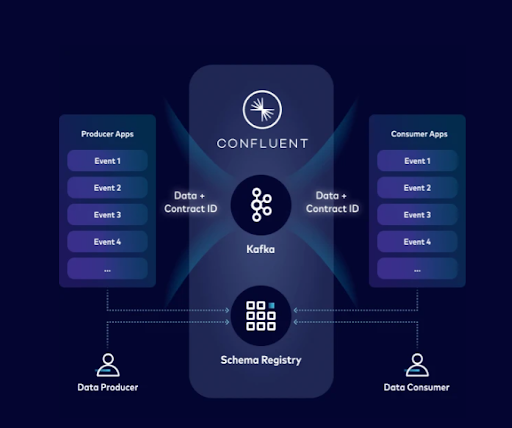

__Stream Governance unifies data quality, discovery, and lineage.__ Define data contracts once and enforce them every time data is created, not after it has been batch processed, so you can build data products in real time.

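Enforce data quality at the source, not after batch processing

__Stream Quality prevents bad data from entering your data streams__.

Manage and enforce data contracts (schemas, metadata, and quality rules) between producers and consumers within your private network.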

Schema Registry

Define and enforce universal standards for all of your data streaming topics in a versioned repository.
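To make the idea of a versioned repository concrete, here is a minimal in-memory sketch, assuming the subject/version/ID model that Confluent Schema Registry uses: each subject keeps an ordered list of schema versions, and each distinct schema gets one global ID. The class and method names (`SchemaRegistrySketch`, `register`, `get_version`, `get_by_id`) are hypothetical and are not the Schema Registry REST API.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaRegistrySketch:
    """Hypothetical in-memory model of a versioned schema repository."""

    _ids_by_schema: dict = field(default_factory=dict)  # schema text -> global ID
    _versions: dict = field(default_factory=dict)       # subject -> [schema text, ...]

    def register(self, subject: str, schema: str) -> int:
        """Register a schema under a subject and return its global ID."""
        # A schema seen before (under any subject) keeps its existing ID.
        schema_id = self._ids_by_schema.setdefault(schema, len(self._ids_by_schema) + 1)
        versions = self._versions.setdefault(subject, [])
        if schema not in versions:  # re-registering the same schema adds no new version
            versions.append(schema)
        return schema_id

    def get_version(self, subject: str, version: int) -> str:
        """Return the schema text for a 1-based version number of a subject."""
        return self._versions[subject][version - 1]

    def latest_version(self, subject: str) -> int:
        return len(self._versions[subject])

    def get_by_id(self, schema_id: int) -> str:
        """Look a schema up by global ID, as a consumer would when decoding."""
        for schema, sid in self._ids_by_schema.items():
            if sid == schema_id:
                return schema
        raise KeyError(schema_id)


registry = SchemaRegistrySketch()
registry.register("orders-value", '{"type": "record", "name": "Order", "fields": []}')
```

Keeping versions per subject while IDs are global is what lets a consumer resolve any message by ID alone, while producers still see an ordered history for each topic.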

Data contracts

Apply semantic rules and business logic to your data streams.
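A data contract rule can be thought of as a predicate that every record must satisfy before it is published. The sketch below assumes plain Python predicates and a hypothetical `produce_if_valid` helper that diverts failing records to a dead-letter list. Confluent's own data quality rules are written in expression languages such as CEL, so treat this only as an illustration of the idea, not of the product's rule syntax.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Rule:
    """A hypothetical data-quality rule: a name plus a predicate over a record."""
    name: str
    check: Callable[[dict], bool]


def _is_iso_currency(record: dict) -> bool:
    code = record.get("currency")
    return isinstance(code, str) and len(code) == 3 and code.isalpha() and code.isupper()


# Hypothetical business rules for an "order" record.
ORDER_RULES = [
    Rule("amount_positive", lambda r: r.get("amount", 0) > 0),
    Rule("currency_is_iso_code", _is_iso_currency),
]


def violations(record: dict, rules: List[Rule]) -> List[str]:
    """Return the names of every rule the record fails (empty list means valid)."""
    return [rule.name for rule in rules if not rule.check(record)]


def produce_if_valid(record: dict, rules: List[Rule], sink: list, dead_letters: list) -> bool:
    """Publish valid records; route invalid ones aside together with the failed rule names."""
    failed = violations(record, rules)
    if failed:
        dead_letters.append((record, failed))
    else:
        sink.append(record)
    return not failed
```

Checking rules at produce time, rather than in a later batch job, is what keeps bad records out of the stream for every downstream consumer at once.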

Schema Validation

Brokers verify that messages use a valid schema when they are produced to a specific topic.
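The decision a broker makes here can be modeled roughly as follows, assuming messages use Confluent's wire format (a zero magic byte followed by a 4-byte big-endian schema ID) and that each topic maps to a set of schema IDs registered for it. The `accept` function and the `allowed_ids` mapping are hypothetical; real validation runs inside the broker and is enabled per topic.

```python
import struct
from typing import Dict, Optional, Set

MAGIC_BYTE = 0  # first byte of a message in Confluent's wire format


def extract_schema_id(message: bytes) -> Optional[int]:
    """Return the schema ID from a framed message, or None if the framing is invalid."""
    if len(message) < 5 or message[0] != MAGIC_BYTE:
        return None
    (schema_id,) = struct.unpack(">I", message[1:5])  # 4-byte big-endian integer
    return schema_id


def accept(topic: str, message: bytes, allowed_ids: Dict[str, Set[int]]) -> bool:
    """Hypothetical broker-side check: accept only messages whose schema ID is valid for the topic."""
    schema_id = extract_schema_id(message)
    return schema_id is not None and schema_id in allowed_ids.get(topic, set())
```

Because the check only reads the 5-byte header, a broker can reject unframed or mis-tagged messages without deserializing the payload.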

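Schema Linking

Synchronize schemas in real time across cloud and hybrid environments.

Client-side field-level encryption (CSFLE)

Protect your most sensitive data by encrypting specific fields within messages at the client level.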

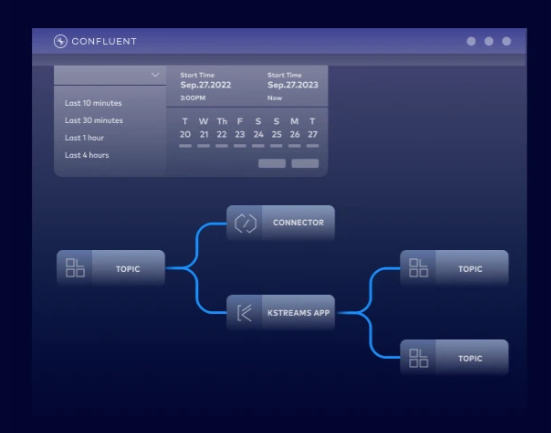

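Monitor and troubleshoot data flows in minutes

Multiple data producers can write events to a shared log, and multiple consumers can read those events independently and in parallel. __You can add, evolve, recover, and scale producers or consumers without creating dependencies between them__.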
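The shared-log model can be sketched with a single in-memory partition, assuming each consumer simply remembers its own read offset. `SharedLog`, `poll`, and `seek` are hypothetical names, not the Kafka client API, but the sketch shows why adding or rewinding one consumer never affects another.

```python
from dataclasses import dataclass, field


@dataclass
class SharedLog:
    """Toy append-only log: producers append, each consumer tracks its own offset."""
    events: list = field(default_factory=list)
    offsets: dict = field(default_factory=dict)  # consumer name -> next offset to read

    def append(self, event) -> int:
        """Append an event from any producer and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, consumer: str, max_events: int = 10) -> list:
        """Read the next batch for one consumer without affecting any other consumer."""
        start = self.offsets.get(consumer, 0)  # a new consumer starts from the beginning
        batch = self.events[start:start + max_events]
        self.offsets[consumer] = start + len(batch)
        return batch

    def seek(self, consumer: str, offset: int) -> None:
        """Move one consumer's position, e.g. to recover it by replaying events."""
        self.offsets[consumer] = offset
```

Since events are never removed on read, a new consumer can join at any time and replay history, while existing consumers continue from their own offsets.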

__Confluent integrates legacy and modern systems__.

Accelerate time to market with self-service access

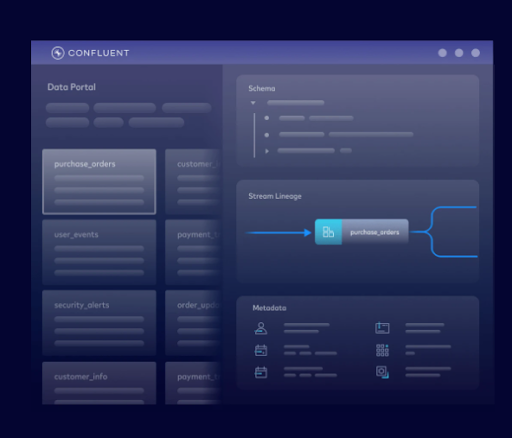

Stream Catalog organizes data streaming topics into data products that operational, analytical, or AI systems can access.

Metadata tagging

Enrich topics with business information about teams, services, use cases, systems, and more.
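A minimal sketch of tag-based enrichment and search, assuming a hypothetical in-memory `TopicCatalog` class. In Confluent Cloud, tags and business metadata are managed through Stream Catalog, not through code like this; the sketch only shows how tags plus key/value metadata make topics findable.

```python
from collections import defaultdict
from typing import List, Optional


class TopicCatalog:
    """Hypothetical in-memory catalog: topics enriched with tags and business metadata."""

    def __init__(self) -> None:
        self._tags = defaultdict(set)       # topic -> {tag, ...}
        self._metadata = defaultdict(dict)  # topic -> {"team": ..., "use_case": ...}

    def tag(self, topic: str, *tags: str) -> None:
        self._tags[topic].update(tags)

    def describe(self, topic: str, **metadata: str) -> None:
        self._metadata[topic].update(metadata)

    def search(self, tag: Optional[str] = None, **metadata: str) -> List[str]:
        """Return topics that carry the tag (if given) and match every metadata key/value."""
        topics = set(self._tags) | set(self._metadata)
        return sorted(
            t for t in topics
            if (tag is None or tag in self._tags.get(t, set()))
            and all(self._metadata.get(t, {}).get(k) == v for k, v in metadata.items())
        )
```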

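Data Portal

Lets end users search, query, discover, request access to, and view each data product through a UI.

Data enrichment

Consume and enrich data streams, run queries, and create streaming data pipelines directly from the UI.

Programmatic access

Search, create, and tag topics through REST and GraphQL APIs based on Apache Atlas.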

Future-proof your data for any environment or architecture

__Respond to changing business requirements without breaking downstream workloads__ for applications, analytics, or AI.

Safe schema evolution

Add new fields, modify data structures, and update formats while maintaining compatibility with existing dashboards, reports, and ML models.

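Automatic compatibility checks

Validate schema changes before deployment to prevent disruptions to downstream analytics applications and AI pipelines.

Flexible migration paths

Choose backward, forward, or full compatibility mode to fit your specific upgrade requirements and organizational constraints.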
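The difference between the compatibility modes comes down to which side reads which data. The sketch below assumes a deliberately simplified schema model, `{field_name: has_default}`, that captures only Avro-style rules for adding and removing fields. Real checks in Schema Registry also cover type changes, and the transitive modes compare against all earlier versions rather than only the latest one.

```python
from typing import Dict

# Hypothetical simplified model: a record schema is {field_name: has_default}.
Schema = Dict[str, bool]


def can_read(reader: Schema, writer: Schema) -> bool:
    """A reader can decode writer data if every reader-only field has a default.

    Fields the writer has but the reader lacks are simply ignored.
    """
    return all(has_default for name, has_default in reader.items() if name not in writer)


def is_compatible(new: Schema, old: Schema, mode: str) -> bool:
    if mode == "BACKWARD":  # consumers on the new schema read data written with the old one
        return can_read(new, old)
    if mode == "FORWARD":   # consumers still on the old schema read data written with the new one
        return can_read(old, new)
    if mode == "FULL":      # both directions must work
        return can_read(new, old) and can_read(old, new)
    raise ValueError(f"unknown mode: {mode}")
```

With this model, adding a field with a default is compatible in every mode, adding a required field is forward but not backward compatible, and dropping a required field is backward but not forward compatible.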

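ACERTUS uses Schema Registry to edit and add order contracts easily, without changing the underlying code. Changes to schemas and topic data are recorded in real time, so when data users find the data they need, they can trust that it is accurate and reliable.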

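With Stream Governance, Confluent ensures data quality and security, helping Vimeo safely scale and share data products across the business.

"It's amazing to discover how much more we can do once we no longer have to worry about how to do things. We can trust Confluent to provide a secure, robust Kafka platform with a wealth of value-added features such as security, connectors, and Stream Governance."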

New developers receive $400 in credits for their first 30 days (no sales rep required).

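Confluent provides everything you need to:

- Build with client libraries for languages such as Java and Python, sample code, 120+ pre-built connectors, and a Visual Studio Code extension

- Learn with on-demand courses, certifications, and a global community of experts

- Operate with the CLI, IaC support for Terraform and Pulumi, and OpenTelemetry observability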

Sign up below with your cloud marketplace account, or sign up directly with Confluent.

Confluent Cloud

Cloud-native service for Apache Kafka®

Stream Governance with Confluent | FAQs

What is Stream Governance?

Stream Governance is a suite of fully managed tools that help you ensure data quality, discover data, and securely share data streams. It includes components like Schema Registry for data contracts, Stream Catalog for data discovery, and Stream Lineage for visualizing data flows.

Why is Stream Governance important for Apache Kafka?

As Kafka usage scales, managing thousands of topics and ensuring data quality becomes a major challenge. Governance provides the necessary guardrails to prevent data chaos, ensuring that the data flowing through Kafka is trustworthy, discoverable, and secure. This makes it possible to democratize data access safely.

What components are included in Confluent's Stream Governance?

Stream Governance combines three areas: Stream Quality (Schema Registry, data contracts, Schema Validation, Schema Linking, and client-side field-level encryption), Stream Catalog (metadata tagging, the Data Portal, and programmatic access), and Stream Lineage for visualizing data flows.

How is Confluent's approach different from open source Kafka governance?

While open source provides basic components like Schema Registry, Confluent offers a complete, fully managed, and integrated suite. Stream Governance combines data quality, catalog, and lineage into a single solution that is deeply integrated with the Confluent Cloud platform, including advanced features like a Data Portal, graphical lineage, and enterprise-grade SLAs.

Does Stream Governance support industry standards like Avro and Protobuf?

Yes. Confluent Stream Governance provides robust support for industry-standard data serialization formats through its integrated Schema Registry, giving you the flexibility to use the formats that best suit your needs.

Schema Registry centrally stores and manages schemas for your Kafka topics and supports Apache Avro, Protobuf, and JSON Schema.

This ensures that all data produced to a Kafka topic adheres to a predefined structure. When a producer sends a message, it serializes the data according to the registered schema, which typically converts it into a compact binary format and embeds a unique schema ID. When a consumer reads the message, it uses that ID to retrieve the correct schema from the registry and accurately deserialize the data back into a structured format. This process not only enforces data quality and consistency but also enables safe schema evolution over time.
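The framing described above can be sketched with the standard library, assuming Confluent's wire format for Avro and JSON Schema: a zero magic byte, then the schema ID as a 4-byte big-endian integer, then the payload. JSON stands in for Avro's binary encoding here, and `SCHEMAS` is a hypothetical stand-in for the registry. Protobuf messages additionally carry message indexes after the schema ID, which this sketch omits.

```python
import json
import struct
from typing import Tuple

MAGIC_BYTE = 0
HEADER = struct.Struct(">bI")  # magic byte + 4-byte big-endian schema ID (5 bytes total)

# Hypothetical stand-in for the registry: schema ID -> schema.
SCHEMAS = {42: {"type": "object", "required": ["id", "amount"]}}


def serialize(record: dict, schema_id: int) -> bytes:
    """Producer side: prefix the encoded payload with the magic byte and schema ID."""
    payload = json.dumps(record, separators=(",", ":")).encode("utf-8")
    return HEADER.pack(MAGIC_BYTE, schema_id) + payload


def deserialize(message: bytes) -> Tuple[dict, dict]:
    """Consumer side: read the schema ID, look the schema up, then decode the payload."""
    magic, schema_id = HEADER.unpack_from(message)
    if magic != MAGIC_BYTE:
        raise ValueError(f"unknown magic byte: {magic}")
    schema = SCHEMAS[schema_id]  # a real client fetches (and caches) this from the registry
    record = json.loads(message[HEADER.size:].decode("utf-8"))
    return record, schema
```

Embedding only a small ID, rather than the full schema, keeps each message compact while still letting any consumer recover the exact schema the producer used.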

Can I use Stream Governance on-premises?

The complete Stream Governance suite, including the Data Portal and interactive Stream Lineage, is exclusive to Confluent Cloud. However, core components like Schema Registry are available as part of the self-managed Confluent Platform.

Is Stream Governance suitable for regulated industries?

Yes. Stream Governance is suitable for regulated industries and provides the tools you need to ensure data security and compliance with industry and regional regulations. Stream Lineage helps with audits, while schema enforcement and data quality rules help ensure data integrity. Across the fully managed data streaming platform, Confluent Cloud also holds numerous industry certifications such as PCI, HIPAA, and SOC 2.

How can I get started with Stream Governance?

You can get started with Stream Governance by signing up for a free trial of Confluent Cloud. New users receive $400 in cloud credit to apply to any of the data streaming, integration, governance, and stream processing capabilities on the data streaming platform, allowing you to try Stream Governance features firsthand.

We also recommend exploring key Stream Governance concepts in the Confluent documentation and following the Confluent Cloud Quick Start, which walks you through deploying your first cluster, producing and consuming messages, and inspecting them with Stream Lineage.