
USE CASE | CDC PIPELINES

Building Change Data Capture (CDC) Pipelines With Apache Flink®

Change Data Capture (CDC) is used to replicate data across relational databases, which makes possible some of the most important back-end operations, such as data synchronization, migration, and disaster recovery. In addition, with stream processing you can build CDC data pipelines that power event-driven applications and trusted data products, with fresh, processed data integrated across all of your distributed systems, both legacy and modern.

See how Confluent lets Apache Kafka® and Apache Flink® work together to build streaming CDC pipelines and power real-time analytics with fresh, high-quality data.

Go from making decisions on stale data to reacting in real time

Reduce your processing costs by 30%

Deliver trusted, clean data without manual break-fix work

The 3 Big Challenges of Traditional CDC Architectures

Most organizations already use log-based CDC systems to turn database changes into events.

- High data latency caused by batch processing. Instead of event streams, most organizations use batch processing to materialize log data in downstream systems. That means data systems stay out of sync for hours (or even days) while they wait for the next batch job to run.

- Redundant processing costs. The extra costs come both from having to build and maintain point-to-point integrations and from all the redundant processing that happens across these pipelines.

- Loss of trust caused by constant manual break-fix work. Ensuring data accuracy across all these pipelines takes a great deal of manual effort and leaves the process prone to human error. This approach also forces teams to fix problems reactively, because those problems can only be identified once they affect another consumer.

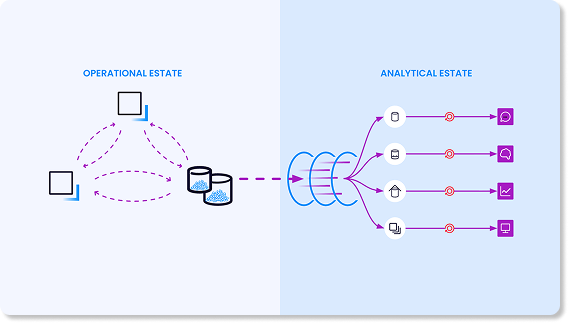

Building Change Data Capture (CDC) pipelines with Kafka and Flink lets you unify your CDC and batch analytics workloads and eliminate processing silos. Instead of waiting on batch processing, absorbing redundant processing costs, or depending on brittle pipelines, this architecture lets you:

- Capture CDC data as event streams

- Use Flink to process those streams in real time

- Materialize CDC streams instantly in your operational and analytical environments

How to Get More From Your Data and Pay Less for It

With serverless Apache Flink® on Confluent's data streaming platform, you can shift processing left, before data ingestion, to improve latency, data portability, and cost-effectiveness.

- Data enrichment: enhance data with additional context to improve its accuracy.

- Data reusability: share consistent data streams across all applications.

- Real time, everywhere: enable low-latency applications to respond to events instantly.

- Cost reduction: optimize resource usage and eliminate redundant processing.

Application development teams can build data pipelines to trigger actions at exactly the right moment

By shifting left the ingestion of data into data lakes and data warehouses, you can run analytics, build real-time search indexes, develop ML pipelines, and optimize security information and event management (SIEM).

Analytics teams can prepare and shape data to power event-driven applications with triggers for computations, state updates, and other external actions

For example, they can use it in applications built for generative AI (GenAI) solutions, fraud detection, real-time alerts and notifications, marketing personalization, and much more.

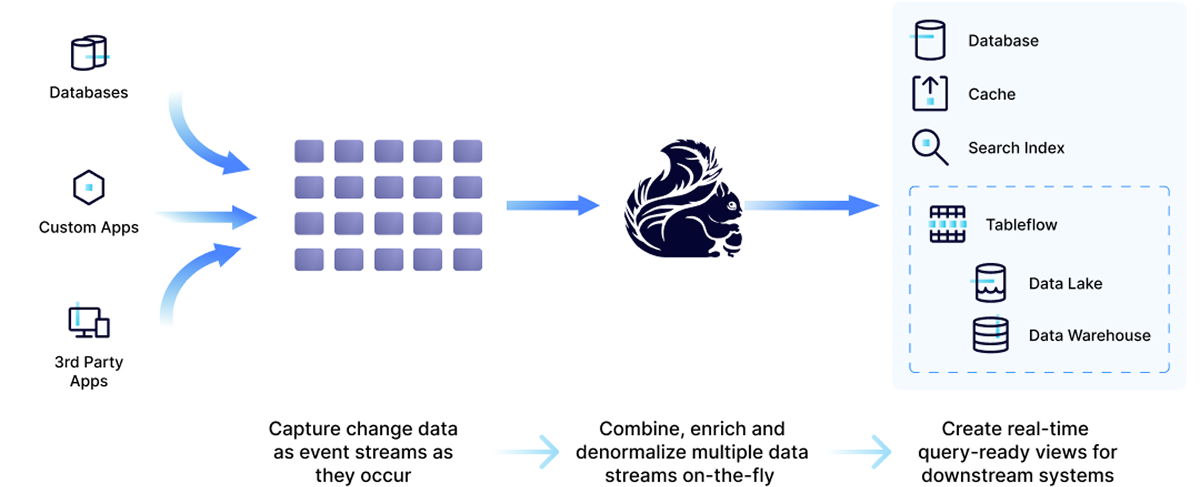

Three Steps to Building CDC Pipelines With Confluent Stream Processing

With Confluent, you can process your CDC streams before materializing them in your analytics environment. It's simple: filter, join, and enrich the change data captured in your Kafka topics with Flink SQL. You can then materialize data streams in both your operational and analytical environments.
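As a rough illustration of the three operations named above (filter, join, enrich), here is a minimal plain-Python sketch. It is not Flink SQL and does not use any Confluent API; the event fields (`order_id`, `customer_id`, `amount`), the `CUSTOMERS` lookup table, and the `enrich_orders` helper are all hypothetical names chosen for the example.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical reference data keyed by customer_id. In a real pipeline this
# would be a second stream or table; here it is an in-memory dict.
CUSTOMERS: Dict[int, dict] = {
    1: {"name": "Ada", "tier": "gold"},
    2: {"name": "Linus", "tier": "silver"},
}


def enrich_orders(changes: Iterable[dict], customers: Dict[int, dict]) -> Iterator[dict]:
    """Filter, join, and enrich order change events one at a time."""
    for change in changes:
        # Filter: drop events with a non-positive amount (assumed to be invalid).
        if change["amount"] <= 0:
            continue
        # Join: look up the matching customer record by key.
        customer = customers.get(change["customer_id"])
        if customer is None:
            continue  # inner-join semantics: skip events with no match
        # Enrich: emit the change event with the customer context added.
        yield {**change, "customer_name": customer["name"], "tier": customer["tier"]}


order_changes = [
    {"order_id": 100, "customer_id": 1, "amount": 25.0},
    {"order_id": 101, "customer_id": 2, "amount": 0.0},   # filtered out
    {"order_id": 102, "customer_id": 3, "amount": 12.5},  # no matching customer
]
enriched = list(enrich_orders(order_changes, CUSTOMERS))
```

Only the first order survives both the filter and the join, and it leaves the function carrying the customer's name and tier. A stream processor applies the same logic continuously as events arrive rather than over a finished list.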

Streaming CDC: Demos and Case Studies

Confluent customers are using Flink to improve their CDC use cases, such as data synchronization and disaster recovery, and to deliver new real-time capabilities.

Explore the GitHub repository and learn how to implement real-time analytics for different use cases: Customer 360, product sales, or purchase trends.

You can choose between two labs:

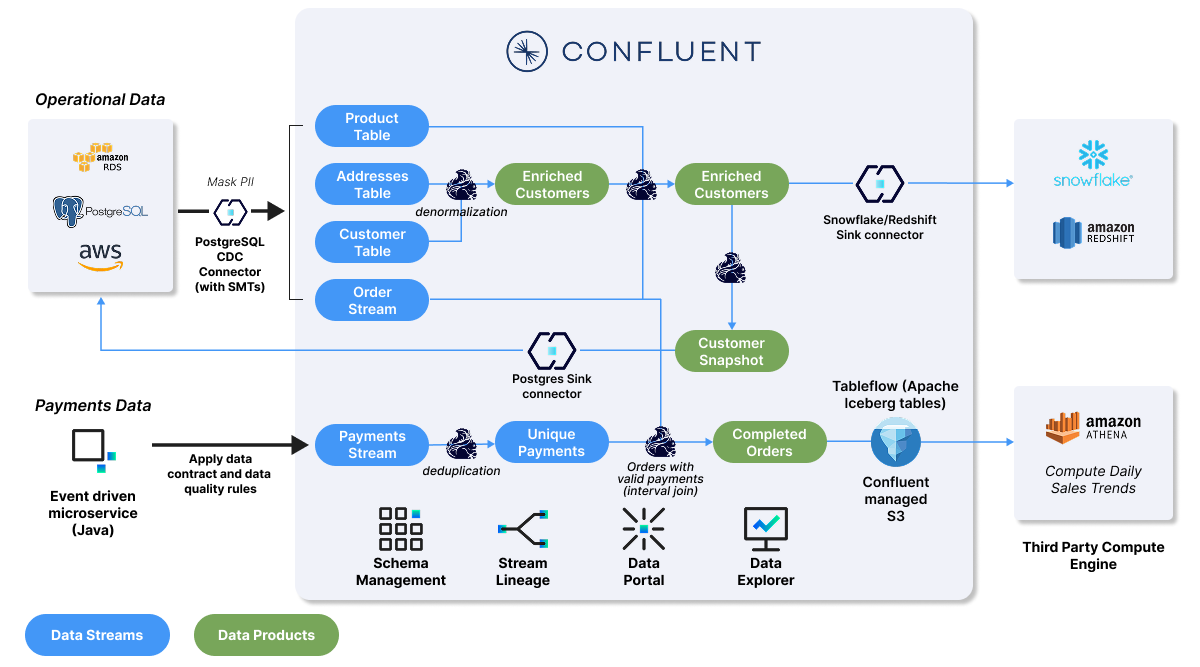

Product Sales Aggregation and Customer360 Lab

Clean and aggregate product sales data, ingest the enriched data into Snowflake or Redshift, and then create a data product for operational databases to consume.

Daily Sales Trends Lab

Validate payments, analyze sales patterns to identify daily trends, and materialize the Kafka topic as an Iceberg table in Amazon Athena to extract detailed insights.

"Adopting Change Data Capture has allowed us to get the most out of real-time data. The direct result has been the ability to migrate from batch processes to stream processing."

"With Flink, we now have the opportunity to shift processing left and perform many transformations and computations on our data before it reaches Snowflake. This will optimize our data processing costs so that we can increase the amount of data we have available."

"With Confluent, we can now easily build the CDC pipelines we need to acquire data in real time instead of retrieving it in batches every 10 minutes, which lets us detect fraud quickly."

"The hardest part was not having enough in-house resources to build CDC systems and stream processing workflows. Now we can build our own CDC systems, and the development team was able to reduce its workload while building the streaming process."

"With Confluent Cloud, we can now provide real-time operational data to any team that needs it. Having that capability is really important and significantly reduces our operational burden."

Ready to start processing CDC data in real time with Flink? Create a Confluent account and deploy a stream processing architecture that is ready for any environment.

Try Confluent Cloud for Apache Flink®, available on AWS, Google Cloud, and Microsoft Azure, to build applications that leverage Kafka and Flink far more cost-effectively and with all the simplicity of a cloud-native, serverless environment.

In addition, with Confluent Platform for Apache Flink®, you can move your existing Flink workloads to a self-managed data streaming platform, ready to deploy on-premises or in your private cloud.

Confluent Cloud

Fully managed, cloud-native service for Apache Kafka®

Streaming CDC With Flink | FAQs

How does a streaming approach improve on batch ELT/ETL pipelines?

A streaming approach allows you to "shift left," processing and governing data closer to the source. Instead of running separate, costly ELT jobs in multiple downstream systems, you process the data once in-stream with Flink to create a single, reusable, high-quality data product. This improves data quality, reduces overall processing costs and risks, and gets trustworthy data to your teams faster.

Why use Apache Flink® for processing real-time CDC data?

Apache Flink® is the de facto standard for stateful stream processing, designed for high-performance, low-latency workloads—making it ideal for CDC. Its ability to handle stateful computations allows it to accurately interpret streams of inserts, updates, and deletes to maintain a correct, materialized view of data over time. Confluent offers a fully managed, serverless Flink service that removes the operational burden of self-management.
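To make "interpreting inserts, updates, and deletes to maintain a materialized view" concrete, the following plain-Python sketch folds a changelog into a keyed view. It only illustrates the idea and is not how Flink is implemented or invoked; the `apply_changes` helper and the `(operation, key, row)` event shape are assumptions made for this example.

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical change event: (operation, primary key, new row or None).
ChangeEvent = Tuple[str, int, Optional[dict]]


def apply_changes(view: Dict[int, dict], events: Iterable[ChangeEvent]) -> Dict[int, dict]:
    """Fold a stream of change events into a keyed view of the latest rows."""
    for op, key, row in events:
        if op in ("insert", "update"):
            view[key] = row      # upsert: the latest row for a key wins
        elif op == "delete":
            view.pop(key, None)  # remove the row; tolerate repeated deletes
        else:
            raise ValueError(f"unknown operation: {op}")
    return view


events = [
    ("insert", 1, {"status": "new"}),
    ("insert", 2, {"status": "new"}),
    ("update", 1, {"status": "paid"}),
    ("delete", 2, None),
]
view = apply_changes({}, events)
```

The dictionary plays the role of the state a stateful processor keeps between events: after the four changes, only key 1 remains, with its most recent row.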

How do you handle data consistency and quality in a real-time CDC pipeline?

Data consistency is maintained by processing CDC events in-flight to filter duplicates, join streams for enrichment, and aggregate data correctly before it reaches any downstream system. Confluent's platform integrates Flink with Stream Governance, including Schema Registry, to define and enforce universal data standards, ensuring data compatibility, quality, and lineage tracking across your organization.
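One common way to filter duplicates in flight is to remember the highest change position already seen for each key and drop anything at or below it. The sketch below shows that idea in plain Python under stated assumptions: the `key` and `position` fields are hypothetical (a position could be a log sequence number or an offset), and positions are assumed to increase monotonically per key.

```python
from typing import Dict, Iterable, Iterator


def deduplicate(events: Iterable[dict]) -> Iterator[dict]:
    """Drop events whose position was already processed for the same key."""
    last_seen: Dict[str, int] = {}
    for event in events:
        key, position = event["key"], event["position"]
        # A replayed or duplicated event carries a position we have already passed.
        if position <= last_seen.get(key, -1):
            continue
        last_seen[key] = position
        yield event


incoming = [
    {"key": "a", "position": 1, "value": "created"},
    {"key": "a", "position": 2, "value": "updated"},
    {"key": "a", "position": 2, "value": "updated"},  # duplicate delivery
    {"key": "b", "position": 1, "value": "created"},
    {"key": "a", "position": 3, "value": "closed"},
]
unique = list(deduplicate(incoming))
```

The replayed event for key `a` at position 2 is dropped, while the events for key `b` are tracked independently.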

How does Confluent Cloud handle changes to the source database schema?

When your CDC pipeline is integrated with Confluent Schema Registry, it can automatically and safely handle schema evolution. This ensures that changes to the source table structure—like adding or removing columns—do not break downstream applications or data integrity. The platform manages schema compatibility, allowing your data streams to evolve seamlessly.

What are the main benefits of using a fully managed service for Apache Flink® like Confluent Cloud?

A fully managed service eliminates the significant operational complexity, steep learning curve, and high in-house support costs associated with self-managing Apache Flink®. With Confluent, you get a serverless experience with elastic scalability, automated updates, and pay-as-you-go pricing, allowing your developers to focus on building applications rather than managing infrastructure. In addition, native integration between Apache Kafka® and Apache Flink® and pre-built connectors allow teams to build and scale fast.

How does Confluent Cloud simplify processing Debezium CDC events?

Confluent Cloud provides first-class support for Debezium, an open source distributed platform for change data capture. Pre-built connectors can automatically interpret the complex structure of Debezium CDC event streams, simplifying the process of integrating with Kafka and Flink.
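For context on the "complex structure" mentioned above, a Debezium change event is an envelope with `before`, `after`, `op`, and `ts_ms` fields, where `op` is `c` (create), `u` (update), `d` (delete), or `r` (snapshot read). The sketch below flattens such an envelope by hand in plain Python. It is only an illustration of what a connector handles for you, it assumes the envelope arrives as unwrapped JSON (no outer `schema`/`payload` wrapper), and the `flatten` helper and sample row are hypothetical.

```python
import json

# Map Debezium operation codes to plain changelog operations.
# Snapshot reads ("r") are treated as inserts in this sketch.
OP_NAMES = {"c": "insert", "r": "insert", "u": "update", "d": "delete"}


def flatten(raw: str) -> dict:
    """Turn a Debezium-style envelope into a flat change record."""
    envelope = json.loads(raw)
    op = envelope["op"]
    # Deletes carry the row image in "before"; other operations use "after".
    row = envelope["before"] if op == "d" else envelope["after"]
    return {"op": OP_NAMES[op], "ts_ms": envelope["ts_ms"], "row": row}


sample = json.dumps({
    "before": {"id": 7, "email": "old@example.com"},
    "after": {"id": 7, "email": "new@example.com"},
    "op": "u",
    "ts_ms": 1700000000000,
})
change = flatten(sample)
```

Here `change` describes an update whose row is the `after` image; for a delete event the same helper would return the `before` image instead.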