
How Data Asset Management Addresses Modern Data Challenges

The evolution of software applications and the proliferation of smartphones and sensors have generated data at greater volumes and faster speeds than ever before. Businesses can use this data to unlock insights, develop and improve features for customers faster, generate revenue, save money, and more. That is, if they have the right data strategy.

The challenge is that many businesses still keep their data in silos and send it in batches from where it’s generated to data lakes for later analysis. This means they are slower to act on the data flowing into their business and risk falling behind competitors.

Read on to learn how data asset management (DAM) tools and techniques can help your organization ensure the quality and accessibility of both batch and real-time data. We’ll explain how you can enable your teams to act swiftly on the latest information while avoiding the pitfalls common to DAM programs today, by going beyond managing data assets to building data products.

What is Data Asset Management?

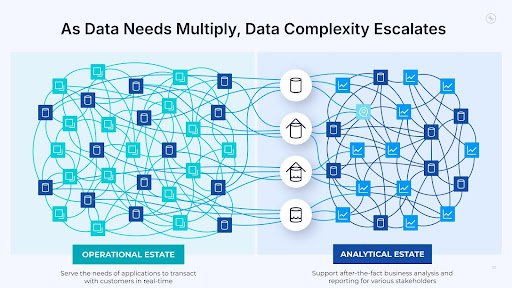

Historically, many organizations and technology leaders viewed data management as a cost center. Businesses must spend money on data infrastructure (warehouses, databases, and data lakes) to keep track of and move the data they generate across two domains: the operational and analytical estates.

Each estate has its own traditional approaches to integrating, governing, and processing data, which makes a holistic approach to managing data across both estates more complicated. Despite these challenges, competitive organizations today see the value of data, particularly its importance in delivering real-time insights and event-driven integration that drive product innovation, differentiation, and competitive advantage. That’s why data asset management (DAM) is so crucial.

The operational-analytical divide in most modern organizations makes data management more fragmented and worsens data consistency and accessibility across the two domains.

DAM treats data as a valuable, managed asset with a distinct life cycle. The key components of a robust DAM strategy include technologies and processes to accurately and securely discover, classify, govern, and manage data through its life cycle of creation, use, storage, and potential deletion.

While DAM is crucial, it’s not without its own challenges. These include siloed and fragmented data, data security and privacy, inconsistent governance, integration with other systems, data accuracy, and more. Let’s compare DAM against some related terms to explore how each approaches these common data challenges from different angles.

What's the Difference Between DAM and Data Management?

Data management and data asset management sound similar but are different concepts, and understanding the difference is crucial to a robust data strategy.

Broadly, data management is the practice of collecting, organizing, storing, protecting, and using data within an organization to enhance data-driven decision-making.

DAM, on the other hand, generally represents a more focused and intentional approach to treating data as a strategic asset. DAM sees data not just as bits of information to be moved, stored, and protected, but as a highly valuable resource that must be carefully curated to unlock its value. Think of it as the difference between parking money in a simple checking or savings account (data management) and putting your money to work in a managed fund (data asset management).

DAM is about actively increasing the utility of data, and therefore unlocking more value from it faster, rather than just putting policies in place for how it’s moved and stored.

In essence, DAM encompasses elements of traditional data management but adds a strategic layer that helps organizations organize and leverage data assets to create value. Done correctly, DAM facilitates data governance, streamlines quality assurance, enhances data integration, and improves data lifecycle management.

Integration in particular is key, as data is much less valuable if it cannot be easily and securely accessed, streamed, shared, and used across the business.

Where Does Data Governance Fit Within DAM?

DAM is also different from data governance, which is an aspect of both data asset management and data management.

Data governance is a specific discipline that focuses on the policies, processes, and standards organizations use to ensure the responsible and ethical use of data, often in compliance with region-, industry-, and organization-specific privacy regulations.

In practice, this means creating frameworks for handling and using data within the organization, ensuring that data is handled securely and that the organization is aware of, and compliant with, the relevant rules and regulations surrounding data use.

Data governance also helps ensure data quality by establishing clear policies and processes that address accuracy, completeness, consistency across systems, and validity against established business rules and data formatting requirements.
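As a rough illustration, quality dimensions like these can be codified as named, machine-checkable rules. The Python below is a minimal sketch assuming hypothetical order records with `order_id`, `email`, `amount`, and `currency` fields; the rules themselves are examples, not a standard or any product's API.

```python
import re
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]

@dataclass(frozen=True)
class QualityRule:
    name: str
    dimension: str                     # e.g. "completeness", "validity", "format"
    check: Callable[[Record], bool]    # returns True when the record passes

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
CURRENCY_RE = re.compile(r"^[A-Z]{3}$")

# Hypothetical rules for an order record; a real policy would come from the business.
RULES = [
    QualityRule("order_id present", "completeness",
                lambda r: bool(r.get("order_id"))),
    QualityRule("email well-formed", "validity",
                lambda r: isinstance(r.get("email"), str) and bool(EMAIL_RE.match(r["email"]))),
    QualityRule("amount non-negative", "validity",
                lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    QualityRule("currency is a 3-letter code", "format",
                lambda r: isinstance(r.get("currency"), str) and bool(CURRENCY_RE.match(r["currency"]))),
]

def evaluate(record: Record, rules: list[QualityRule] = RULES) -> list[str]:
    """Return the names of the rules a record violates; an empty list means it passes."""
    return [rule.name for rule in rules if not rule.check(record)]

good = {"order_id": "A-1", "email": "a@example.com", "amount": 19.99, "currency": "USD"}
bad = {"order_id": "", "email": "not-an-email", "amount": -5, "currency": "usd"}

print(evaluate(good))  # []
print(evaluate(bad))   # all four rule names
```

Tagging each rule with a dimension lets a governance team report which kind of quality problem is most common, rather than only whether a record passed.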

Ultimately, it’s virtually impossible to have effective DAM without strong data governance. Data governance provides a framework for “how” data is used, while DAM focuses on identifying “what” can and should be done with data, so that data can be managed as an asset to achieve those purposes explicitly.

For example, organizations that operate in regulated industries like healthcare or financial services need clearly communicated goals and objectives for data use, as well as robust policies and controls, to ensure data can be used as a secure and strategic asset.

Data governance is especially useful when dealing with real-time streams of data, which can be harnessed using Apache Kafka®, an open-source distributed streaming system used for stream processing, real-time data pipelines, and data integration at scale.

For example, AO.com, an electronics retailer, used Confluent’s data governance capabilities to define and enforce universal data standards that enable data compatibility at scale. Being able to trust and reuse data across multiple contexts has unlocked use cases that the team hadn’t even considered when first adopting Kafka or Confluent.

An engineering lead at the company shared: “Once we made data available as an event stream via Confluent Cloud, we soon saw two or three other teams coming onboard to access that data for multiple use cases that weren’t even considered as part of the original plan. Those teams can rapidly achieve their goals in a decoupled way, that is, without creating new point-to-point integrations. As a result, we’re more agile and our teams can move much faster because they’re less dependent on other parts of the organization.”

What Are the Benefits of DAM?

Backed by the right technology and clear procedures, strong and consistently enforced DAM policies can help organizations:

- Ensure data compliance and reduce regulatory risk by providing a structured, transparent, and controlled approach to managing data.
- Significantly improve data quality and reliability. DAM processes do this by ensuring data accuracy, completeness, consistency, and accessibility, which is critical for meeting legal and regulatory requirements such as the European Union's General Data Protection Regulation (GDPR) or the U.S. Health Insurance Portability and Accountability Act (HIPAA).
- Accelerate data-driven decision-making and innovation. DAM allows organizations to integrate data from an array of sources (for example, a retailer’s logistics, point-of-sale, and inventory systems) and then build analytical workflows that drive faster, more informed business decisions.

By improving data quality and consistency and turning data into a strategic asset, DAM helps business leaders locate and access data more easily and make more informed decisions based on it. And because operational performance can be understood and analyzed more quickly, DAM helps organizations develop new features and respond to customer behavior more efficiently.

DAM also helps reduce costs and drive efficiencies by creating a central repository for data, which avoids creating and maintaining multiple, often outdated, copies of the same asset. With this single source of truth, data can be tagged and categorized effectively, which helps eliminate duplicate or stale data and optimize storage costs. DAM also makes searching for and retrieving data faster, leads to more efficient workflows and approvals, and allows data to be shared and reused more securely and effectively.

More broadly, DAM drives efficiencies by breaking down data silos, improving data quality, making data more secure and accessible, enhancing decision-making, and in general providing a more scalable and flexible data architecture.

However, DAM also comes with challenges, including an overemphasis on technology solutions at the expense of changing organizational culture, trying to manage too much data at once, and an inability to demonstrate clear value. Let’s dive deeper into these challenges.

Common Challenges That DAM Alone Struggles to Solve

DAM programs have the potential to deliver a multitude of benefits to organizations, but they often fall short, for a variety of reasons. Data can be turned into an “asset” on paper in a DAM program, but if those data assets are not actively made accessible, discoverable, and usable for diverse business needs, then the organization is doing DAM in name only.

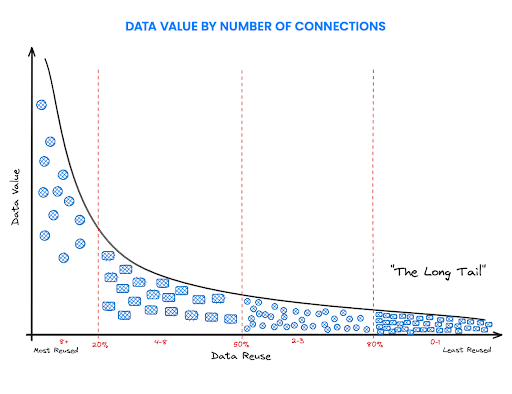

The more data assets are accessed, integrated, and reused, the more value they generate for the business.

So what stops organizations from applying DAM principles in a way that maximizes data quality, data accessibility, and data reuse?

First, DAM programs often become too focused on the technology used to manage data rather than the systems used to access it. In such cases, technology leaders often fail to communicate effectively with, and understand the needs of, the business users who benefit most from access to the data. For example, an IT department might invest heavily in a cutting-edge data lake without fully grasping that what the marketing and sales teams need most is easily accessible, clean analytics on campaign performance and customer segmentation, not raw, unstructured data.

And just as there is often an overemphasis on IT solutions, there is too little focus and rigor put into changing an organization’s data culture: how its teams approach storing, analyzing, using, and managing data. DAM programs cannot truly succeed unless leaders improve data literacy across the organization, change the way users see and treat data, and make data a strategic asset that can help the business.

DAM programs also can suffer from complexity and scope creep, as leaders try to implement wide-ranging management changes to all their data at once. This can lead to data and engineering teams becoming overwhelmed, delays in implementation, and thus delays in the return on investment that DAM has the potential to bring.

As a result, many users, and even business leaders, may come to see DAM as not worth the time and cost. If DAM programs cannot demonstrate a clear return on investment (ROI) and measurably improved business outcomes, executive support will likely wither.

Ultimately, DAM should not be about managing data as an end in itself. A successful DAM program needs to be a way to use data strategically to improve the performance of the organization.

Data Assets vs. Data Products

As organizations think about DAM programs, a concept leaders should consider is managing data as a product, a related but distinct concept from managing data assets.

Data products are well-governed, reusable, and accessible data assets designed with specific users and ultimate business use cases in mind. Building data products not only ensures data quality, usability, and availability for their intended consumers, it can also help bridge data integration, governance, and processing across operational and analytical environments.

Unlike raw datasets, a data product is purpose-built with governance, structure, and maintenance to ensure it is reliable and ready for use within an organization. Confluent’s data streaming platform helps organizations like Victoria’s Secret, Acertus, DISH Wireless, and Globe Group build universal data products.

With universal data products, businesses can streamline access, enhance data quality, and drive innovation. This means organizations can fundamentally shift data governance and quality from a separate, often reactive, bureaucratic function to an inherent part of the data's development and life cycle. This involves documenting the data’s purpose, schema, quality, and ownership, thus making it easy for consumers to find, understand, and trust the data they need.

Data product strategies help ensure that data is of high quality and governed from the moment it’s created, rather than being “cleaned up” later in its life cycle. In The Practical Guide to Building Data Products for IT Leaders, we explain how to determine which data products will drive the most impact for your organization.

Key Differences Between DAM and Data Product Strategy

A data product strategy represents a paradigm shift from traditional data asset management. Instead of raw, uncurated datasets, data products are purpose-built, refined, and governed data assets designed to be consumed directly by various users and applications, maximizing accessibility and usability for specific business outcomes.

Here’s how the two approaches differ:

- In terms of user-centric design, a data product strategy emphasizes understanding the specific needs, use cases, and consumption patterns of target data consumers (for example, analysts, developers, machine learning models, and business applications). Traditional DAM collects and stores data based on source system structures or technical requirements, with less emphasis on the end user's consumption needs. Consumers typically receive raw or semi-processed data and are responsible for transforming and interpreting it, leading to duplicated effort and potential inconsistencies.
- Each data product has a clear owner within a specific business domain, which empowers domains to define, enrich, and maintain the quality of their data products. Domains are accountable for the data's utility and accuracy, fostering a sense of ownership that directly improves data quality and relevance. With DAM, data is often managed by a central IT or data team, which can become a bottleneck, and business domains may feel disconnected from their data, leading to a mentality where data quality issues are not addressed at the source.
- Data products are published to a centralized data catalog or "data marketplace" with rich metadata, clear documentation (including schema, quality metrics, and usage examples), and defined APIs. This enables self-service discovery, allowing users to find, understand, and subscribe to data products without extensive support from data engineers. In DAM, data discovery is often manual, relying on word of mouth or individual expertise.
- With data products, quality is an inherent feature: data contracts define expected schemas, formats, and quality rules at the point where data is created. Automated validation, lineage tracking, and real-time monitoring are built into the data product's life cycle. In DAM, data quality is often an afterthought, addressed reactively through downstream cleansing. Lineage can be difficult to trace, making it hard to pinpoint the source of errors or understand data transformations, which can erode trust in the data.
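To make "quality at the point of creation" concrete, here is a minimal Python sketch of a producer that checks every record against a data contract before publishing it. The `DataContract` and `ContractedProducer` classes, the `orders` contract, and the in-memory lists standing in for a topic and a dead-letter queue are all hypothetical; a real deployment would more likely express the schema in Avro, Protobuf, or JSON Schema and enforce it through a schema registry.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Record = dict[str, Any]

@dataclass
class DataContract:
    """Hypothetical producer-side contract: required fields, their types, and quality rules."""
    name: str
    version: int
    schema: dict[str, type]                      # field name -> expected Python type
    rules: dict[str, Callable[[Record], bool]] = field(default_factory=dict)

    def violations(self, record: Record) -> list[str]:
        problems = []
        # Schema check: every declared field must be present with the declared type.
        for fname, ftype in self.schema.items():
            if fname not in record:
                problems.append(f"missing field: {fname}")
            elif not isinstance(record[fname], ftype):
                problems.append(f"wrong type for {fname}: expected {ftype.__name__}")
        # Undeclared fields are rejected so the contract remains the source of truth.
        for fname in sorted(record.keys() - self.schema.keys()):
            problems.append(f"undeclared field: {fname}")
        # Business quality rules only run once the record has the right shape.
        if not problems:
            for rule_name, check in self.rules.items():
                if not check(record):
                    problems.append(f"rule failed: {rule_name}")
        return problems

class ContractedProducer:
    """Validates each record before 'publishing'. The lists stand in for a topic and a dead-letter queue."""
    def __init__(self, contract: DataContract):
        self.contract = contract
        self.published: list[Record] = []
        self.dead_letter: list[tuple[Record, list[str]]] = []

    def send(self, record: Record) -> bool:
        problems = self.contract.violations(record)
        if problems:
            self.dead_letter.append((record, problems))   # rejected at the source
            return False
        self.published.append(record)
        return True

orders_contract = DataContract(
    name="orders",
    version=1,
    schema={"order_id": str, "amount": float, "currency": str},
    rules={"amount is positive": lambda r: r["amount"] > 0},
)

producer = ContractedProducer(orders_contract)
producer.send({"order_id": "A-1", "amount": 25.0, "currency": "USD"})   # accepted
producer.send({"order_id": "A-2", "amount": "25", "currency": "USD"})   # wrong type, dead-lettered
producer.send({"order_id": "A-3", "amount": -1.0, "currency": "USD"})   # rule fails, dead-lettered
```

The design choice worth noting is where the check runs: bad records are stopped by the producing team before any consumer sees them, instead of being cleansed downstream by each consumer separately.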

Additionally, data products are typically exposed through well-defined APIs (for example, Apache Kafka® topics, REST APIs, SQL interfaces) and standardized data formats. This "API-first" approach ensures seamless, programmatic consumption by applications, microservices, and analytical tools, promoting interoperability across the organization. In DAM, access methods can be ad-hoc, requiring custom connectors, complex data extracts, or manual data wrangling, leading to integration challenges and fragmented data consumption patterns.

And data products are treated like software products, with metrics tracked on their usage, performance, and the business outcomes they enable. Formal feedback loops are established with consumers to gather input, identify pain points, and inform iterative improvements to the data product, ensuring its continued relevance and value. With DAM, it can often be challenging to measure the direct value or impact of raw data. Feedback mechanisms are often informal or non-existent, making it difficult to identify data quality issues or opportunities for improvement.
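Self-service discovery and usage tracking can also be sketched in a few lines. The Python below models a hypothetical in-memory catalog: each entry records an owning domain, description, tags, schema fields, and a consumption interface; consumers search and subscribe without contacting the producing team; and the catalog counts subscribers and reads so owners have usage data for their feedback loop. `Catalog` and `DataProduct` are illustrative assumptions, not a Confluent API; a production catalog would persist this metadata and collect metrics from the platform itself.

```python
from collections import Counter
from dataclasses import dataclass, field

@dataclass(frozen=True)
class DataProduct:
    """Hypothetical catalog entry: the metadata a consumer needs to find and trust a product."""
    name: str
    owner_domain: str                  # accountable business domain
    description: str
    interface: str                     # how to consume it, e.g. a topic name or REST path
    schema_fields: tuple[str, ...]
    tags: frozenset[str] = frozenset()

@dataclass
class Catalog:
    products: dict[str, DataProduct] = field(default_factory=dict)
    subscriptions: dict[str, set[str]] = field(default_factory=dict)  # product -> consumers
    reads: Counter = field(default_factory=Counter)                   # product -> read count

    def publish(self, product: DataProduct) -> None:
        self.products[product.name] = product
        self.subscriptions.setdefault(product.name, set())

    def search(self, term: str) -> list[str]:
        """Self-service discovery: match the term against names, descriptions, and tags."""
        term = term.lower()
        return sorted(
            p.name for p in self.products.values()
            if term in p.name.lower()
            or term in p.description.lower()
            or term in {t.lower() for t in p.tags}
        )

    def subscribe(self, product_name: str, consumer: str) -> str:
        """Register a consumer and hand back the product's consumption interface."""
        self.subscriptions[product_name].add(consumer)
        return self.products[product_name].interface

    def record_read(self, product_name: str) -> None:
        self.reads[product_name] += 1

    def usage_report(self) -> dict[str, dict[str, int]]:
        """Usage metrics a product owner could review when prioritizing improvements."""
        return {
            name: {"consumers": len(self.subscriptions[name]), "reads": self.reads[name]}
            for name in self.products
        }

catalog = Catalog()
catalog.publish(DataProduct("orders.v1", "sales", "Validated customer orders in real time",
                            "kafka topic: orders.v1", ("order_id", "amount", "currency"),
                            frozenset({"orders", "finance"})))
catalog.publish(DataProduct("inventory.v1", "supply-chain", "Current stock levels per store",
                            "kafka topic: inventory.v1", ("sku", "store_id", "quantity"),
                            frozenset({"inventory"})))

endpoint = catalog.subscribe("orders.v1", "marketing-analytics")
catalog.subscribe("orders.v1", "recommendations")
for _ in range(3):
    catalog.record_read("orders.v1")

print(catalog.search("finance"))   # ['orders.v1']
print(catalog.usage_report())
```

An unused product (here, `inventory.v1` with zero consumers and reads) is itself a useful signal: it prompts the owning domain to ask whether the product is hard to find, poorly documented, or simply not needed.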

At Victoria’s Secret, Confluent has also helped the company adopt a data product mindset, so teams across the organization can view data as reusable assets that data consumers can easily discover and use to build a wide range of valuable use cases. Victoria’s Secret can tap into real-time data and build a matrix from it that other teams then draw on to provide financial metrics, customer metrics, marketing preferences, and personalized recommendations to customers.

Data Products vs. Traditional DAM

| Element | Data Products (Universal Data Products) | Traditional DAM |
|---|---|---|
| User-Centric Design | Data is purposefully built and curated for specific consumer needs and use cases, delivered "ready-to-use." | Data is often collected and stored based on source system structures, requiring significant consumer effort for interpretation. |
| Domain Ownership & Decentralization | Business domains own and are accountable for their data products, fostering quality and relevance at the source. | Centralized IT/data teams often manage data, leading to bottlenecks and less direct business accountability for data quality. |
| Discoverability & Self-Service | Published in a data catalog with rich metadata, documentation, and APIs, enabling easy self-service discovery and subscription. | Data resides in disparate systems with poor documentation, requiring manual discovery and reliance on experts. |
| Quality & Trust by Design | Quality, schemas, and lineage are embedded from the outset via data contracts, automated validation, and continuous monitoring. | Data quality is often addressed reactively downstream; lineage is hard to trace, leading to trust issues. |
| API-First & Interoperability | Exposed via well-defined APIs and standardized formats, enabling seamless, programmatic consumption and integration across systems. | Ad-hoc access methods, custom connectors, and manual extracts hinder interoperability and create fragmented consumption. |
| Measurable Value & Feedback Loops | Usage, performance, and business impact are tracked. Formal feedback loops drive continuous improvement and ensure ongoing relevance. | Difficult to measure direct value; feedback mechanisms are often informal or non-existent, hindering ongoing optimization. |

How Confluent Can Help You with Data Asset Management

You've learned how data asset management (DAM) transforms data into a strategic asset, but true agility comes from treating "data as a product." This paradigm embeds governance, quality, and user-centricity directly into the data's life cycle, making data highly accessible and usable for specific business outcomes. Confluent provides the platform that helps you turn data assets into data products and drive innovation.

To find out more, explore how Confluent's data streaming platform enables these powerful data product capabilities firsthand. Or read our in-depth ebook on adopting a data-product mindset: Conquer Your Data Mess With Universal Data Products